In the world of AI-powered development tools, one architectural decision looms large: which programming language should form the foundation of your system? Traditionally, teams have faced a seemingly binary choice between Python, with its rich AI ecosystem, and Node.js, with its strong protocol handling and IDE integration.

But what if you didn’t have to choose?

Enter hybrid architectures: sophisticated systems that strategically combine Python and Node.js to leverage the strengths of each language while mitigating their respective weaknesses. This approach is gaining traction among teams building advanced developer tools, particularly those that integrate AI capabilities with IDE extensions.

In this comprehensive guide, we’ll explore how hybrid architectures work, their key benefits, implementation strategies, and real-world success stories. You’ll discover why the question isn’t “Python or Node.js?” but rather “How can we best combine Python and Node.js?”

The Case for Hybrid Architectures

Before diving into implementation details, let’s understand why hybrid architectures have become increasingly popular for AI-powered development tools:

The Limitations of Single-Language Approaches

Python-Only Limitations

Python excels at AI and machine learning but faces challenges with:

- Protocol Handling: Less ergonomic handling of stream-based protocols carried over STDIO (standard input/output)

- IDE Integration: More complex integration with modern IDEs like VS Code

- Deployment Size: Larger deployment footprints due to ML dependencies

- Concurrency Model: Less natural for handling many concurrent connections

Node.js-Only Limitations

Node.js shines at protocol handling and IDE integration but struggles with:

- AI Capabilities: Limited native AI and ML libraries

- Vector Operations: Less efficient for mathematical operations

- ML Model Integration: Often requires external services or APIs

- Scientific Computing: Weaker ecosystem for data processing

The Hybrid Advantage

A hybrid architecture allows you to:

- Use each language for its strengths: Node.js for protocol and IDE integration, Python for AI and ML

- Optimize performance: Handle I/O in Node.js and computation in Python

- Leverage both ecosystems: Access the best libraries from both worlds

- Scale components independently: Allocate resources based on workload characteristics

- Distribute expertise: Allow team members to work in their preferred language

Hybrid Architecture Patterns

Several patterns have emerged for combining Python and Node.js in developer tools:

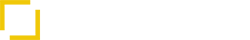

1. Frontend/Backend Split

The most common pattern separates responsibilities cleanly:

- Node.js Frontend: Handles IDE communication, protocol parsing, and user interaction

- Python Backend: Manages AI models, vector operations, and knowledge retrieval

This approach is particularly effective for VS Code extensions that need RAG capabilities.

2. Microservices Architecture

For larger systems, a microservices approach allows for more granular separation:

- Node.js Services: Protocol handling, IDE integration, request routing, caching

- Python Services: Model serving, embedding generation, vector search, document processing

This pattern works well for teams building comprehensive development platforms.

3. Embedded Python

For simpler tools, Node.js can directly spawn Python processes:

- Node.js Main Process: Controls the overall application flow

- Python Child Processes: Execute specific AI or ML tasks on demand

This approach minimizes complexity while still leveraging Python’s AI capabilities.
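As a rough illustration of the Python side of this pattern, the sketch below handles one JSON request per process invocation and dispatches it to a named task. The task name `count_lines`, the `TASKS` registry, and the `{"ok": ..., "result": ...}` response shape are all hypothetical choices made for this example, not part of any library.

```python
import json
import sys
from typing import Callable, Dict


def count_lines(payload: dict) -> dict:
    """Hypothetical task: count the lines in a source snippet."""
    return {"lines": len(payload.get("code", "").splitlines())}


# Hypothetical task registry; real tasks would call into ML libraries.
TASKS: Dict[str, Callable[[dict], dict]] = {"count_lines": count_lines}


def run_task(raw: str) -> dict:
    """Decode one JSON request and dispatch it to the named task."""
    try:
        request = json.loads(raw)
    except json.JSONDecodeError as exc:
        return {"ok": False, "error": f"invalid JSON: {exc.msg}"}
    if not isinstance(request, dict):
        return {"ok": False, "error": "request must be a JSON object"}
    handler = TASKS.get(request.get("task"))
    if handler is None:
        return {"ok": False, "error": f"unknown task: {request.get('task')!r}"}
    return {"ok": True, "result": handler(request.get("payload", {}))}


def main() -> None:
    """Read a single request from stdin, answer on stdout, then exit."""
    print(json.dumps(run_task(sys.stdin.read())))
```

A script built this way would call `main()` under the usual `if __name__ == "__main__":` guard. Because the process exits after one request, each call pays the interpreter and model start-up cost, which is why this pattern suits occasional tasks rather than per-keystroke completions.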

Implementation Strategies

Let’s explore practical implementation strategies for hybrid architectures:

Component Separation

The first step is determining which components belong in which language:

Node.js Components

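// Example: Node.js MCP server component
// Assumes Node.js 18+ (global fetch) and the express package
const express = require('express');

const PYTHON_BACKEND_URL = process.env.PYTHON_BACKEND_URL || 'http://localhost:5000';
const HEADER_END = '\r\n\r\n';

// Handle STDIO protocol (messages framed with LSP-style Content-Length headers)
class StdioHandler {
  constructor() {
    this.buffer = Buffer.alloc(0);
    process.stdin.on('data', this.handleStdinData.bind(this));
  }

  handleStdinData(chunk) {
    // Protocol handling logic: a chunk may hold a partial message or several messages
    this.buffer = Buffer.concat([this.buffer, chunk]);
    while (true) {
      const headerEnd = this.buffer.indexOf(HEADER_END);
      if (headerEnd === -1) return; // header not complete yet
      const header = this.buffer.subarray(0, headerEnd).toString('ascii');
      const match = /Content-Length:\s*(\d+)/i.exec(header);
      const bodyStart = headerEnd + HEADER_END.length;
      if (!match) {
        // Drop a header block without a length and keep scanning
        this.buffer = this.buffer.subarray(bodyStart);
        continue;
      }
      const bodyEnd = bodyStart + Number(match[1]);
      if (this.buffer.length < bodyEnd) return; // wait for the rest of the body
      const body = this.buffer.subarray(bodyStart, bodyEnd).toString('utf8');
      this.buffer = this.buffer.subarray(bodyEnd);
      // Once a complete message is received
      try {
        this.processMessage(JSON.parse(body));
      } catch (error) {
        this.sendErrorResponse(error);
      }
    }
  }

  processMessage(message) {
    // Forward to Python backend, then send the result back to the IDE
    this.forwardToPythonBackend(message)
      .then(response => this.sendResponse(response))
      .catch(error => this.sendErrorResponse(error));
  }

  forwardToPythonBackend(message) {
    // HTTP request to Python backend
    return fetch(`${PYTHON_BACKEND_URL}/process`, {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify(message)
    }).then(res => {
      if (!res.ok) throw new Error(`Python backend returned ${res.status}`);
      return res.json();
    });
  }

  sendResponse(response) {
    // Format and send response via STDIO
    const contentBytes = Buffer.from(JSON.stringify(response), 'utf8');
    const header = `Content-Length: ${contentBytes.length}\r\nContent-Type: application/json; charset=utf-8\r\n\r\n`;
    process.stdout.write(header);
    process.stdout.write(contentBytes);
  }

  sendErrorResponse(error) {
    // Illustrative error shape; adapt it to the protocol your IDE client expects
    this.sendResponse({ error: { message: error.message } });
  }
}

// Initialize handler
const handler = new StdioHandler();

// Also expose HTTP API for direct integration
const app = express();
app.use(express.json());

app.post('/process', (req, res) => {
  // Process request and forward to Python backend
  handler.forwardToPythonBackend(req.body)
    .then(result => res.json(result))
    .catch(error => res.status(502).json({ error: error.message }));
});

app.listen(3000, () => {
  // Log to stderr: stdout is reserved for protocol messages
  console.error('Node.js frontend listening on port 3000');
});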
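The `Content-Length` framing that `sendResponse` writes can also be exercised from Python, which is handy for testing the Node.js frontend or for a Python client that speaks the same framing. The following is a minimal sketch under that assumption; `encode_message` and `decode_messages` are names invented for this example.

```python
import json
from typing import List, Tuple

HEADER_END = b"\r\n\r\n"


def encode_message(payload: dict) -> bytes:
    """Frame a JSON payload with a Content-Length header (length in bytes)."""
    body = json.dumps(payload, ensure_ascii=False).encode("utf-8")
    header = f"Content-Length: {len(body)}\r\n\r\n".encode("ascii")
    return header + body


def decode_messages(buffer: bytes) -> Tuple[List[dict], bytes]:
    """Extract every complete message; return them plus the unconsumed bytes."""
    messages: List[dict] = []
    while True:
        header_end = buffer.find(HEADER_END)
        if header_end == -1:
            break  # header not fully received yet
        length = None
        for line in buffer[:header_end].decode("ascii").split("\r\n"):
            name, _, value = line.partition(":")
            if name.strip().lower() == "content-length":
                length = int(value.strip())
        if length is None:
            raise ValueError("missing Content-Length header")
        body_start = header_end + len(HEADER_END)
        if len(buffer) < body_start + length:
            break  # body not fully received yet
        messages.append(json.loads(buffer[body_start:body_start + length]))
        buffer = buffer[body_start + length:]
    return messages, buffer
```

The decoder returns leftover bytes so the caller can prepend them to the next chunk read from the stream. The length counts bytes rather than characters, which matters as soon as a payload contains non-ASCII text.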

Python Components

# Example: Python backend for AI processing
import os

from flask import Flask, request, jsonify
# Newer LangChain releases expose these two classes from langchain_community instead
from langchain.vectorstores import Chroma
from langchain.embeddings import OpenAIEmbeddings
from transformers import pipeline

app = Flask(__name__)

# Initialize AI components once at startup (loading the model is slow)
embeddings = OpenAIEmbeddings()  # reads OPENAI_API_KEY from the environment
vectorstore = Chroma(embedding_function=embeddings)
code_generator = pipeline("text-generation", model="bigcode/starcoder")


@app.route('/process', methods=['POST'])
def process_request():
    # silent=True yields None instead of raising on a missing or invalid JSON body
    data = request.get_json(silent=True) or {}

    # Extract context and query
    params = data.get('params', {})
    context = params.get('context', {})
    code = context.get('document', '')
    language = context.get('language', 'python')
    query = params.get('query', '')

    # Retrieve relevant documentation
    docs = vectorstore.similarity_search(code + " " + query, k=3)

    # Format retrieved documentation
    doc_context = "\n".join(doc.page_content for doc in docs)

    # Generate code with context
    prompt = f"Code: {code}\n\nDocumentation: {doc_context}\n\nTask: {query}\n\n"
    # max_new_tokens bounds only the generated text; max_length would also count the prompt
    result = code_generator(prompt, max_new_tokens=200, return_full_text=False)[0]['generated_text']

    # Return response
    return jsonify({
        "content": result,
        "language": language,
        "references": [{"source": doc.metadata.get("source", "Unknown")} for doc in docs]
    })


if __name__ == '__main__':
    # Flask binds to 127.0.0.1 by default. BACKEND_HOST is a variable name chosen for
    # this example; set it to 0.0.0.0 when the service runs in a container.
    app.run(host=os.environ.get('BACKEND_HOST', '127.0.0.1'), port=5000)
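Both services depend on the shape of the `/process` payload (`params.context.document`, `params.context.language`, `params.query`), so it can help to validate that shape in one place. The sketch below is one way to do so with the standard library only. `CompletionRequest` and `parse_request` are hypothetical names, and treating an empty query as an error is an assumption of this example rather than something the backend above enforces.

```python
from dataclasses import dataclass
from typing import Any, Mapping


@dataclass(frozen=True)
class CompletionRequest:
    code: str
    language: str
    query: str


def parse_request(data: Any) -> CompletionRequest:
    """Validate the JSON body sent by the Node.js frontend."""
    if not isinstance(data, Mapping):
        raise ValueError("request body must be a JSON object")
    params = data.get("params") or {}
    if not isinstance(params, Mapping):
        raise ValueError("params must be a JSON object")
    context = params.get("context") or {}
    if not isinstance(context, Mapping):
        raise ValueError("params.context must be a JSON object")
    query = params.get("query", "")
    # Assumption for this sketch: a request without a query is rejected.
    if not isinstance(query, str) or not query.strip():
        raise ValueError("params.query must be a non-empty string")
    return CompletionRequest(
        code=str(context.get("document", "")),
        language=str(context.get("language", "python")),
        query=query.strip(),
    )
```

A route handler could call `parse_request` on the decoded body and return HTTP 400 when it raises `ValueError`, so malformed requests fail before any model call is made.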

Inter-Process Communication

The communication between Node.js and Python components is critical for hybrid architectures. Several approaches are available:

1. HTTP/REST API

The simplest approach uses HTTP for communication:

// Node.js: Making HTTP requests to Python backend
// Requires Node.js 18+ for the global fetch
async function callPythonBackend(data) {
  try {
    const response = await fetch('http://localhost:5000/process', {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify(data)
    });

    if (!response.ok) {
      throw new Error(`HTTP error! status: ${response.status}`);
    }

    return await response.json();
  } catch (error) {
    console.error('Error calling Python backend:', error);
    throw error;
  }
}

2. Message Queues

For more robust communication, message queues can be used:

// Node.js: Using RabbitMQ for communication
const amqp = require('amqplib');
const { randomUUID } = require('crypto');

// Pending requests, keyed by correlation ID
const responseHandlers = new Map();

async function setupMessageQueue() {
  const connection = await amqp.connect('amqp://localhost');
  const channel = await connection.createChannel();

  // Set up request queue
  await channel.assertQueue('requests', { durable: true });

  // Set up response queue
  await channel.assertQueue('responses', { durable: true });

  // Listen for responses
  await channel.consume('responses', (msg) => {
    if (msg !== null) {
      const response = JSON.parse(msg.content.toString());
      // Resolve the promise registered for this correlation ID, if any
      const resolve = responseHandlers.get(msg.properties.correlationId);
      if (resolve) {
        responseHandlers.delete(msg.properties.correlationId);
        resolve(response);
      }
      channel.ack(msg);
    }
  });

  return channel;
}

function sendRequest(channel, request) {
  // Add correlation ID for tracking
  const correlationId = randomUUID();

  // Register the handler before sending so a fast reply cannot be missed
  const pending = new Promise((resolve) => {
    responseHandlers.set(correlationId, resolve);
  });

  // Send request; persistent messages on a durable queue survive a broker restart
  channel.sendToQueue('requests', Buffer.from(JSON.stringify(request)), {
    correlationId,
    persistent: true
  });

  // Resolves when the matching response is received
  return pending;
}

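# Python: Consuming from RabbitMQ
import json

import pika


def process_ai_request(request):
    # Placeholder: replace with the actual AI processing logic
    raise NotImplementedError


def process_request(ch, method, properties, body):
    request = json.loads(body)

    # Process the request
    result = process_ai_request(request)

    # Send response back, echoing the correlation ID so the caller can match it
    ch.basic_publish(
        exchange='',
        routing_key='responses',
        properties=pika.BasicProperties(
            correlation_id=properties.correlation_id,
            delivery_mode=2,  # persistent message
        ),
        body=json.dumps(result)
    )

    # Acknowledge only after the response is published, so a crash mid-request
    # leaves the message on the queue for redelivery
    ch.basic_ack(delivery_tag=method.delivery_tag)


def setup_message_queue():
    connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))
    channel = connection.channel()

    # Set up request queue
    channel.queue_declare(queue='requests', durable=True)

    # Set up response queue
    channel.queue_declare(queue='responses', durable=True)

    # Hand this worker one unacknowledged message at a time
    channel.basic_qos(prefetch_count=1)

    # Process requests (manual acknowledgement; auto_ack would drop in-flight work on a crash)
    channel.basic_consume(
        queue='requests',
        on_message_callback=process_request,
        auto_ack=False
    )

    print("Waiting for requests...")
    channel.start_consuming()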
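The correlation ID is what lets a caller match an asynchronous response to the request that produced it. The broker-independent sketch below shows that bookkeeping on its own, using standard-library futures. `PendingRequests` is a hypothetical helper written for this example and does not come from pika or any other library.

```python
import threading
import uuid
from concurrent.futures import Future
from typing import Dict, Tuple


class PendingRequests:
    """Track in-flight requests so responses can be matched by correlation ID."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._pending: Dict[str, Future] = {}

    def register(self) -> Tuple[str, Future]:
        """Create a correlation ID and the future that will receive the response."""
        correlation_id = str(uuid.uuid4())
        future: Future = Future()
        with self._lock:
            self._pending[correlation_id] = future
        return correlation_id, future

    def resolve(self, correlation_id: str, response: dict) -> bool:
        """Deliver a response; return False if no request is waiting for it."""
        with self._lock:
            future = self._pending.pop(correlation_id, None)
        if future is None:
            return False
        future.set_result(response)
        return True
```

In use, the sender would call `register()` before publishing and attach the returned ID to the message, and the consumer callback would call `resolve()` with the ID found in the response properties. Responses may arrive in any order, and an unknown ID (for example, a reply to a request that already timed out) is simply reported as unmatched.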

3. Child Process Communication

For simpler setups, direct child process communication works well:

// Node.js: Spawning Python process
const { spawn } = require('child_process');
const readline = require('readline');

// Current child process; replaced whenever the child is restarted
let pythonProcess = null;

function createPythonProcess() {
  // The interpreter may be named python3 on some systems
  pythonProcess = spawn('python', ['backend.py']);

  // Handle stdout one line at a time: raw 'data' chunks can split or merge JSON messages
  const lines = readline.createInterface({ input: pythonProcess.stdout });
  lines.on('line', (line) => {
    try {
      const response = JSON.parse(line);
      // Process response (processResponse is application-specific and not shown)
      processResponse(response);
    } catch (error) {
      console.error('Error parsing Python response:', error);
    }
  });

  // Handle stderr
  pythonProcess.stderr.on('data', (data) => {
    console.error(`Python error: ${data}`);
  });

  // Handle process exit
  pythonProcess.on('close', (code) => {
    console.error(`Python process exited with code ${code}`);

    // Restart if needed, after a short delay to avoid a tight crash loop
    if (code !== 0) {
      setTimeout(createPythonProcess, 1000);
    }
  });

  return pythonProcess;
}

function sendToPython(request) {
  // Add newline to ensure Python's readline gets the full message
  pythonProcess.stdin.write(JSON.stringify(request) + '\n');
}

# Python: Reading from stdin and writing to stdout
import sys
import json


def main():
    # Process input lines
    for line in sys.stdin:
        try:
            request = json.loads(line)

            # Process the request
            result = process_ai_request(request)

            # Send response
            sys.stdout.write(json.dumps(result) + '\n')
            sys.stdout.flush()
        except json.JSONDecodeError:
            sys.stderr.write("Error: Invalid JSON input\n")
            sys.stderr.flush()
        except Exception as e:
            sys.stderr.write(f"Error: {str(e)}\n")
            sys.stderr.flush()


if __name__ == "__main__":
    main()
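Newline-delimited JSON only works if the reader reassembles lines itself, because a pipe delivers arbitrary chunks: one read may contain half a message or several messages. The sketch below shows that reassembly in isolation. `LineBuffer` is a hypothetical helper, and it assumes the chunks have already been decoded to text.

```python
import json
from typing import List


class LineBuffer:
    """Reassemble newline-delimited JSON messages from arbitrary text chunks."""

    def __init__(self) -> None:
        self._partial = ""

    def feed(self, chunk: str) -> List[dict]:
        """Add a chunk and return every message completed by it."""
        self._partial += chunk
        # Everything before the last newline is complete; the tail is kept for later.
        *complete, self._partial = self._partial.split("\n")
        return [json.loads(line) for line in complete if line.strip()]
```

Each call to `feed` returns zero or more decoded messages, so the caller never sees a half-received line. This relies on every message being serialized on a single line, which `json.dumps` does by default.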

Deployment Considerations

Deploying hybrid architectures requires careful planning:

Container-Based Deployment

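Docker Compose is a good fit for hybrid deployments, because each language runtime gets its own image and the two services can be started together: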

# docker-compose.yml
version: '3'

services:
  nodejs-frontend:
    build:
      context: ./nodejs
      dockerfile: Dockerfile
    ports:
      - "3000:3000"
    environment:
      - PYTHON_BACKEND_URL=http://python-backend:5000
    depends_on:
      - python-backend

  python-backend:
    build:
      context: ./python
      dockerfile: Dockerfile
    ports:
      - "5000:5000"
    volumes:
      - ./data:/app/data

Node.js Dockerfile

# Node.js Dockerfile
# Node.js 18 or later is needed for the global fetch used by the frontend code
FROM node:20-slim
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
EXPOSE 3000
CMD ["node", "server.js"]

Python Dockerfile

# Python Dockerfile
FROM python:3.9-slim

WORKDIR /app

# Install system dependencies
RUN apt-get update && apt-get install -y \
    build-essential \
    && rm -rf /var/lib/apt/lists/*

# Install Python dependencies
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

COPY . .

EXPOSE 5000

CMD ["python", "app.py"]
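One detail is easy to miss when containerizing the backend: Flask's development server listens on 127.0.0.1 unless told otherwise, so a containerized backend must bind to all interfaces to be reachable from the Node.js service. A small settings loader, sketched below, keeps loopback as the local default and lets the container override it. The variable names `BACKEND_HOST` and `BACKEND_PORT` are assumptions made for this example.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class ServerConfig:
    host: str
    port: int


def load_config(env: Mapping[str, str] = os.environ) -> ServerConfig:
    """Read the bind address from the environment, defaulting to loopback."""
    port = int(env.get("BACKEND_PORT", "5000"))
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return ServerConfig(host=env.get("BACKEND_HOST", "127.0.0.1"), port=port)
```

With this in place, the backend could start with `app.run(host=config.host, port=config.port)`, and the Compose file would set `BACKEND_HOST=0.0.0.0` for the Python service only.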

Real-World Hybrid Architecture: Enterprise IDE Extension

To illustrate the power of hybrid architectures, let’s examine a real-world implementation powering an enterprise IDE extension.

Architecture Overview

This system provides AI-powered code assistance for a large enterprise with proprietary frameworks and strict security requirements:

- VS Code Extension (Node.js):
  - Integrates natively with VS Code
  - Handles user interactions and UI
  - Manages extension lifecycle

- MCP Server (Node.js):
  - Processes STDIO protocol
  - Routes requests to appropriate services
  - Handles streaming responses
  - Manages caching for performance

- AI Processing Service (Python):
  - Interfaces with vector database
  - Performs embedding generation
  - Executes RAG queries
  - Generates code completions

- Document Processing Pipeline (Python):
  - Scrapes internal documentation
  - Processes code repositories
  - Generates and updates embeddings
  - Maintains vector database

Communication Flow

1. Developer types in VS Code and triggers code completion

2. VS Code extension captures context and sends it to the MCP server via STDIO

3. MCP server processes the request and forwards it to the Python AI service via HTTP

4. Python service retrieves relevant documentation from the vector database

5. Python service generates a code completion and returns it to the MCP server

6. MCP server streams the response back to the VS Code extension

7. VS Code extension displays the completion to the developer
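The retrieval step in this flow boils down to ranking stored document vectors by their similarity to a query vector. The toy sketch below does that with plain Python lists and cosine similarity. It stands in for a real vector database, which would add approximate indexing and persistence, and the document names and two-dimensional vectors in the usage example are made up.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(
    query: Sequence[float], index: Dict[str, Sequence[float]], k: int = 3
) -> List[Tuple[str, float]]:
    """Return the k document IDs most similar to the query, best first."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]


# Usage with made-up embeddings: the two auth documents rank above the database one.
index = {"auth.md": [1.0, 0.0], "db.md": [0.0, 1.0], "auth_api.md": [0.9, 0.1]}
best = top_k([1.0, 0.0], index, k=2)
```

This brute-force scan is linear in the number of documents, which is fine for a demonstration but is the main reason production systems delegate the search to a dedicated vector store.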

Performance Metrics

This hybrid architecture delivers impressive performance:

- Average response time: 150ms for simple completions

- Context processing: Handles up to 100,000 tokens of context

- Concurrent requests: Supports 30+ developers simultaneously

- Documentation coverage: Indexes 10+ million lines of internal code and docs

Key Benefits Realized

The enterprise realized several benefits from this hybrid approach:

- Development Efficiency: 40% reduction in time spent implementing standard patterns

- Knowledge Transfer: New developers reached productivity 60% faster

- Code Quality: 35% reduction in bugs related to internal API usage

- Security Compliance: All processing remains on-premises, meeting security requirements

Why Choose When You Can Have Both?

The traditional debate between Python and Node.js for AI-powered development tools presents a false dichotomy. By adopting a hybrid architecture, you can:

- Optimize Every Component: Use each language for what it does best

- Scale Independently: Allocate resources based on workload characteristics

- Leverage Both Ecosystems: Access the best libraries from both worlds

- Future-Proof Your Architecture: Adapt to evolving requirements more easily

- Utilize Diverse Team Skills: Allow team members to work in their preferred language

Implementation Considerations

If you’re considering a hybrid architecture, keep these factors in mind:

When Hybrid Makes Sense

A hybrid approach is particularly valuable when:

- Your tool requires both sophisticated AI and responsive IDE integration

- You need to process large amounts of documentation or code

- Your team has expertise in both Python and Node.js

- Performance and scalability are critical requirements

- You want to future-proof against evolving AI capabilities

When to Keep It Simple

However, simpler approaches might be better when:

- Your tool has minimal AI requirements (e.g., calling external APIs only)

- You have a small team with expertise in only one language

- Deployment simplicity is a primary concern

- Your performance requirements are modest

Ready to Implement a Hybrid Architecture?

At Services Ground, we specialize in designing and implementing hybrid architectures for AI-powered development tools. Our team of experts can help you:

- Assess your specific requirements and constraints

- Design an optimal architecture for your needs

- Implement efficient inter-process communication

- Set up robust deployment pipelines

- Train your team on maintenance best practices

Our clients typically see a 30-50% improvement in development productivity after implementing our hybrid architecture solutions.
