Demystifying Artificial Intelligence

In 2025, Artificial Intelligence (AI) is no longer a futuristic concept confined to science fiction; it’s a tangible force reshaping industries, automating tasks, and enhancing our daily lives in ways we’re only just beginning to comprehend. For many, the world of AI can seem daunting, filled with complex jargon and rapidly evolving technologies. But fear not! This guide is designed to cut through the complexity, offering a clear, concise, and accessible introduction to the fundamentals of AI in 2025. Whether you’re a curious individual, a business owner looking to understand its potential, or a student embarking on a new field, understanding the basics of AI is no longer optional—it’s essential for navigating the modern world.

At its core, AI is about creating machines that can perform tasks that typically require human intelligence. This includes everything from understanding natural language and recognizing images to making decisions and solving complex problems. The journey of AI has been a long and fascinating one, evolving from early theoretical concepts to the powerful, practical applications we see today. This evolution has been driven by breakthroughs in computational power, access to vast amounts of data, and innovative algorithms, particularly in the realm of machine learning and deep learning.

This blog post will serve as your foundational stepping stone into the world of AI. We’ll explore what AI truly is, delve into its fascinating history, and break down the core terminology that will empower you to confidently engage with the AI landscape. We’ll also touch upon the ethical considerations that are paramount as AI becomes increasingly integrated into our society. By the end of this guide, you’ll have a solid understanding of the building blocks of AI and be well-equipped to explore its more advanced applications.

What is AI? Core Definitions and Types

To truly grasp the essence of AI, it’s important to start with a clear definition. Artificial Intelligence, in its broadest sense, refers to the simulation of human intelligence in machines that are programmed to think like humans and mimic their actions. This encompasses a wide range of capabilities, from learning and problem-solving to perception and language understanding.

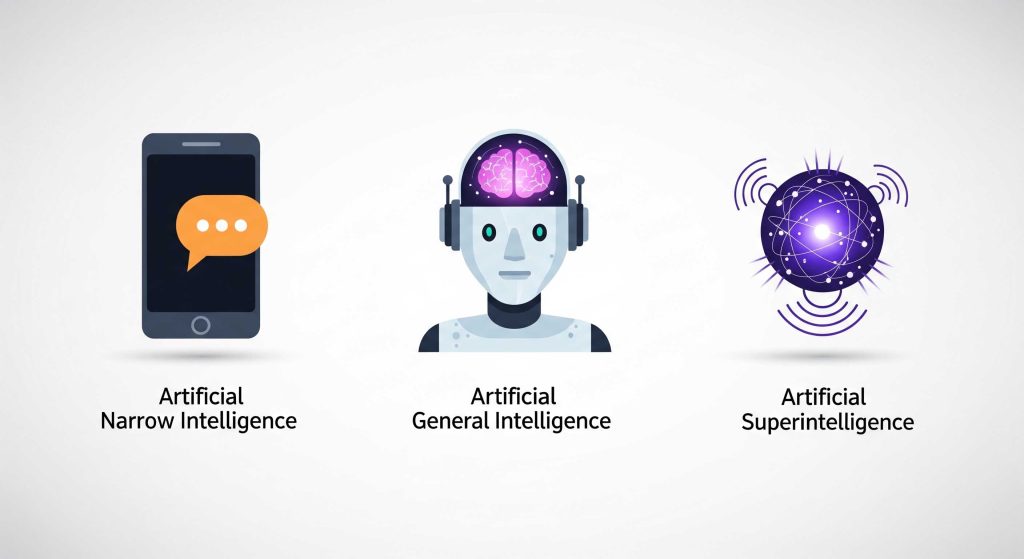

Within the vast umbrella of AI, there are several key distinctions and types:

1. Artificial Narrow Intelligence (ANI) / Weak AI

This is the only type of AI that currently exists. ANI is designed and trained for a particular task. Examples include virtual assistants like Siri or Alexa, recommendation engines on streaming platforms, image recognition software, and spam filters. These systems excel at their specific functions but cannot perform tasks outside their programmed scope. They don’t possess genuine intelligence or consciousness; they simply simulate intelligent behavior within a narrow domain.

2. Artificial General Intelligence (AGI) / Strong AI

AGI refers to AI that could understand, learn, and apply intelligence to any intellectual task that a human being can. This would involve the ability to solve unfamiliar problems, learn new skills, and reason across a wide range of domains. Some definitions also include consciousness or self-awareness, though whether these are required is itself debated. AGI is still largely theoretical and is a subject of ongoing research and debate within the AI community.

3. Artificial Superintelligence (ASI)

ASI is a hypothetical AI that would surpass human intelligence in virtually every field, including scientific creativity, general wisdom, and social skills. This level of AI would be capable of self-improvement, leading to an intelligence explosion where it rapidly advances beyond human comprehension. Like AGI, ASI remains a theoretical concept.

Machine Learning vs. Deep Learning: Understanding the Nuances

The terms Machine Learning (ML) and Deep Learning (DL) are often used interchangeably with AI, but they are actually subsets of AI.

- Machine Learning (ML): This is a subset of AI that enables systems to learn from data without being explicitly programmed. Instead of hard-coding rules, ML algorithms are fed large amounts of data, and they learn to identify patterns and make predictions or decisions based on those patterns. For example, an ML algorithm can learn to distinguish between images of cats and dogs by analyzing thousands of labeled images.

- Deep Learning (DL): This is a subfield of machine learning that uses artificial neural networks with multiple layers (hence “deep”) to learn from data. Inspired by the structure and function of the human brain, deep learning models can process vast amounts of data and uncover intricate patterns, making them particularly effective for tasks like image recognition, natural language processing, and speech recognition. The “deep” in deep learning refers to the number of layers in the neural network; more layers allow the network to learn more complex features.

In essence, AI is the overarching concept, ML is a method to achieve AI, and DL is a specific technique within ML that has driven many of the recent advancements in AI.
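To make the idea of learning from labeled data instead of hard-coded rules concrete, here is a minimal sketch of a nearest-centroid classifier in plain Python. It is only one simple ML technique, and it is not deep learning. The function names and the feature values (weight and height standing in for image data) are invented for illustration.

```python
# Toy illustration of "learning from data": a nearest-centroid classifier.
# All feature values below are made up for illustration only.

def train(examples):
    """Learn one average feature vector (centroid) per label."""
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def predict(centroids, features):
    """Return the label whose centroid is closest to the new example."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: squared_distance(centroids[label], features))


# Hypothetical labeled data: (weight in kg, height in cm) -> label
labeled_examples = [
    ((4.0, 25.0), "cat"),
    ((5.0, 23.0), "cat"),
    ((20.0, 55.0), "dog"),
    ((30.0, 60.0), "dog"),
]

centroids = train(labeled_examples)
print(predict(centroids, (4.5, 24.0)))   # cat
print(predict(centroids, (25.0, 58.0)))  # dog
```

No rule such as “dogs are heavier than cats” appears anywhere in the code. The distinction comes entirely from the labeled examples, which is the core idea behind ML.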

History of AI: A Journey Through Innovation

The history of AI is a fascinating narrative of human ingenuity, marked by periods of great optimism, followed by “AI winters” where funding and interest waned, only to be rekindled by new breakthroughs. Understanding this journey helps us appreciate the current state of AI and anticipate its future trajectory.

Early Beginnings (1940s-1950s)

The seeds of AI were sown in the mid-20th century with the advent of electronic computers. Pioneering thinkers like Alan Turing explored the concept of machine intelligence, famously proposing the Turing Test in 1950 as a criterion for intelligence. The term “artificial intelligence” itself was coined by John McCarthy in the 1955 proposal for the Dartmouth Workshop, which took place in the summer of 1956. This seminal event brought together leading researchers to discuss the possibility of creating machines that could simulate human intelligence.

The Golden Years & First AI Winter (1950s-1970s)

The initial period saw significant optimism and funding. Early AI programs demonstrated impressive capabilities in problem-solving and symbolic reasoning, such as Newell and Simon’s Logic Theorist (1956), and in natural language processing, such as Joseph Weizenbaum’s ELIZA (1966). However, the limitations of early computers and the complexity of real-world problems soon became apparent, leading to a period of reduced funding and interest in the 1970s known as the “first AI winter.”

Expert Systems & Second AI Winter (1980s-1990s)

The 1980s saw a resurgence of AI with the rise of “expert systems,” which mimicked the decision-making ability of a human expert in a specific domain. These systems found commercial success in areas like medical diagnosis and financial planning. However, their reliance on manually entered rules and their inability to learn from experience led to another period of disillusionment and the “second AI winter.”

The Rise of Machine Learning (2000s-Present)

The turn of the millennium marked a pivotal shift. Instead of trying to hard-code intelligence, researchers began focusing on machine learning, where algorithms learn from data. The availability of vast datasets and increased computational power, particularly with the rise of GPUs, fueled this revolution. Key milestones include:

- 2006: Geoffrey Hinton’s work on deep belief networks reignited interest in neural networks.

- 2012: AlexNet, a deep convolutional neural network, achieved a breakthrough in image recognition, significantly outperforming previous methods.

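- 2016: AlphaGo, a Google DeepMind AI, defeated Lee Sedol, one of the world’s top Go players, in a five-game match. Go has a far larger search space than chess, and the win showcased the power of combining deep neural networks with reinforcement learning and tree search.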

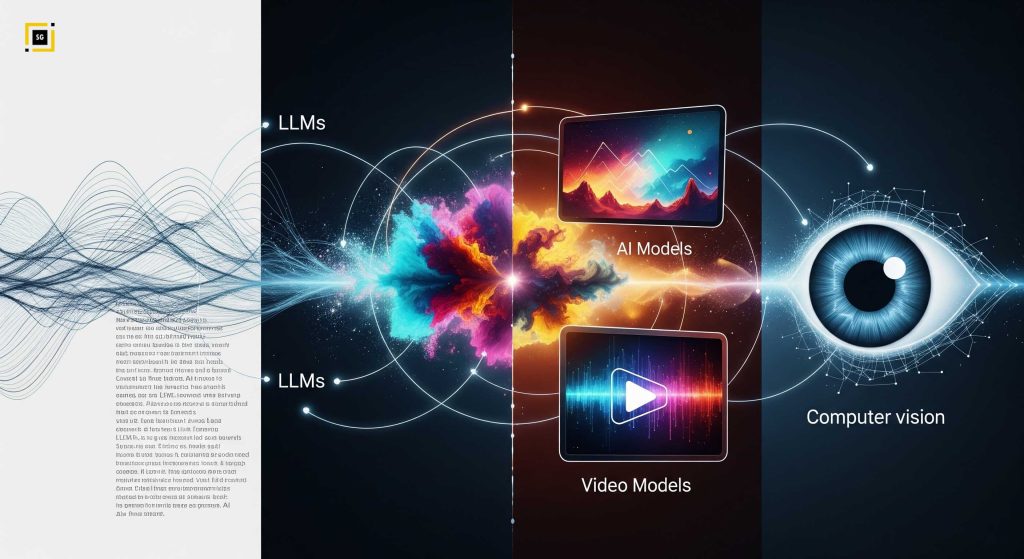

The AI Boom of the 2020s

Today, we are in an unprecedented era of AI advancement, largely driven by the development of Large Language Models (LLMs) and generative AI. Models like OpenAI’s GPT series, Google’s Gemini, and Anthropic’s Claude have demonstrated remarkable capabilities in understanding and generating human-like text, images, and even video. This current boom is characterized by:

- Scaling Laws: The empirical finding that increasing model size, training data, and compute leads to predictable performance improvements.

- Instruction Tuning & RLHF: Techniques that make models better at following instructions and aligning with human preferences.

- Context Window Expansion: Models can now process and understand much larger amounts of information at once.

- Multimodal Capabilities & Tool Use: AI models are becoming increasingly versatile, able to understand and generate across different data types and interact with external tools.
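The “predictable” part of the scaling-laws bullet above can be illustrated with a short sketch. Published scaling-law studies have reported roughly power-law relationships between model size and loss. The function name and both constants below are hypothetical and chosen only to show the shape of such a curve, not to match any real model.

```python
# Illustrative power-law curve: loss = coefficient * n_params ** (-exponent).
# COEFFICIENT and EXPONENT are hypothetical values, not measured results.

def predicted_loss(n_params, coefficient, exponent):
    """Return the loss predicted by a simple power law in parameter count."""
    return coefficient * n_params ** (-exponent)


COEFFICIENT = 10.0  # hypothetical
EXPONENT = 0.1      # hypothetical

for n_params in (1e6, 1e7, 1e8, 1e9):
    loss = predicted_loss(n_params, COEFFICIENT, EXPONENT)
    print(f"{n_params:.0e} parameters -> predicted loss {loss:.3f}")
```

Under a curve of this form, every tenfold increase in parameters multiplies the predicted loss by the same factor, which is what makes the effect of scaling forecastable. Real analyses also account for data and compute, and often include a floor the loss cannot go below. This sketch omits both.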

This rich history underscores that AI is not a static field but a continuously evolving one, driven by relentless innovation and a persistent quest to augment human capabilities.

Core Terminology: Your AI Glossary

Navigating the AI landscape requires a grasp of its fundamental vocabulary. Here are some essential terms you’ll encounter:

- Model: In AI, a model is a program or algorithm that has been trained on a dataset to recognize patterns, make predictions, or generate content. It’s the result of the learning process.

- Training: The process of feeding data to an AI model so it can learn patterns and relationships. During training, the model adjusts its internal parameters to minimize errors in its predictions or outputs.

- Inference: The process of using a trained AI model to make predictions or generate outputs on new, unseen data. For example, once an image recognition model is trained, using it to identify objects in a new photo is an act of inference.

- Parameters: These are the internal variables or weights within an AI model that are learned during the training process. The number of parameters can range from millions to trillions, and generally, more parameters allow a model to learn more complex patterns.

- Fine-tuning: A process where a pre-trained AI model (one that has already learned general patterns from a large dataset) is further trained on a smaller, more specific dataset. This allows the model to adapt its knowledge to a particular task or domain, improving its performance for that specific use case.

- Context Window: For language models, this refers to the amount of text (measured in tokens) that the model can process and consider at any given time. A larger context window means the model can understand and generate longer, more coherent pieces of text, maintaining consistency across extended conversations or documents.

- Tokens: The basic units of text that AI models process. A token can be a word, part of a word, or even a single character, depending on the model. For English, a token is roughly equivalent to 3/4 of a word.

- Prompt Engineering: The art and science of crafting effective inputs (prompts) for AI models to guide their behavior and elicit desired outputs. This involves understanding how models interpret instructions and structuring prompts to achieve specific results.

- Generative AI: A type of AI that can create new content, such as text, images, audio, or video, that is similar to data it was trained on but is not an exact copy. This is in contrast to discriminative AI, which focuses on classification or prediction.

- Multimodal AI: AI systems that can process and generate content across multiple types of data, such as text, images, audio, and sometimes video. For example, a multimodal model might take an image and a text prompt to generate a descriptive caption.

- AI Agent: An AI system that can act autonomously to achieve a specific goal. Unlike simple models that respond to prompts, agents can plan, use tools, interact with environments, and persist in their efforts until a task is completed.
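To make the Training, Inference, and Parameters entries above concrete, here is a minimal sketch that fits a straight line to toy data using gradient descent. The data, learning rate, step count, and function names are all illustrative assumptions. Real models use the same basic loop with vastly more parameters.

```python
# Training: adjust parameters (w, b) to reduce prediction error on known data.
# Inference: use the learned parameters on an input the model has not seen.

def train(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # the model's two parameters, before any learning
    n = len(xs)
    for _ in range(steps):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Step each parameter in the direction that lowers the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def infer(params, x):
    """Apply the trained parameters to a new input."""
    w, b = params
    return w * x + b


# Toy training data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

params = train(xs, ys)
print(params)               # approximately (2.0, 1.0)
print(infer(params, 10.0))  # approximately 21.0
```

The pair `(w, b)` plays the role of the model’s parameters. A large language model follows the same pattern with billions of such values.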

Understanding these terms will provide a solid foundation for comprehending the discussions around AI models and their applications.
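The relationship between tokens and the context window comes down to simple arithmetic. The sketch below uses the rule of thumb that one token is roughly 3/4 of an English word to estimate whether a piece of text fits within a given window. The function names are hypothetical. Real tokenizers differ by model, so treat the result as a rough estimate only.

```python
# Rough token estimate based on the "1 token is about 3/4 of a word" rule of thumb.
WORDS_PER_TOKEN = 0.75  # approximation for English; actual tokenizers vary


def estimate_tokens(text):
    """Estimate the token count from a whitespace-separated word count."""
    word_count = len(text.split())
    return round(word_count / WORDS_PER_TOKEN)


def fits_in_context(text, context_window_tokens):
    """Check whether the estimated token count fits within the context window."""
    return estimate_tokens(text) <= context_window_tokens


sample = "Artificial intelligence is reshaping industries and daily life"
print(estimate_tokens(sample))                             # 11 (8 words / 0.75)
print(fits_in_context(sample, context_window_tokens=8))    # False
print(fits_in_context(sample, context_window_tokens=100))  # True
```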

Ethical Considerations: Navigating the Responsible Development of AI

As AI becomes increasingly powerful and integrated into society, it brings with it a host of ethical considerations that demand careful attention. Responsible AI development is not just a technical challenge but a societal imperative. Here are some of the key ethical concerns:

1. Bias and Fairness

AI models learn from the data they are trained on. If this data reflects existing societal biases (e.g., racial, gender, socioeconomic), the AI model can perpetuate and even amplify these biases in its outputs and decisions. This can lead to unfair or discriminatory outcomes in areas like hiring, loan applications, criminal justice, and healthcare. Ensuring fairness requires diverse and representative training data, careful model design, and continuous monitoring for biased behavior.
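Monitoring for biased behavior can start with a very simple measurement. The sketch below compares approval rates across groups, a check often called demographic parity. The group labels, decisions, and function names are hypothetical. This is only one of several fairness metrics, and a small gap on this measure does not by itself show that a system is fair.

```python
# Compare how often a model approves applicants from each group.
# The decisions below are invented for illustration only.

def selection_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals, approvals = {}, {}
    for group, approved in decisions:
        totals[group] = totals.get(group, 0) + 1
        approvals[group] = approvals.get(group, 0) + (1 if approved else 0)
    return {group: approvals[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions):
    """Return the difference between the highest and lowest approval rates."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions for two groups of applicants
decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

print(selection_rates(decisions))         # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(decisions))  # 0.5
```

A gap of 0.5 here means group A is approved three times as often as group B. A gap that large would normally prompt a closer look at the training data and the model.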

2. Transparency and Explainability (XAI)

Many advanced AI models, particularly deep learning networks, are often referred to as “black boxes” because it can be difficult to understand how they arrive at a particular decision or prediction. This lack of transparency can be problematic, especially in critical applications where accountability is essential. Explainable AI (XAI) is a field of research dedicated to developing methods that make AI systems more understandable and interpretable, allowing humans to comprehend their reasoning and trust their outputs.

3. Privacy and Data Security

AI models often require vast amounts of data for training, much of which can be personal or sensitive. This raises significant privacy concerns regarding how data is collected, stored, used, and protected. Ensuring robust data security measures and adhering to privacy regulations (like GDPR or CCPA) are crucial to prevent misuse and maintain public trust. There’s also the risk of models inadvertently memorizing and revealing sensitive information from their training data.

4. Safety and Control

As AI systems become more autonomous and capable, ensuring their safety and maintaining human control becomes paramount. This involves designing AI systems that operate within defined boundaries, can be reliably shut down or overridden, and do not cause unintended harm. For highly autonomous systems, the challenge of ensuring alignment with human values and intentions is a complex and ongoing area of research.

5. Job Displacement and Economic Impact

AI’s ability to automate tasks, including those requiring cognitive skills, raises concerns about potential job displacement across various sectors. While AI is also expected to create new jobs and enhance productivity, the transition period and the need for workforce retraining are significant societal challenges that require proactive planning and policy development.

6. Misinformation and Malicious Use

Generative AI models, while powerful tools for creativity and productivity, can also be misused to create highly realistic but fabricated content (deepfakes, fake news, propaganda). This poses a serious threat to information integrity and can be used for malicious purposes. Developing robust detection mechanisms and promoting digital literacy are critical countermeasures.

7. Intellectual Property and Copyright

When AI models generate new content (text, images, music), questions arise regarding ownership and copyright. Who owns the content created by an AI? What if the AI’s output is too similar to existing copyrighted material it was trained on? These are complex legal and ethical questions that are still being debated and will likely require new legal frameworks.

Addressing these ethical considerations is not an afterthought but an integral part of developing and deploying AI responsibly. It requires a multidisciplinary approach involving AI researchers, ethicists, policymakers, and the public to ensure that AI serves humanity’s best interests.

Conclusion: Your Journey into AI Begins Now

This guide has provided you with a foundational understanding of Artificial Intelligence in 2025, covering its core definitions, historical evolution, essential terminology, and critical ethical considerations. You now have the basic knowledge to confidently engage with the broader AI landscape.

The world of AI is dynamic and full of possibilities. As you continue your journey, remember that continuous learning is key. The concepts discussed here are the building blocks upon which more advanced AI applications and innovations are constructed. Embrace the curiosity, explore further, and be part of shaping a future where AI empowers and benefits all.

Stay tuned for more in-depth explorations of specific AI model types, their practical applications, and advanced implementation strategies!