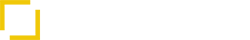

Introduction — The Cognitive Core of Agentic Intelligence

As artificial intelligence moves beyond prediction and generation, the next evolution lies in reasoning: the ability for systems to think, evaluate, and decide like humans.

This shift is embodied in agentic reasoning, the foundation of autonomous cognition where AI agents interpret context, plan actions, execute tasks, and self-correct through reflection.

These structured thinking frameworks, known as agentic reasoning patterns, define how modern Agentic AI systems make sense of goals, uncertainty, and feedback.

In this guide, we’ll explore the four major reasoning patterns shaping this era:

ReAct, Reflexion, Plan-and-Execute, and Tree of Thoughts (ToT).

You’ll learn how each framework works, where to apply it, and how combining them leads to adaptive, trustworthy AI autonomy.

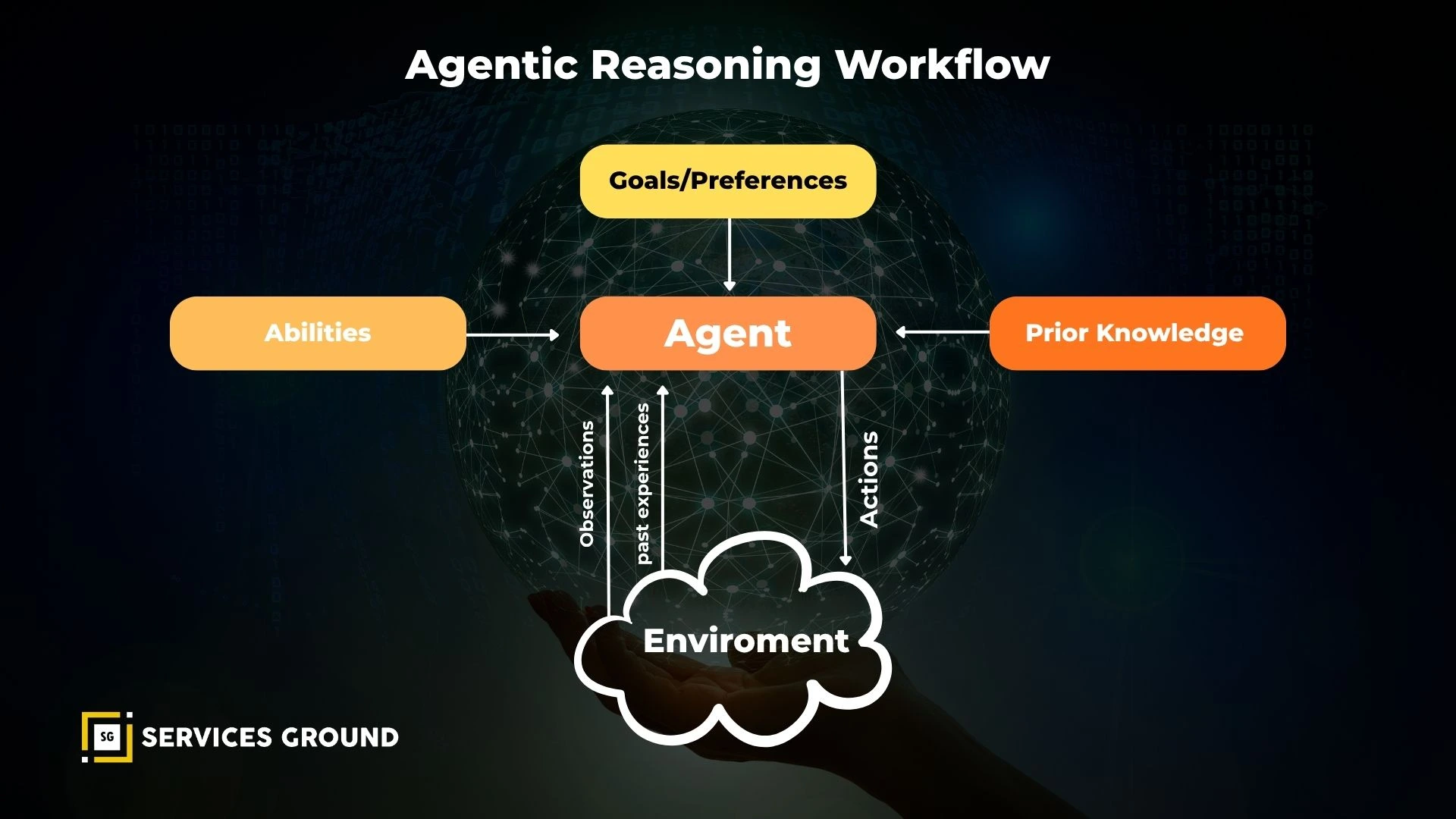

What Are Agentic Reasoning Patterns?

Agentic reasoning patterns are reusable cognitive frameworks that govern how an AI agent analyzes, decides, and adapts to achieve its goals.

Just as humans follow thought strategies such as brainstorming, reflection, or planning, agentic reasoning frameworks define how digital agents think within the Perception → Reasoning → Action → Reflection (PRAR) loop.

Each pattern introduces a unique “thinking rhythm.”

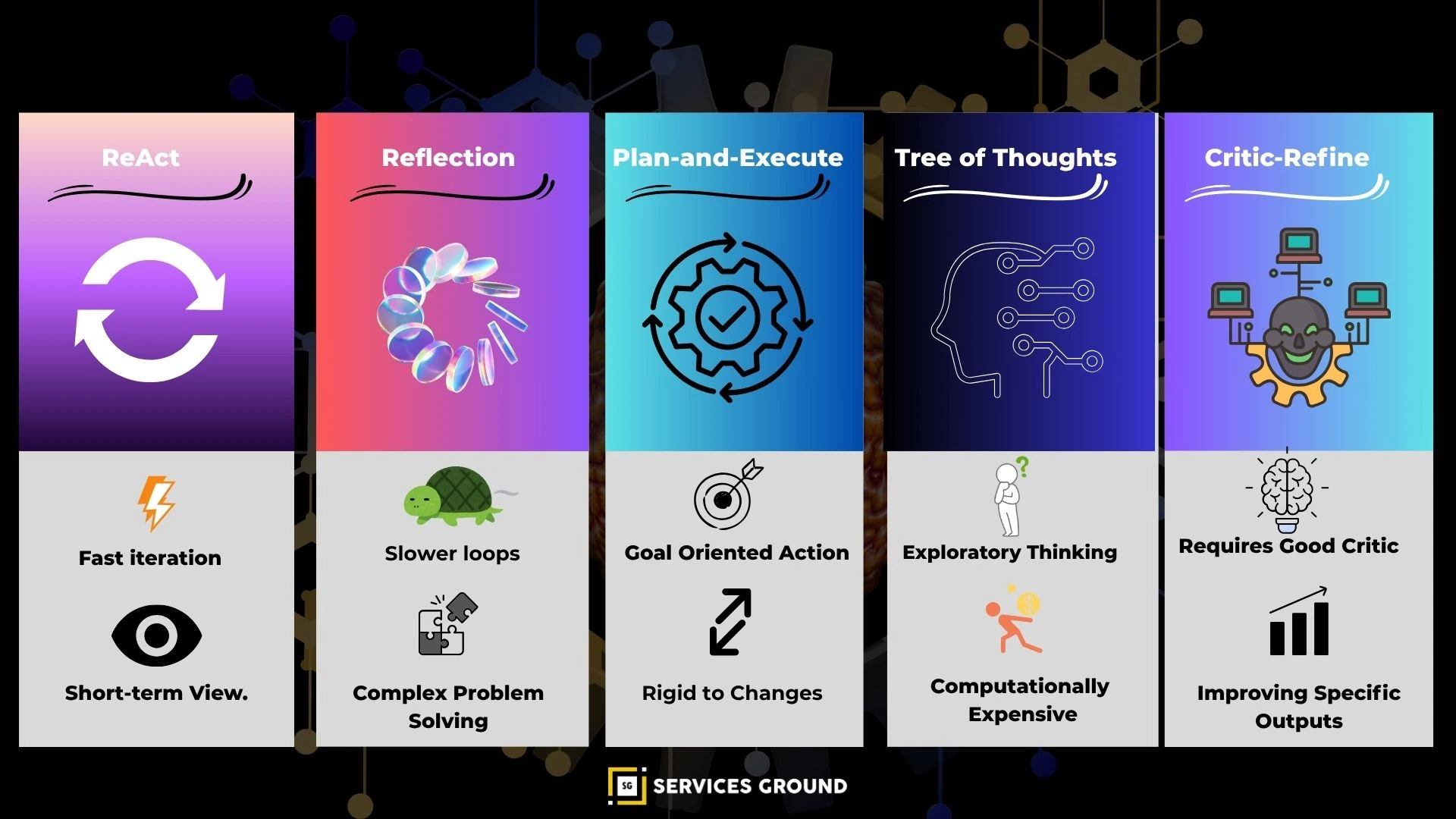

Some focus on fast iterative reasoning (like ReAct), others on introspection (Reflexion), structured strategy (Plan-and-Execute), or parallel exploration (Tree of Thoughts).

Why They Matter

- They enable goal-directed autonomy, not mere task execution.

- They make reasoning transparent and auditable.

- They allow hybrid orchestration, where different agents specialize in different cognitive loops.

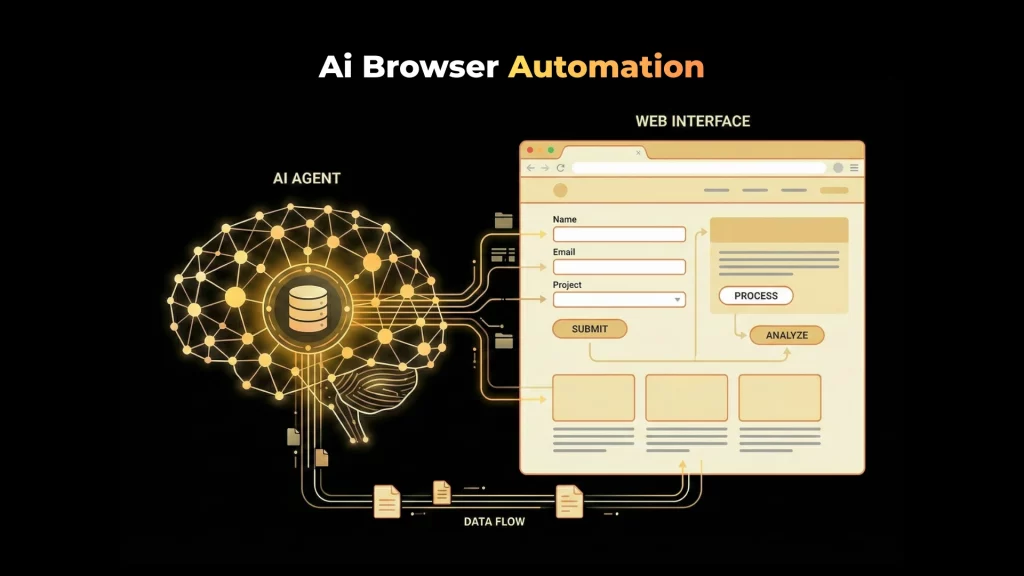

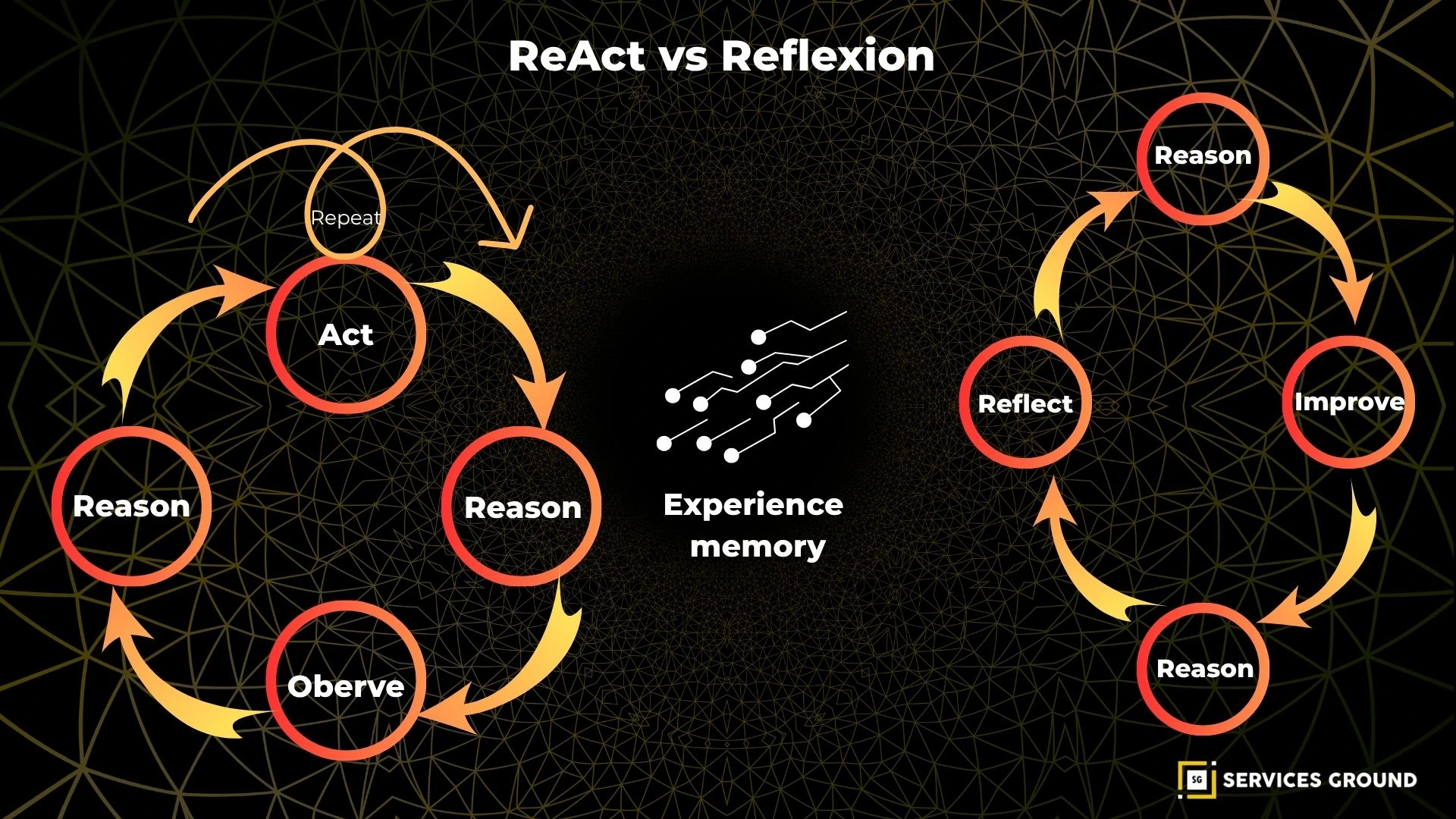

The ReAct Pattern — Thinking Through Action

Overview

ReAct (Reason + Act) is one of the earliest and most practical agentic reasoning patterns.

It alternates between two phases, reasoning and acting, allowing agents to reason about their environment while interacting with it dynamically.

Instead of producing one long plan, a ReAct agent decides incrementally:

observe → reason → act → observe → reason again.

How It Works (Simplified Loop)

- Observe environment → retrieve input

- Reason about the next step → propose action

- Execute action → get result

- Reflect briefly → loop back to reasoning

This structure creates a continuous thought-action loop, perfect for interactive or tool-using agents.
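The loop above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: `toy_policy` stands in for the LLM call, and the `search` and `open` tools are hypothetical placeholders that only return strings.

```python
from typing import Callable, Dict, List, Optional, Tuple

Step = Tuple[str, str, str]  # (thought, action, observation)

# Hypothetical tools; a real agent would call a search API, a browser, etc.
TOOLS: Dict[str, Callable[[str], str]] = {
    "search": lambda query: f"results for {query}",
    "open": lambda target: f"abstract of {target}",
}

def toy_policy(goal: str, history: List[Step]) -> Tuple[str, str, str]:
    """Stand-in for the LLM: returns (thought, action, argument)."""
    if not history:
        return ("I need candidate sources.", "search", goal)
    if len(history) == 1:
        return ("The first result looks credible.", "open", "first result")
    # Enough information gathered: finish with the last observation.
    return ("I have what I need.", "finish", history[-1][2])

def react_loop(
    goal: str,
    policy: Callable[[str, List[Step]], Tuple[str, str, str]] = toy_policy,
    max_steps: int = 5,
) -> Tuple[Optional[str], List[Step]]:
    history: List[Step] = []
    for _ in range(max_steps):
        thought, action, argument = policy(goal, history)  # reason
        if action == "finish":
            return argument, history
        observation = TOOLS[action](argument)              # act
        history.append((thought, action, observation))     # observe
    return None, history  # step budget exhausted without finishing

answer, trace = react_loop("GraphRAG paper")
```

The `max_steps` budget is a design choice worth keeping in any real version: because the agent decides one step at a time, nothing else guarantees that the loop terminates.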

Example

Suppose a ReAct agent is tasked with finding the most recent paper on “GraphRAG.”

- It searches online → reasons which source looks credible → opens the paper → extracts abstract → reasons again about next action (download, summarize, or cite).

- The reasoning is embedded inside the workflow, not separated from it.

Strengths

- Real-time adaptation.

- Excellent for tool use, data retrieval, and live tasks.

- Integrates smoothly with frameworks like LangChain or Semantic Kernel.

Limitations

- Limited introspection; errors may propagate.

- Requires strong short-term memory for context tracking.

The Reflexion Pattern — Self-Improving Reasoning

Concept

Reflexion extends ReAct by adding self-evaluation and memory-based refinement.

After each reasoning-action cycle, the agent critiques its output and stores insights for future reference, forming a genuine learning loop.

It answers a key challenge in AI reasoning:

“How can an agent know when it made a mistake?”

How It Works

- Generate answer or perform task

- Critique outcome using feedback or scoring model

- Store insight or correction in memory

- Retry with improved reasoning

The Reflexion agent thus grows from experience, developing a form of metacognition (thinking about its own thinking).
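Here is a rough sketch of that generate, critique, store, retry cycle. The function names are our own, and `toy_attempt` and `toy_critique` are hypothetical stand-ins for the model and the feedback signal (a unit test run, a scoring model, and so on).

```python
from typing import Callable, List, Optional, Tuple

def reflexion_loop(
    attempt: Callable[[List[str]], str],
    critique: Callable[[str], Optional[str]],
    max_trials: int = 3,
) -> Tuple[Optional[str], List[str]]:
    memory: List[str] = []             # lessons carried across trials
    for _ in range(max_trials):
        output = attempt(memory)       # generate, conditioned on past lessons
        lesson = critique(output)      # None means the output passed
        if lesson is None:
            return output, memory
        memory.append(lesson)          # store the insight, then retry
    return None, memory                # no passing output within the budget

# Toy stand-ins (assumptions for illustration only).
def toy_attempt(memory: List[str]) -> str:
    if "re-check the arithmetic" in memory:
        return "2 + 2 = 4"
    return "2 + 2 = 5"

def toy_critique(output: str) -> Optional[str]:
    return None if output.endswith("= 4") else "re-check the arithmetic"

result, lessons = reflexion_loop(toy_attempt, toy_critique)
```

Note that the only thing that changes between trials is `memory`: the second attempt succeeds because it can read the lesson written after the first one.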

Example

In a coding assistant, Reflexion can detect that its output failed unit tests, then self-revise its reasoning path and attempt a corrected solution without human intervention.

Strengths

- Builds self-awareness into reasoning

- Enhances reliability and factual accuracy

- Reduces hallucination risk

Limitations

- Higher computational cost (extra reasoning passes)

- Needs structured memory (e.g., vector store or memory log)

Plan-and-Execute — Structured Sequential Reasoning

Overview

Plan-and-Execute is the architectural opposite of ReAct.

Instead of reasoning through every step, the agent plans the entire strategy first, then executes sequentially.

This approach mirrors human project management: define goals → outline subtasks → perform each one in order.

How It Works

- Create global plan with milestones

- Break into sub-goals or subtasks

- Execute each task sequentially

- Evaluate results at completion
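The four steps above might look like this in code. It is a simplified sketch under stated assumptions: `make_plan` is a hypothetical planner that returns a fixed list (a real agent would ask an LLM for it), and `execute_step` only records that a step ran.

```python
from typing import Dict, List, Tuple

def make_plan(goal: str) -> List[str]:
    """Hypothetical planner: returns an ordered list of subtasks."""
    return [
        f"identify methods for: {goal}",
        "fetch papers for each method",
        "summarize pros and cons",
        "write structured brief",
    ]

def execute_step(step: str, results: Dict[str, str]) -> str:
    """Hypothetical executor: earlier results are available as context."""
    return f"done ({len(results) + 1}): {step}"

def plan_and_execute(goal: str) -> Tuple[List[str], Dict[str, str], bool]:
    plan = make_plan(goal)                   # plan everything up front
    results: Dict[str, str] = {}
    for step in plan:                        # execute strictly in order
        results[step] = execute_step(step, results)
    # Evaluate only once, after the whole plan has run.
    success = all(r.startswith("done") for r in results.values())
    return plan, results, success

plan, results, success = plan_and_execute("RAG comparison")
```

The plan is never revised inside the loop, which is exactly what makes this pattern easy to audit and hard to adapt.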

Example

An autonomous research agent using Plan-and-Execute might:

- Plan → Identify five RAG methods to compare

- Execute → Fetch academic papers for each

- Summarize → Generate pros/cons matrix

- Report → Produce structured brief

Strengths

- Ideal for long-horizon tasks or multi-stage objectives

- Easier to monitor, audit, and reproduce

- Compatible with LangGraph or CrewAI orchestration

Limitations

- Inflexible when new data appears mid-execution

- Less adaptive than ReAct or Reflexion

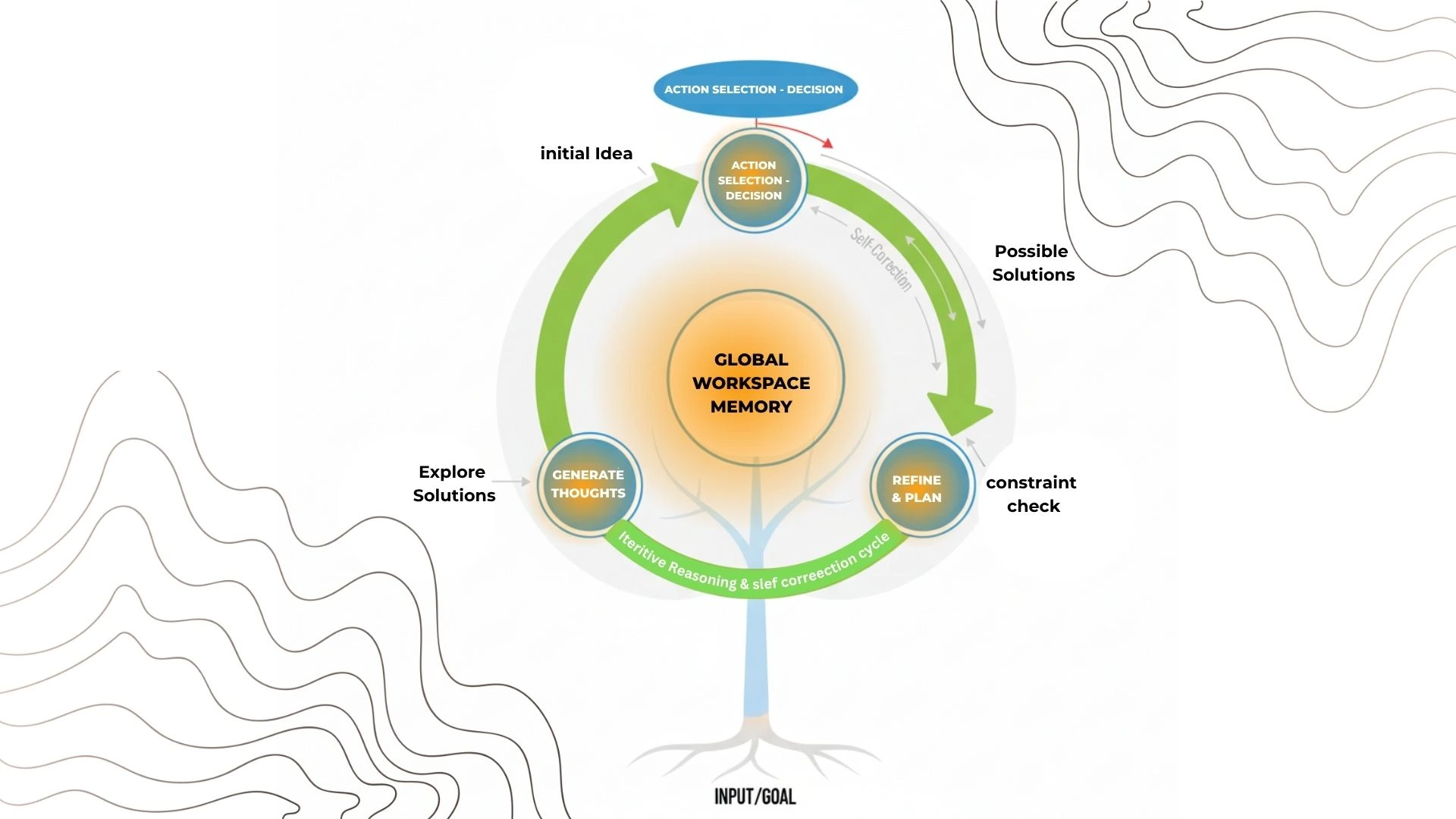

Tree of Thoughts (ToT) — Parallel Exploration of Ideas

Concept

Tree of Thoughts (ToT) is a reasoning framework that allows agents to explore multiple ideas or solution paths simultaneously, evaluate them, and converge on the best option.

Think of it as an AI “brainstorming tree” where each branch represents a possible thought or decision path.

How It Works

- Generate multiple candidate thoughts

- Score each branch using evaluation criteria

- Expand promising paths deeper

- Prune unproductive branches

- Select optimal reasoning path
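One simple way to realize these steps is a beam search over candidate thoughts. The sketch below is an assumption-laden toy, not the canonical ToT algorithm: it expands branches one after another rather than in parallel, and the "thoughts" are arithmetic moves (`+3` or `*2`) toward a target number, scored by distance to that target.

```python
from typing import Callable, List, Tuple

Node = Tuple[int, Tuple[str, ...]]  # (current value, operations taken so far)

def expand(node: Node) -> List[Node]:
    """Generate candidate next thoughts: add 3 or double."""
    value, ops = node
    return [(value + 3, ops + ("+3",)), (value * 2, ops + ("*2",))]

def make_score(target: int) -> Callable[[Node], int]:
    """Higher is better: negative distance to the target."""
    return lambda node: -abs(target - node[0])

def tree_of_thoughts(
    root: Node,
    expand: Callable[[Node], List[Node]],
    score: Callable[[Node], int],
    beam_width: int = 2,
    depth: int = 3,
) -> Node:
    frontier = [root]
    for _ in range(depth):
        # Expand every surviving branch one level deeper.
        candidates = [child for node in frontier for child in expand(node)]
        # Score the branches and prune all but the best few.
        candidates.sort(key=score, reverse=True)
        frontier = candidates[:beam_width]
    return max(frontier, key=score)  # select the best remaining path

best = tree_of_thoughts((1, ()), expand, make_score(14))
# best == (14, ("+3", "+3", "*2"))
```

In this toy run the branch that looks best after two steps (value 8) does not lead to the answer; the solution comes through the runner-up (value 7). Keeping more than one branch alive is the whole point of the pattern.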

Example

A ToT agent solving a math word problem might generate three possible reasoning paths, test them partially, and then continue only with the most logical route.

Strengths

- Enables divergent and creative reasoning

- Increases problem-solving accuracy

- Works well with hierarchical or ensemble systems

Limitations

- Expensive in computation

- Requires good scoring/evaluation functions

Self-Ask and Critic-Refine — Recursive Improvement Loops

The Self-Ask Technique

This pattern involves the agent asking itself clarifying sub-questions before answering, similar to Socratic reasoning.

Example:

“What is the user asking?”

“What information do I need to answer this?”

“How do I verify it?”

This recursive questioning leads to more logical and transparent answers.

The Critic-Refine Loop

After producing an output, the Critic module reviews it and either approves or requests refinement.

This loop underpins self-correcting frameworks such as Reflexion.

Together, Self-Ask and Critic-Refine form the foundation for meta-reasoning: the ability of agents to assess their own reasoning quality.
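A Critic-Refine loop can be sketched as follows. The critic and refiner here are hypothetical rule-based stand-ins (they only check capitalization and a trailing period); in practice both would usually be model calls.

```python
from typing import Callable, List, Tuple

def critic_refine(
    draft: str,
    critic: Callable[[str], List[str]],
    refine: Callable[[str, List[str]], str],
    max_rounds: int = 3,
) -> Tuple[str, int]:
    """Return the final draft and the number of refinement rounds used."""
    for round_number in range(max_rounds):
        issues = critic(draft)
        if not issues:                  # critic approves the draft
            return draft, round_number
        draft = refine(draft, issues)   # critic requested changes
    return draft, max_rounds            # budget spent; return best effort

# Toy critic and refiner (assumptions for illustration only).
def toy_critic(text: str) -> List[str]:
    issues = []
    if not text[:1].isupper():
        issues.append("capitalize")
    if not text.endswith("."):
        issues.append("add period")
    return issues

def toy_refine(text: str, issues: List[str]) -> str:
    if "capitalize" in issues:
        text = text[:1].upper() + text[1:]
    if "add period" in issues:
        text += "."
    return text

final, rounds = critic_refine(
    "agents can review their own output", toy_critic, toy_refine
)
```

Unlike the Reflexion loop, this version edits the same draft in place and keeps no memory between tasks.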

Comparing the Core Agentic Reasoning Patterns

| Framework | Structure | Strength | Limitation | Best Use |
|---|---|---|---|---|
| ReAct | Iterative think-act loop | Fast, tool-friendly, adaptive | Lacks reflection | Dynamic environments |
| Reflexion | Think-act-reflect loop | Self-improving accuracy | Higher compute cost | Long-term reasoning agents |
| Plan-and-Execute | Sequential plan-run structure | Scalable, auditable | Less flexible | Long workflows |
| Tree of Thoughts (ToT) | Parallel multi-path exploration | Creative, high-accuracy | Expensive | Problem solving, generation |
| Self-Ask / Critic-Refine | Recursive feedback | Interpretability | Slow iteration | Review, quality assurance |

These frameworks are not mutually exclusive.

Modern agentic reasoning systems often combine multiple patterns, e.g., Reflexion + ReAct for adaptive reasoning, or Plan-Execute + ToT for long-term creative problem solving.

Hybrid and Emerging Reasoning Frameworks

The next generation of cognitive frameworks blends the best of all patterns:

- ReAct + Reflexion → ReAction models (action + reflection cycles)

- ToT + Plan-Execute → Hierarchical Tree-Planners

- GraphRAG → Uses retrieval graphs to connect reasoning nodes dynamically

Frameworks like LangGraph, CrewAI, and Semantic Kernel already operationalize these ideas, turning reasoning into transparent, traceable workflows.

Challenges and Future Trends in Agentic Reasoning

Despite rapid progress, several open challenges remain:

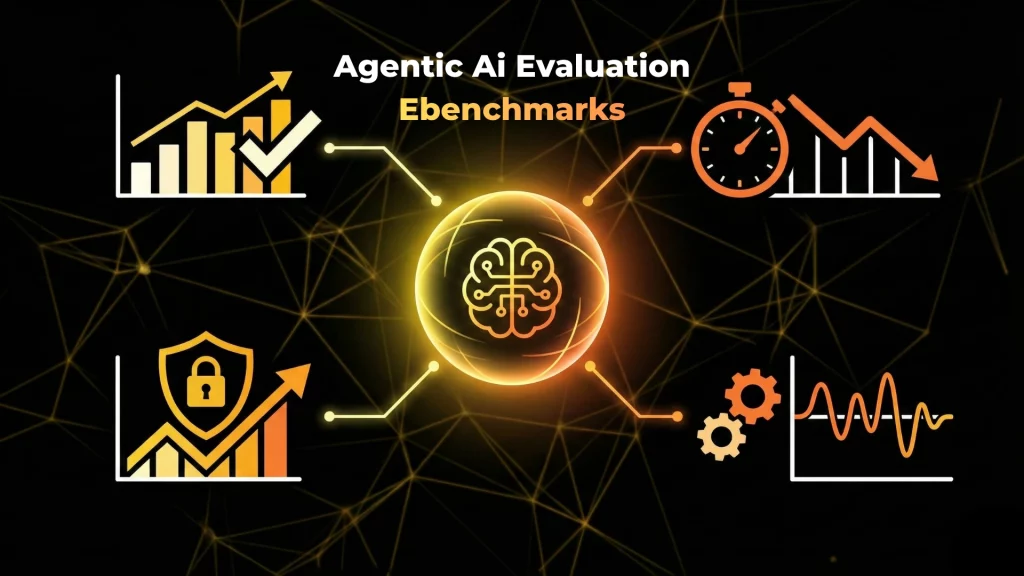

1. Evaluating Reasoning Quality

Benchmarks like AgentBench and SWE-bench are emerging to quantify reasoning success and factual accuracy.

2. Balancing Cost and Cognitive Depth

More reasoning steps = higher token cost.

Future architectures aim to optimize for adaptive reasoning depth, adjusting loops based on task difficulty.

3. Safety and Interpretability

Transparent reasoning logs and explainable intermediate thoughts are key to ethical and auditable AI systems.

4. Toward Adaptive Hybrid Cognition

The frontier lies in dynamic orchestration: agents that can switch between reasoning modes depending on context, reflective when uncertain and reactive when time-critical.
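As a very rough illustration of mode switching, a router might look like the sketch below. Everything in it is an assumption made for the example: the `choose_mode` name, the idea of a numeric uncertainty estimate, and both threshold values.

```python
def choose_mode(
    uncertainty: float,
    seconds_left: float,
    *,
    uncertainty_threshold: float = 0.5,   # assumed cut-off, tune per task
    min_reflection_seconds: float = 2.0,  # assumed cost of a reflective pass
) -> str:
    """Pick a reasoning mode from an uncertainty estimate and a time budget."""
    if seconds_left < min_reflection_seconds:
        return "reactive"      # time-critical: no room to reflect
    if uncertainty >= uncertainty_threshold:
        return "reflective"    # unsure and time allows: critique first
    return "reactive"          # confident: act directly

modes = [
    choose_mode(0.8, 10.0),  # uncertain, plenty of time
    choose_mode(0.8, 0.5),   # uncertain, but almost out of time
    choose_mode(0.1, 10.0),  # confident
]
```

The time check comes first on purpose, so that a tight deadline overrides uncertainty.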

Why Agentic Reasoning Patterns Matter

The move from static LLMs to Agentic AI represents the rise of systems that don’t just answer: they think, plan, and learn.

Reasoning patterns are the mental blueprints that make this autonomy reliable and human-aligned.

By mastering these frameworks, developers and researchers gain the ability to build transparent, resilient, and goal-oriented AI systems, the true hallmark of intelligent design.

Conclusion — From Automation to True Autonomy

Agentic reasoning patterns are the cornerstone of modern autonomous systems.

They convert raw computational intelligence into structured cognition, allowing agents to plan, adapt, and reflect just like humans.

The future of AI isn’t just faster models; it’s smarter reasoning.

By understanding and combining ReAct, Reflexion, Plan-and-Execute, and Tree of Thoughts, developers can build systems that think responsibly, act purposefully, and continuously evolve toward genuine autonomy.


Frequently Asked Questions

Agentic reasoning is the process by which autonomous AI agents interpret, plan, act, and reflect to achieve goals without continuous human input.

Agentic reasoning patterns are structured cognitive frameworks (like ReAct, Reflexion, Plan-and-Execute, and Tree of Thoughts) that define how AI agents reason and learn.

ReAct alternates between reasoning and acting, while Reflexion adds self-critique and memory feedback, enabling learning from past mistakes.

The main types are reactive, reflective, sequential (plan-based), parallel (tree-based), and recursive (critic-refine) reasoning patterns.

Yes, the patterns can be combined. Modern frameworks blend them: for example, Reflexion + ReAct improves adaptability, while ToT + Plan-Execute enhances creativity and structure.

Reasoning patterns make an agent's reasoning transparent and auditable, helping ensure AI actions align with human goals and ethical principles.