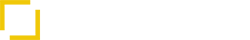

From Text Generation to Action — How Function Calling Powers Agentic AI

Modern AI has evolved beyond generating text; it now executes actions, makes API calls, retrieves structured data, and validates its own outputs.

This transformation is powered by three foundational innovations: tool use, function calling, and structured outputs.

Together, they give AI systems the ability not just to think, but to act safely, consistently, and intelligently.

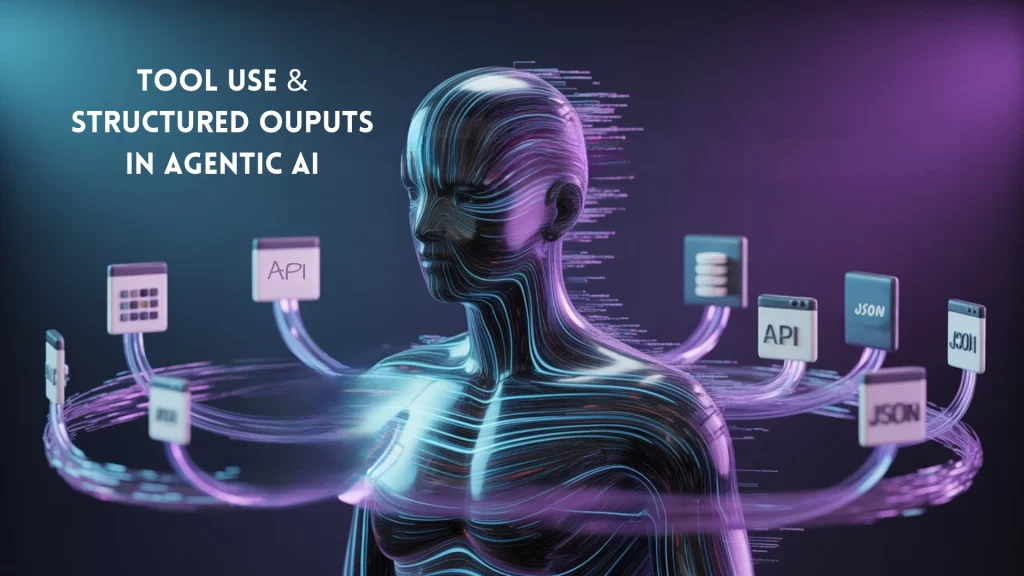

In this in-depth guide, you’ll learn how tool use works in agentic AI, how structured outputs improve reliability, and how the OpenAI and Gemini APIs and the LangChain and Semantic Kernel frameworks implement these ideas, with real-world examples and code.

The Problem — Why Unstructured AI Outputs Break Real Systems

Large language models (LLMs) are impressive at reasoning, but they often return unpredictable, loosely formatted text.

When these outputs are passed to APIs, databases, or automation workflows, errors emerge:

| Problem | Impact |
|---|---|
| Malformed JSON | API integration fails |
| Missing fields | Validation breaks |
| Hallucinated values | Unsafe or inaccurate decisions |
| No standard format | Hard to automate downstream logic |

Example failure:

```json
{
  "name": "Meeting",
  "date": "next Friday (I think?)",
  "attendees": "Maybe John and Sarah"
}
```

Such responses might look human-friendly but are machine-unfriendly.

That’s why structured outputs and function calling became essential to convert free-form model reasoning into executable, verifiable, and standardized actions.
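To make the failure concrete, here is a minimal stdlib sketch of the checks a downstream system would have to hand-write before trusting a reply like the one above. The function name `validate_event` and its rules (ISO-8601 dates, a list of attendee names) are illustrative assumptions, not part of any library.

```python
import json
from datetime import date


def validate_event(raw: str) -> list[str]:
    """Return the problems found in a model's event JSON; an empty list means it passed."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"malformed JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be a JSON object"]

    errors = []
    if not isinstance(data.get("name"), str):
        errors.append("name must be a string")
    try:
        # Assumed rule: dates must be machine-readable ISO-8601 (YYYY-MM-DD)
        date.fromisoformat(data.get("date"))
    except (TypeError, ValueError):
        errors.append("date must be an ISO-8601 date (YYYY-MM-DD)")
    attendees = data.get("attendees")
    if not (isinstance(attendees, list) and all(isinstance(a, str) for a in attendees)):
        errors.append("attendees must be a list of names")
    return errors


bad = '{"name": "Meeting", "date": "next Friday (I think?)", "attendees": "Maybe John and Sarah"}'
print(validate_event(bad))
# ['date must be an ISO-8601 date (YYYY-MM-DD)', 'attendees must be a list of names']
```

The reply parses as JSON, yet two of its three fields are unusable by code; that gap is exactly what schemas and validation close.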

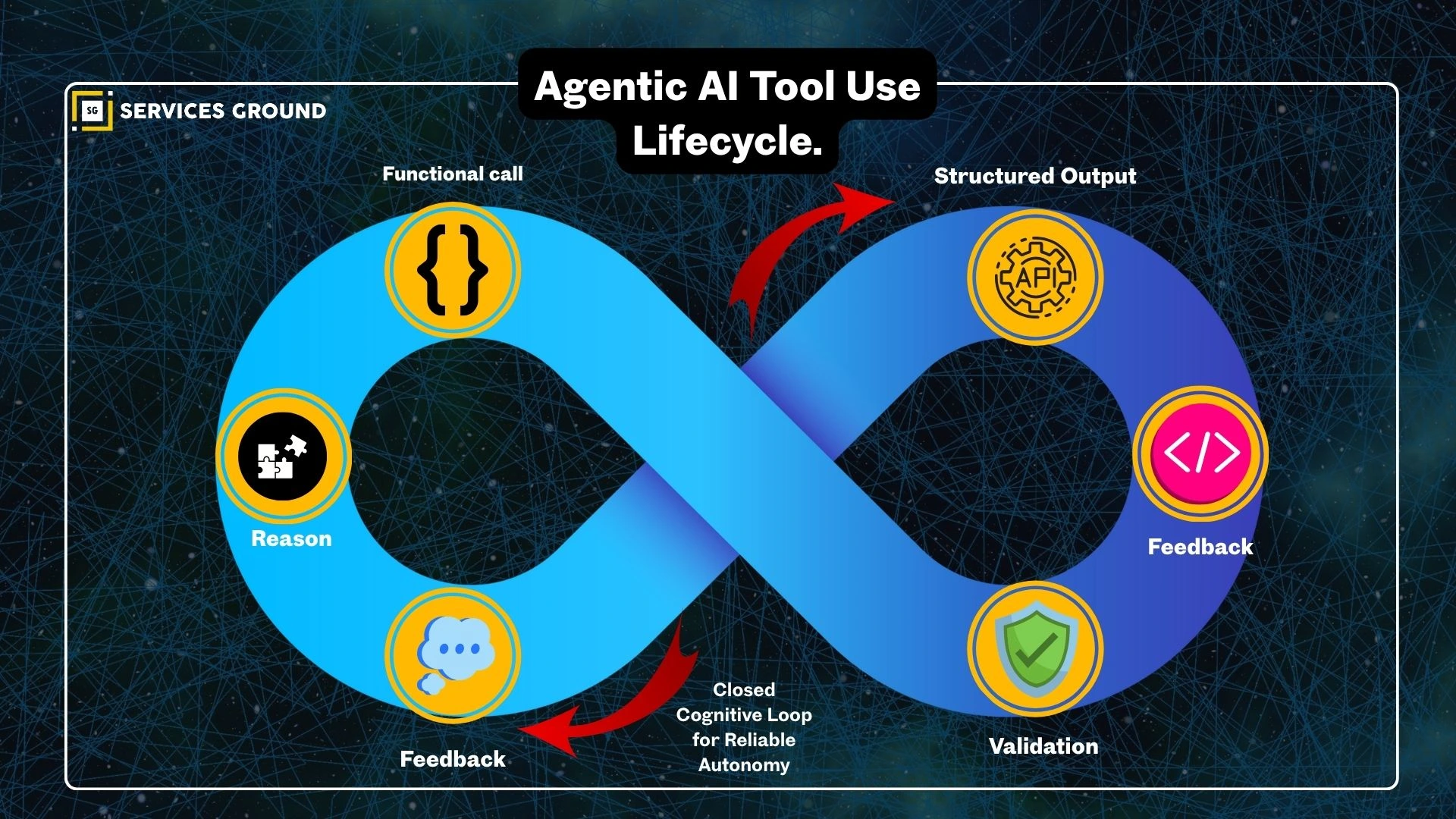

The Solution — Function Calling and Structured Outputs

Function calling lets models request external actions that your application executes and controls, while structured outputs ensure that every response conforms to a known schema.

In agentic AI systems, these two capabilities form the cognitive backbone of action:

Think → Call Tool → Receive Structured Output → Validate → Learn → Act Again

This loop closes the gap between intelligence and implementation.
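The loop can be sketched without any provider SDK. In the minimal sketch below, `scripted_model` is a hypothetical stand-in for an LLM that first requests a tool and then answers from the tool's result; a real agent would send `history` to a model API instead. The validation step checks the tool's structured output for the keys the agent expects before the next reasoning step sees it.

```python
import json


def get_weather(location: str) -> dict:
    # Stub tool: a real implementation would call a weather API
    return {"location": location, "temperature": 22, "unit": "C"}


TOOLS = {"get_weather": get_weather}
EXPECTED_KEYS = {"get_weather": {"location", "temperature", "unit"}}


def scripted_model(history: list[dict]) -> dict:
    """Hypothetical LLM stand-in: asks for a tool once, then answers from its result."""
    results = [m for m in history if m["role"] == "tool"]
    if not results:
        return {"tool": "get_weather", "arguments": '{"location": "Paris"}'}
    data = json.loads(results[-1]["content"])
    return {"answer": f"It is {data['temperature']} degrees {data['unit']} in {data['location']}."}


def run_agent(question: str, model=scripted_model, max_steps: int = 5) -> str:
    history = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        step = model(history)                                   # Think
        if "answer" in step:
            return step["answer"]                               # Act on the final answer
        name = step["tool"]
        result = TOOLS[name](**json.loads(step["arguments"]))   # Call tool, receive structured output
        missing = EXPECTED_KEYS[name] - set(result)             # Validate
        if missing:
            raise ValueError(f"{name} result is missing {sorted(missing)}")
        # Learn: record the result so the next step can build on it
        history.append({"role": "tool", "name": name, "content": json.dumps(result)})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("What's the weather in Paris?"))
# It is 22 degrees C in Paris.
```

The `max_steps` cap is a deliberate design choice: an agent loop should always have a bound so a confused model cannot call tools forever.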

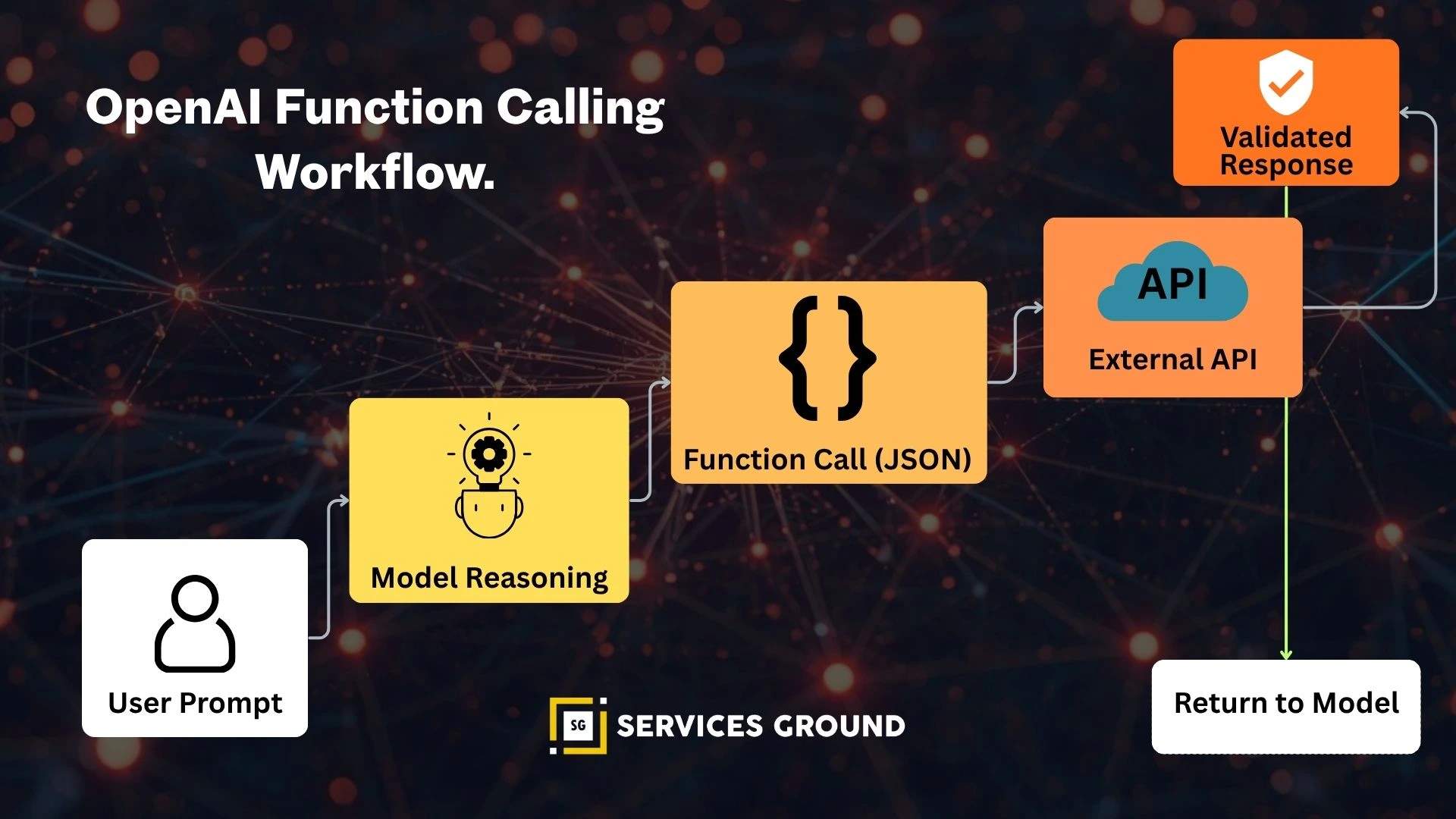

OpenAI Function Calling — The Foundation of Tool Use

OpenAI’s function calling lets models like GPT-4o request calls to developer-defined functions: the model returns the function name and its arguments, and your code runs the function.

Each function is described in JSON Schema format, so the model knows exactly how to call it.

Real OpenAI Example

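```python
import json

from openai import OpenAI

client = OpenAI()

# The function the model may ask us to run (returns stub data here)
def get_weather(location):
    return {"location": location, "temperature": 22, "unit": "C"}

# JSON Schema description of the function, in Chat Completions tool format
tools = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city",
        "parameters": {
            "type": "object",
            "properties": {"location": {"type": "string"}},
            "required": ["location"],
        },
    },
}]

messages = [{"role": "user", "content": "What's the weather in Paris?"}]
response = client.chat.completions.create(model="gpt-4o", messages=messages, tools=tools)
message = response.choices[0].message

# The model does not run the function; it returns a tool call for our code to execute
if message.tool_calls:
    messages.append(message)
    for call in message.tool_calls:
        args = json.loads(call.function.arguments)  # e.g. {"location": "Paris"}
        result = get_weather(**args)
        messages.append({"role": "tool", "tool_call_id": call.id, "content": json.dumps(result)})
    # Second request: the model turns the tool result into a final answer
    response = client.chat.completions.create(model="gpt-4o", messages=messages, tools=tools)

print(response.choices[0].message.content)
```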

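The flow:

- The model detects the intent (“weather”)

- It returns a tool call for get_weather with JSON arguments such as {"location": "Paris"}

- Your code runs the function, sends the result back, and the model writes the final answer

Because the model can only request functions you have declared, and your code decides whether to execute them, the model never runs arbitrary code, and the arguments arrive as JSON shaped by the declared schema.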
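Since the model only proposes a call, the application is the gatekeeper. A minimal sketch of that gatekeeping, assuming the tool call has already been read from the response as a name plus a JSON arguments string (the form the Chat Completions API returns): only allowlisted functions run, and malformed or unexpected arguments are reported back to the model as an error instead of crashing. `dispatch` and `REGISTRY` are hypothetical names.

```python
import json


def get_weather(location: str) -> dict:
    return {"location": location, "temperature": 22, "unit": "C"}


# Allowlist: tool name -> (function, exact set of accepted argument names)
REGISTRY = {"get_weather": (get_weather, {"location"})}


def dispatch(name: str, arguments: str) -> str:
    """Execute an approved tool call; return a JSON string to send back to the model."""
    if name not in REGISTRY:
        return json.dumps({"error": f"unknown tool: {name}"})
    fn, params = REGISTRY[name]
    try:
        args = json.loads(arguments)
    except json.JSONDecodeError:
        return json.dumps({"error": "arguments are not valid JSON"})
    if not isinstance(args, dict) or set(args) != params:
        return json.dumps({"error": f"{name} expects arguments {sorted(params)}"})
    return json.dumps(fn(**args))


print(dispatch("get_weather", '{"location": "Paris"}'))
# {"location": "Paris", "temperature": 22, "unit": "C"}
print(dispatch("delete_files", '{"path": "/"}'))
# {"error": "unknown tool: delete_files"}
```

Returning errors as tool results, rather than raising, gives the model a chance to correct its call on the next turn.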

Structured Outputs — Type Safety for AI Responses

Structured outputs enforce a contract between model and application.

Every response must match the defined schema, so parsing AI output becomes as predictable as reading typed database records.

OpenAI Structured Output with Pydantic

```python
from openai import OpenAI
from pydantic import BaseModel

client = OpenAI()

# The schema the model's reply must follow
class CalendarEvent(BaseModel):
    name: str
    date: str
    participants: list[str]

response = client.responses.parse(
    model="gpt-4o",
    input=[
        {"role": "system", "content": "Extract calendar event details"},
        {"role": "user", "content": "Alice and Bob are meeting on May 10 for strategy review."},
    ],
    text_format=CalendarEvent,
)

# output_parsed is a CalendarEvent instance, not raw text
event = response.output_parsed
print(event.model_dump_json())
```

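Example output (illustrative; exact values depend on the model, which must also infer a year the input never states):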

{ "name": "Strategy Review", "date": "2025-05-10", "participants": ["Alice", "Bob"] }

Structured output = reliability + automation readiness.
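Passing a class as the output format works because the SDK turns it into a JSON Schema that constrains the model's reply. The stdlib sketch below approximates that conversion for a dataclass so you can see the contract itself; it is an illustration, not the SDK's actual code. It marks every field as required and sets `additionalProperties` to false, which OpenAI's strict structured-output mode expects.

```python
from dataclasses import dataclass, fields
from typing import get_args, get_origin, get_type_hints

# Python scalar types and their JSON Schema names
_SCALARS = {str: "string", int: "integer", float: "number", bool: "boolean"}


def type_schema(tp) -> dict:
    """Map one field type to a JSON Schema fragment (scalars and list[...] only)."""
    if get_origin(tp) is list:
        (item_type,) = get_args(tp)
        return {"type": "array", "items": type_schema(item_type)}
    if tp in _SCALARS:
        return {"type": _SCALARS[tp]}
    raise TypeError(f"unsupported field type: {tp!r}")


def schema_for(cls) -> dict:
    hints = get_type_hints(cls)
    names = [f.name for f in fields(cls)]
    return {
        "type": "object",
        "properties": {name: type_schema(hints[name]) for name in names},
        "required": names,              # strict mode: every field is required
        "additionalProperties": False,  # strict mode: no extra keys allowed
    }


@dataclass
class CalendarEvent:
    name: str
    date: str
    participants: list[str]


print(schema_for(CalendarEvent))
```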

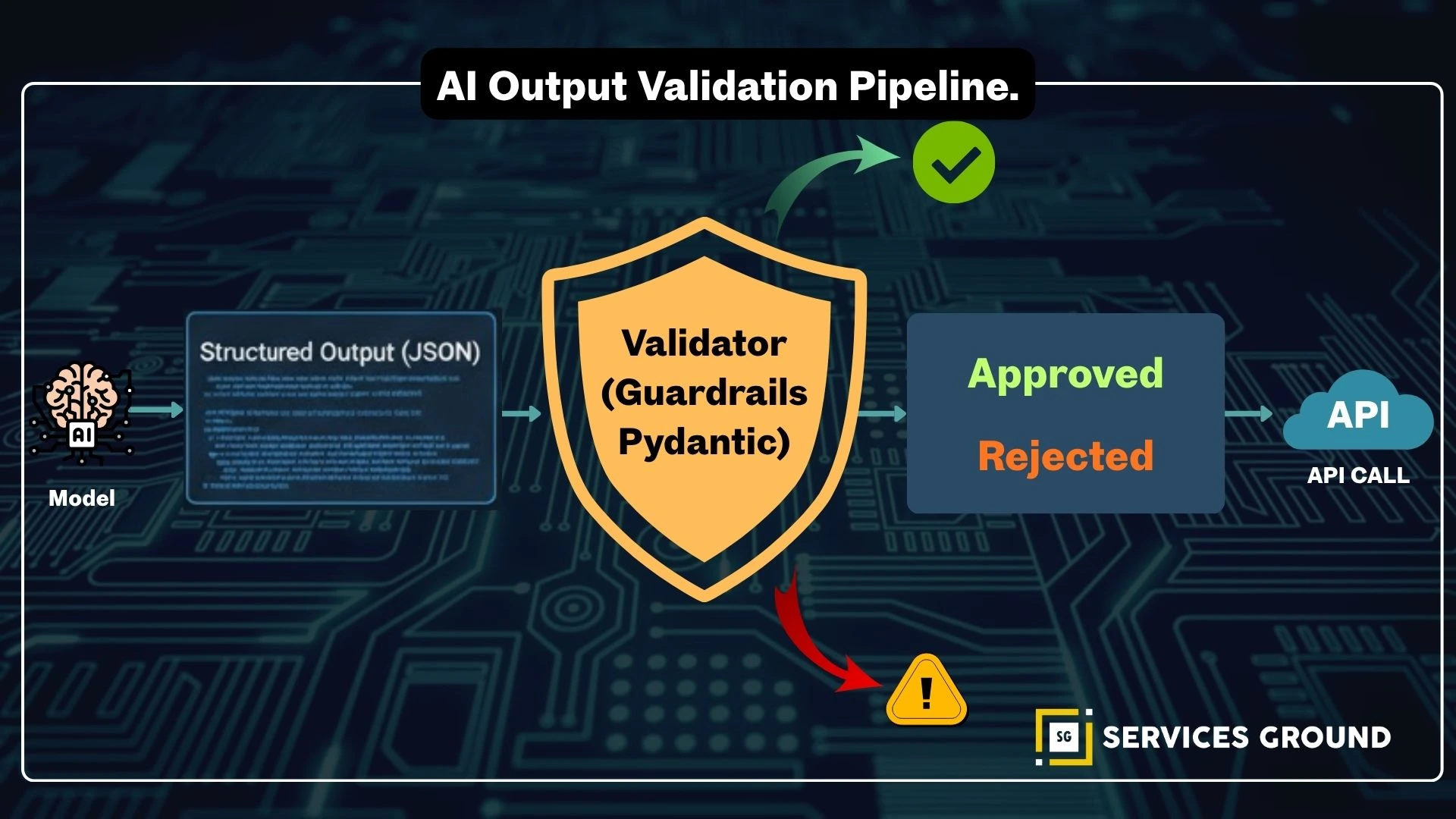

JSON Schema and AI Output Validation

Validation transforms structured output from “useful” to “trustworthy.”

A model might generate something valid in shape but wrong in meaning.

Libraries like Pydantic, Guardrails-AI, and Zod check that outputs follow both the schema and your business constraints.
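Here is what “valid in shape but wrong in meaning” looks like in code: the event below already satisfies the schema, yet breaks rules the schema cannot express. The specific rules (no past dates, at least one participant, no duplicates) are illustrative assumptions; `today` is passed in explicitly so the check is deterministic and easy to test.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class CalendarEvent:
    name: str
    date: str
    participants: list[str]


def business_errors(event: CalendarEvent, today: date) -> list[str]:
    """Check rules a JSON Schema cannot express; the event's shape is assumed valid."""
    errors = []
    try:
        when = date.fromisoformat(event.date)
    except ValueError:
        errors.append(f"date {event.date!r} is not in YYYY-MM-DD format")
    else:
        if when < today:
            errors.append(f"date {event.date} is in the past")
    if not event.participants:
        errors.append("at least one participant is required")
    if len(set(event.participants)) != len(event.participants):
        errors.append("participants contain duplicates")
    return errors


event = CalendarEvent("Strategy Review", "2020-05-10", ["Alice", "Alice"])
print(business_errors(event, today=date(2025, 1, 1)))
# ['date 2020-05-10 is in the past', 'participants contain duplicates']
```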

Guardrails Example — Enforcing Allowed Values

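```python
# Requires: pip install guardrails-ai
#           guardrails hub install hub://guardrails/valid_choices
from guardrails import Guard, OnFailAction
from guardrails.hub import ValidChoices

# Accept only these four values; anything else raises an exception
guard = Guard().use(
    ValidChoices,
    choices=["sunny", "cloudy", "rainy", "snowy"],
    on_fail=OnFailAction.EXCEPTION,
)

# Validate the value the model extracted (not the whole sentence)
outcome = guard.validate("cloudy")
print(outcome.validated_output)
```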

Output:

cloudy

If the model said “foggy,” the guardrail would block it.

This AI output validation is key to governance and compliance in enterprise systems.

LangChain Tools — Reasoning with Multi-Step Execution

LangChain wraps function calling in reusable structured tools.

Each tool has its own schema and can be called dynamically by an LLM agent.

Example — Using Structured Tools

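```python
from langchain.agents import AgentType, initialize_agent
from langchain.tools import StructuredTool
from langchain_openai import ChatOpenAI

def calculate_area(width: float, height: float) -> float:
    """Return the area of a rectangle."""  # from_function uses this as the tool description
    return width * height

# The argument schema (width: float, height: float) is inferred from the type hints
area_tool = StructuredTool.from_function(calculate_area)

llm = ChatOpenAI(model="gpt-4o")

# initialize_agent is LangChain's legacy agent API (deprecated in newer releases)
agent = initialize_agent(
    [area_tool],
    llm,
    agent=AgentType.STRUCTURED_CHAT_ZERO_SHOT_REACT_DESCRIPTION,
)

result = agent.invoke({"input": "Find the area of a 5x8 rectangle."})
print(result["output"])
```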

LangChain’s agent handles the reasoning loop:

- Identifies intent

- Selects the right tool

- Validates the tool arguments against the tool’s schema, runs the tool, and feeds the result back to the model

It’s the “multi-tool brain” for Agentic AI systems.
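`StructuredTool.from_function` builds a tool's argument schema from the function's type hints and uses its docstring as the description. The stdlib sketch below imitates that idea to show what an agent actually receives about each tool; `tool_spec` is a hypothetical helper, not LangChain's implementation, and it only handles scalar parameter types.

```python
import inspect
from typing import get_type_hints

_SCALARS = {str: "string", int: "integer", float: "number", bool: "boolean"}


def tool_spec(fn) -> dict:
    """Describe a typed, documented function as a tool: name, description, JSON Schema."""
    description = inspect.getdoc(fn)
    if not description:
        # Similar in spirit to LangChain requiring a description for each tool
        raise ValueError(f"{fn.__name__} needs a docstring to serve as its description")
    hints = get_type_hints(fn)
    params = inspect.signature(fn).parameters
    return {
        "name": fn.__name__,
        "description": description,
        "parameters": {
            "type": "object",
            "properties": {p: {"type": _SCALARS[hints[p]]} for p in params},
            # Parameters without defaults must be supplied by the model
            "required": [p for p, v in params.items() if v.default is inspect.Parameter.empty],
        },
    }


def calculate_area(width: float, height: float) -> float:
    """Return the area of a rectangle."""
    return width * height


print(tool_spec(calculate_area))
```

The model never sees the function body, only this description, which is why clear names, type hints, and docstrings matter as much as the code.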

Gemini Function Calling — Structured Intelligence by Design

Google’s Gemini API integrates function calling natively with well-typed parameters and responses.

This enables clear schema definitions and easier debugging.

Gemini Example

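```python
from google import genai
from google.genai import types

client = genai.Client()  # reads the API key from the GEMINI_API_KEY environment variable

def get_exchange_rate(currency):
    rates = {"USD": 1.0, "EUR": 0.93, "JPY": 150.1}  # sample data, not live rates
    return {"currency": currency, "rate": rates.get(currency, "Unknown")}

# Explicit function declaration with a JSON Schema-style parameter definition
exchange_rate_fn = {
    "name": "get_exchange_rate",
    "description": "Fetch the latest currency rate",
    "parameters": {
        "type": "object",
        "properties": {"currency": {"type": "string"}},
        "required": ["currency"],
    },
}

config = types.GenerateContentConfig(
    tools=[types.Tool(function_declarations=[exchange_rate_fn])]
)

response = client.models.generate_content(
    model="gemini-2.5-flash",  # any current Gemini model that supports function calling
    contents="What's the exchange rate for EUR?",
    config=config,
)

# The model returns a function call; the application executes it
part = response.candidates[0].content.parts[0]
if part.function_call:
    print(get_exchange_rate(**part.function_call.args))
else:
    print(response.text)
```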

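Gemini’s schema-first approach mirrors OpenAI’s: function declarations use the same JSON Schema-style parameter definitions, so a schema written for one provider usually needs only a thin wrapper to work with the other.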

Semantic Kernel — Enterprise Tool Orchestration

Microsoft’s Semantic Kernel exposes your code to models as plugins (called skills in early versions), and the kernel handles function calling and orchestration around them.

It’s designed for policy enforcement, traceability, and modular orchestration.

Example

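```python
import asyncio

from semantic_kernel import Kernel
from semantic_kernel.connectors.ai.open_ai import OpenAIChatCompletion
from semantic_kernel.functions import KernelArguments, kernel_function


class TextPlugin:
    # The decorator registers the method as a kernel function a model can call
    @kernel_function(name="summarize_text", description="Return a short preview of the text")
    def summarize_text(self, text: str) -> str:
        return text[:80] + "..."


async def main() -> None:
    kernel = Kernel()
    # Chat service for model-driven calls (reads OPENAI_API_KEY); the direct call below does not use it
    kernel.add_service(OpenAIChatCompletion(ai_model_id="gpt-4o"))
    kernel.add_plugin(TextPlugin(), plugin_name="text")

    result = await kernel.invoke(
        plugin_name="text",
        function_name="summarize_text",
        arguments=KernelArguments(text="Agentic AI links reasoning, planning, and validation in a closed loop."),
    )
    print(result)


asyncio.run(main())
```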

Semantic Kernel shines in regulated environments where AI safety, reproducibility, and governance are non-negotiable.
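Semantic Kernel exposes filters that can wrap function invocations for exactly this kind of governance. The plain-Python sketch below shows the same pattern without the SDK: a decorator enforces a policy allowlist and writes one structured audit record per call. Every name in it (`governed`, `ALLOWED`, `AUDIT_LOG`, `send_email`) is hypothetical.

```python
import functools
import json
import time

AUDIT_LOG: list[str] = []          # stand-in for a real log sink
ALLOWED = {"summarize_text"}       # hypothetical policy: functions the agent may run


def governed(fn):
    """Enforce the allowlist and append one JSON audit record per call."""
    @functools.wraps(fn)
    def wrapper(**kwargs):  # keyword-only so every argument is logged by name
        record = {"function": fn.__name__, "args": kwargs}
        if fn.__name__ not in ALLOWED:
            record["status"] = "blocked"
            AUDIT_LOG.append(json.dumps(record))
            raise PermissionError(f"{fn.__name__} is not allowed by policy")
        start = time.perf_counter()
        result = fn(**kwargs)
        record.update(status="ok", result=result, ms=round((time.perf_counter() - start) * 1000, 3))
        AUDIT_LOG.append(json.dumps(record))
        return result
    return wrapper


@governed
def summarize_text(text: str) -> str:
    return text[:80] + "..."


@governed
def send_email(to: str, body: str) -> str:
    return f"sent to {to}"


print(summarize_text(text="Agentic AI links reasoning, planning, and validation in a closed loop."))
try:
    send_email(to="cfo@example.com", body="Approve the budget")
except PermissionError as err:
    print("Blocked:", err)
print(len(AUDIT_LOG))  # 2
```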

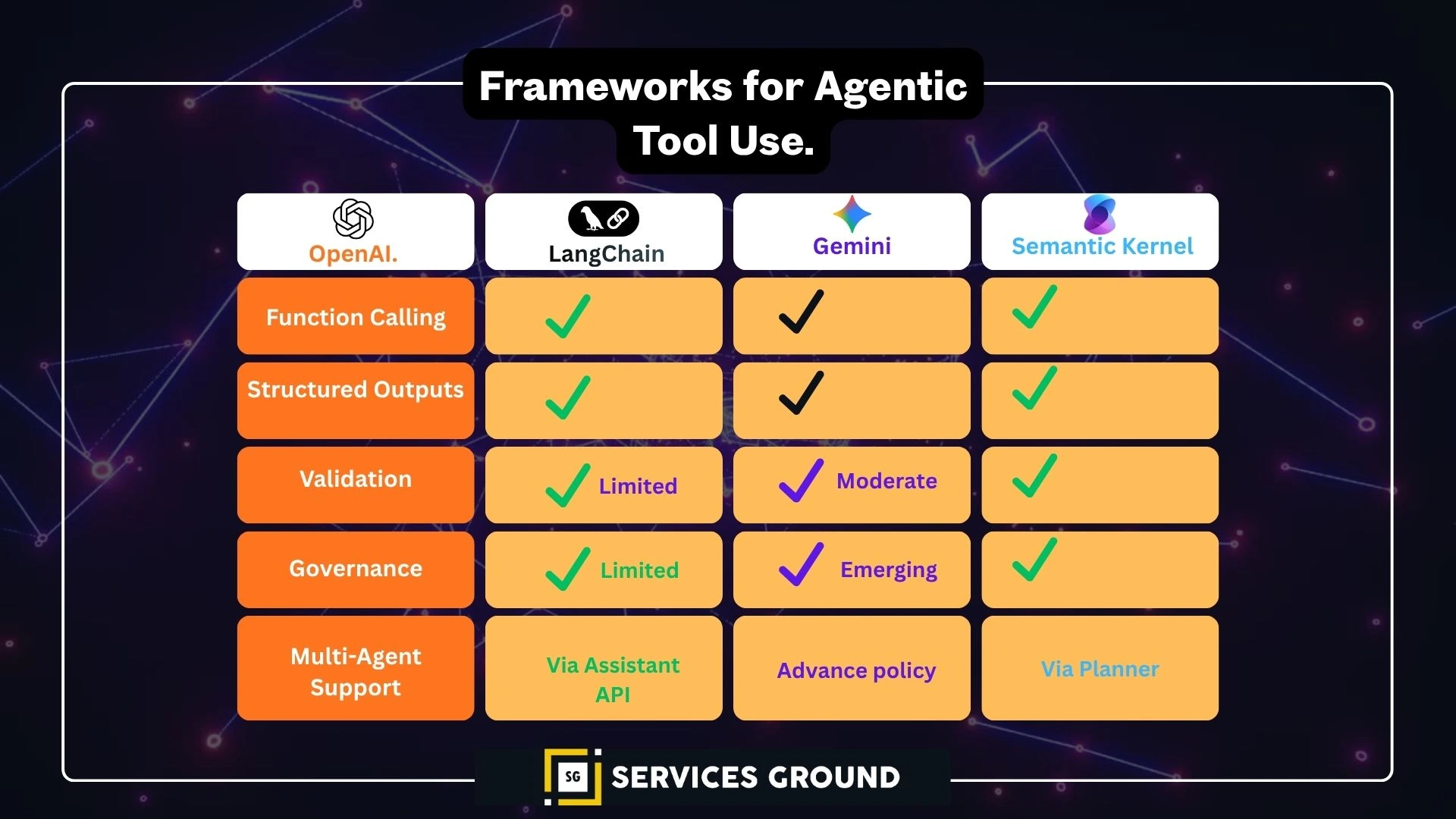

From Chaos to Control — Before and After Structured Outputs

| Before Structured Outputs | After Structured Outputs |
|---|---|
| Free-form text | JSON Schema-validated response |
| Brittle parsing | Deterministic field access |
| No type safety | Automatic validation |
| Human-only readable | API and machine-ready |
| Frequent errors | Traceable, compliant output |

This transition marks the difference between AI prototypes and production-grade Agentic AI.
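The “brittle parsing” row in miniature: a regex written against one phrasing of a free-text answer silently fails on the next phrasing, while a field lookup on schema-conforming JSON does not depend on wording. Both reply strings are made-up examples.

```python
import json
import re

# Scraping free text: the pattern encodes one particular phrasing
DATE_PATTERN = re.compile(r"on (\w+ \d{1,2})")

reply_a = "Sure! The strategy review is on May 10 with Alice and Bob."
reply_b = "Alice and Bob will hold the strategy review May 10."

print(DATE_PATTERN.search(reply_a).group(1))  # May 10
print(DATE_PATTERN.search(reply_b))           # None: the word "on" is gone

# Structured output: the same information as a schema-conforming object
structured = '{"name": "Strategy Review", "date": "2025-05-10", "participants": ["Alice", "Bob"]}'
print(json.loads(structured)["date"])         # 2025-05-10
```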

Real-World Use Case — AI Calendar Assistant

An end-to-end agent using OpenAI Structured Output + LangChain Tool:

- User says: “Schedule a meeting with Jane and Mark tomorrow at 2 PM.”

- Model parses event details → CalendarEvent schema

- Guardrails validation confirms a valid date and participants

- LangChain tool calls Google Calendar API

- Confirmation is sent to the user

Result: a fully automated, validated, and logged workflow that is safe to run in production.
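A minimal sketch of that pipeline with the external pieces stubbed out. The model's parsed output is a hard-coded dictionary, and `create_calendar_event` is a hypothetical placeholder for the Google Calendar call. Validation runs before the calendar call, so an invalid event never produces a side effect. “Tomorrow at 2 PM” is assumed to have been resolved by the model against an explicit `now`, which keeps the example deterministic.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class CalendarEvent:
    name: str
    start: str               # ISO-8601 datetime, e.g. "2025-05-11T14:00"
    participants: list[str]


def validate(event: CalendarEvent, now: datetime) -> list[str]:
    errors = []
    try:
        if datetime.fromisoformat(event.start) <= now:
            errors.append("start must be in the future")
    except ValueError:
        errors.append("start is not an ISO-8601 datetime")
    if not event.participants:
        errors.append("no participants")
    return errors


def create_calendar_event(event: CalendarEvent) -> str:
    # Hypothetical stub: a real agent would call the Google Calendar API here
    return "evt-001"


def schedule(parsed: dict, now: datetime) -> str:
    event = CalendarEvent(**parsed)           # 1. structured output from the model
    errors = validate(event, now)             # 2. validation before any side effect
    if errors:
        return "Could not schedule: " + "; ".join(errors)
    event_id = create_calendar_event(event)   # 3. tool call
    people = ", ".join(event.participants)
    return f"Scheduled '{event.name}' at {event.start} with {people} ({event_id})"  # 4. confirmation


now = datetime(2025, 5, 10, 9, 0)
# What the model might return for "Schedule a meeting with Jane and Mark tomorrow at 2 PM."
parsed = {"name": "Meeting", "start": "2025-05-11T14:00", "participants": ["Jane", "Mark"]}
print(schedule(parsed, now))
# Scheduled 'Meeting' at 2025-05-11T14:00 with Jane, Mark (evt-001)
```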

Best Practices for Reliable Agentic Tool Use

- Define clear schemas for all tool inputs and outputs.

- Validate everything — even successful outputs can drift.

- Implement refusal logic (handle unsafe prompts).

- Add structured logging to track each call and result.

- Combine reasoning + execution (LLM + function orchestration).

- Version your schemas and maintain backward compatibility.
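One way to version schemas, sketched under assumptions: each payload carries a `schema_version` field, and older versions are upgraded step by step to the current one before validation. The field names and the v1-to-v2 change (renaming `attendees` to `participants`) are hypothetical.

```python
CURRENT_VERSION = 2


def upgrade_v1_to_v2(payload: dict) -> dict:
    # Hypothetical change in v2: "attendees" was renamed to "participants"
    upgraded = {k: v for k, v in payload.items() if k != "attendees"}
    upgraded["participants"] = payload.get("attendees", [])
    upgraded["schema_version"] = 2
    return upgraded


UPGRADES = {1: upgrade_v1_to_v2}  # version -> function that lifts a payload by one version


def to_current(payload: dict) -> dict:
    """Upgrade a payload of any known older version to CURRENT_VERSION."""
    version = payload.get("schema_version", 1)  # assumption: unversioned payloads are v1
    while version < CURRENT_VERSION:
        payload = UPGRADES[version](payload)
        version = payload["schema_version"]
    if version != CURRENT_VERSION:
        raise ValueError(f"unsupported schema_version: {version}")
    return payload


old = {"name": "Standup", "date": "2025-05-12", "attendees": ["Jane"]}
print(to_current(old))
# {'name': 'Standup', 'date': '2025-05-12', 'participants': ['Jane'], 'schema_version': 2}
```

Chaining single-step upgrades keeps each migration small, and consumers only ever validate against the current schema.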

Conclusion — The Infrastructure of Reliable Autonomy

Tool use, structured outputs, and validation are not isolated features; they are the operating system of Agentic AI.

They enable models to reason, act, and verify outcomes in real time.

By adopting frameworks like OpenAI, LangChain, Gemini, and Semantic Kernel with schema-driven validation, developers can build safe, transparent, and scalable autonomous systems.

The future of AI is not just generative; it is governed, structured, and agentic.

Frequently Asked Questions

What is tool use in agentic AI?

Tool use is how an AI agent performs external actions, like API calls, through structured function definitions.

What are structured outputs?

Structured outputs enforce predefined schemas so that model responses are machine-readable and safe to process.

Why does output validation matter?

It ensures responses match required formats, improving reliability and preventing data errors or unsafe behavior.

Which frameworks support function calling and structured outputs?

OpenAI, LangChain, Gemini, and Semantic Kernel all provide schema-based or tool-driven structured responses.

What comes next for agentic AI?

Tighter integration of reasoning, validation, and execution, creating autonomous, trustworthy AI ecosystems.