Introduction — The Missing Link in Agentic AI

Artificial Intelligence has evolved from isolated models into interconnected ecosystems.

Agentic AI isn’t just about reasoning; it’s about cooperation. For AI agents to collaborate effectively, they must share context, memory, and intent across tools, models, and environments.

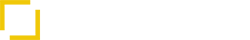

That’s where the Model Context Protocol (MCP) comes in.

MCP provides a universal way for models, servers, and clients to exchange structured context, ensuring interoperability and consistent understanding between AI systems.

In this article, we’ll break down what MCP is, how it works, and why it’s becoming the backbone of modern multi-agent intelligence.

What Is the Model Context Protocol (MCP)?

The Model Context Protocol (MCP) is an open communication standard that defines how AI models, clients, and tools exchange contextual data.

It acts as a “shared language” that helps a model keep track of what it is talking about, who it is talking to, and why, across multiple interactions.

Analogy:

Think of MCP as the HTTP of AI. Instead of delivering web pages, it delivers structured context between agents, tools, and memory stores.

By maintaining a consistent format for requests, responses, and updates, MCP helps AI systems operate cohesively regardless of their framework or platform.
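In the published MCP specification, that consistent format is JSON-RPC 2.0. A request carries a jsonrpc version, an id, a method, and optional params; a response echoes the id with a result or an error; a notification has no id and expects no reply. The standard-library sketch below builds and classifies those three shapes. The method names resources/read and notifications/initialized come from the MCP specification, while the helper functions and the context:// URI are hypothetical examples.

```python
from __future__ import annotations

import json
from typing import Any

JSONRPC_VERSION = "2.0"


def make_request(req_id: int, method: str, params: dict[str, Any] | None = None) -> str:
    """A request carries an id; the peer must answer with the same id."""
    msg: dict[str, Any] = {"jsonrpc": JSONRPC_VERSION, "id": req_id, "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


def make_notification(method: str, params: dict[str, Any] | None = None) -> str:
    """A notification looks like a request but has no id and gets no response."""
    msg: dict[str, Any] = {"jsonrpc": JSONRPC_VERSION, "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


def make_result(req_id: int, result: dict[str, Any]) -> str:
    """A successful response echoes the id of the request it answers."""
    return json.dumps({"jsonrpc": JSONRPC_VERSION, "id": req_id, "result": result})


def classify(raw: str) -> str:
    """Return 'request', 'notification', or 'response' for a raw message."""
    msg = json.loads(raw)
    if msg.get("jsonrpc") != JSONRPC_VERSION:
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in msg:
        return "request" if "id" in msg else "notification"
    if "id" in msg and ("result" in msg or "error" in msg):
        return "response"
    raise ValueError("malformed message")


if __name__ == "__main__":
    req = make_request(1, "resources/read", {"uri": "context://sessions/sg-2025-004"})
    print(req)
    print(classify(req))  # request
    print(classify(make_notification("notifications/initialized")))  # notification
```

Keeping the three shapes distinct is what lets any client talk to any server: both sides can tell, from the message alone, whether a reply is expected and which request it belongs to.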

Why MCP Matters for Agentic AI

Without a shared protocol, each model instance tends to live in isolation.

A reasoning agent can plan, but forgets previous actions. A tool can execute, but lacks context about user intent. MCP addresses this by giving agents a standard way to reach shared context, such as memory stores, tools, and data sources, so that reasoning, planning, and execution stay connected in a single contextual thread.

Key Advantages:

- Persistent Context: Memory that carries across sessions.

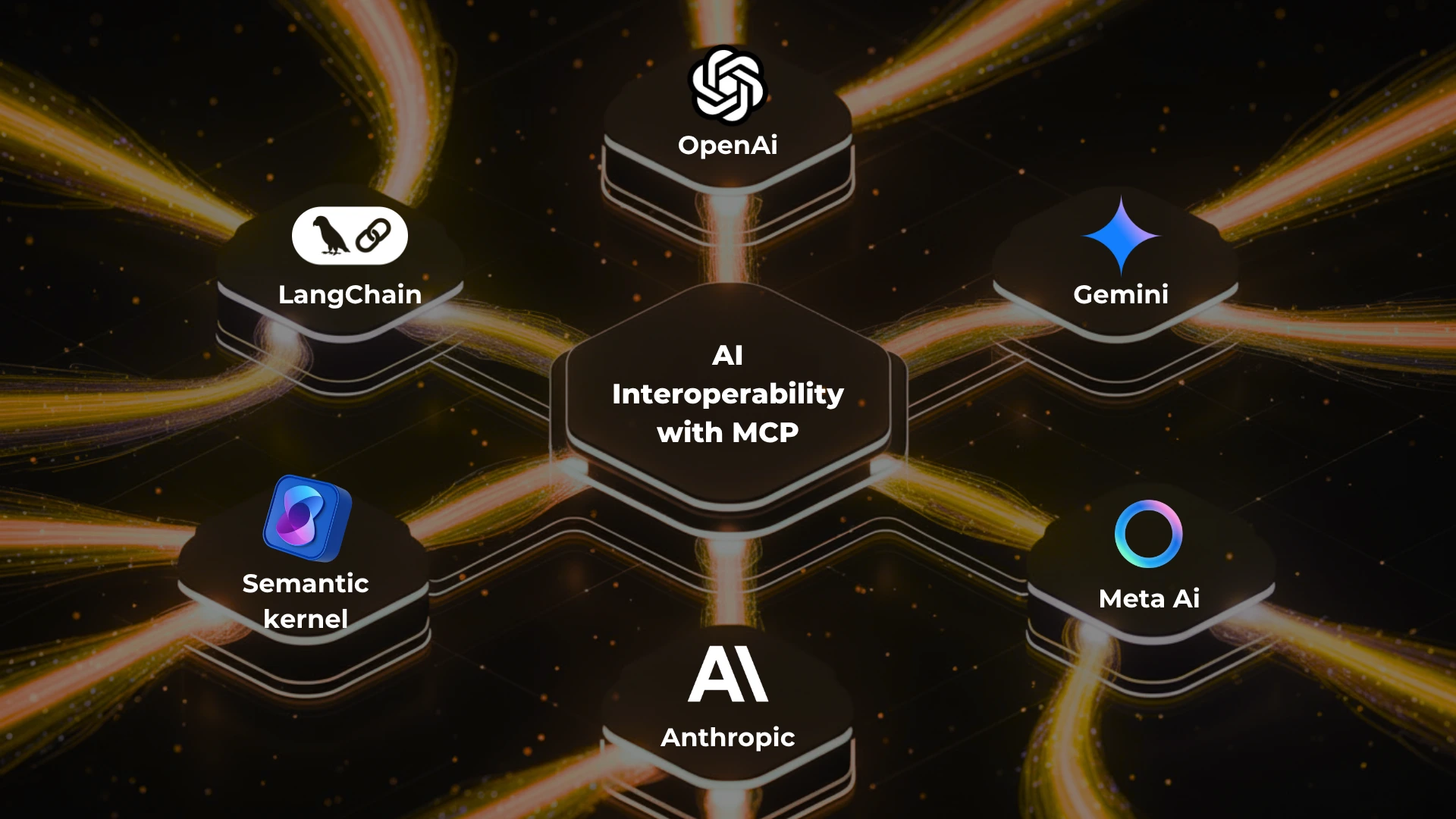

- Shared Understanding: Common data schema between LLMs, SDKs, and APIs.

- Interoperability: Seamless connection between different AI providers (OpenAI, Anthropic, Gemini, etc.).

- Governance and Traceability: Because every exchange is a structured message, context updates can be versioned and logged.

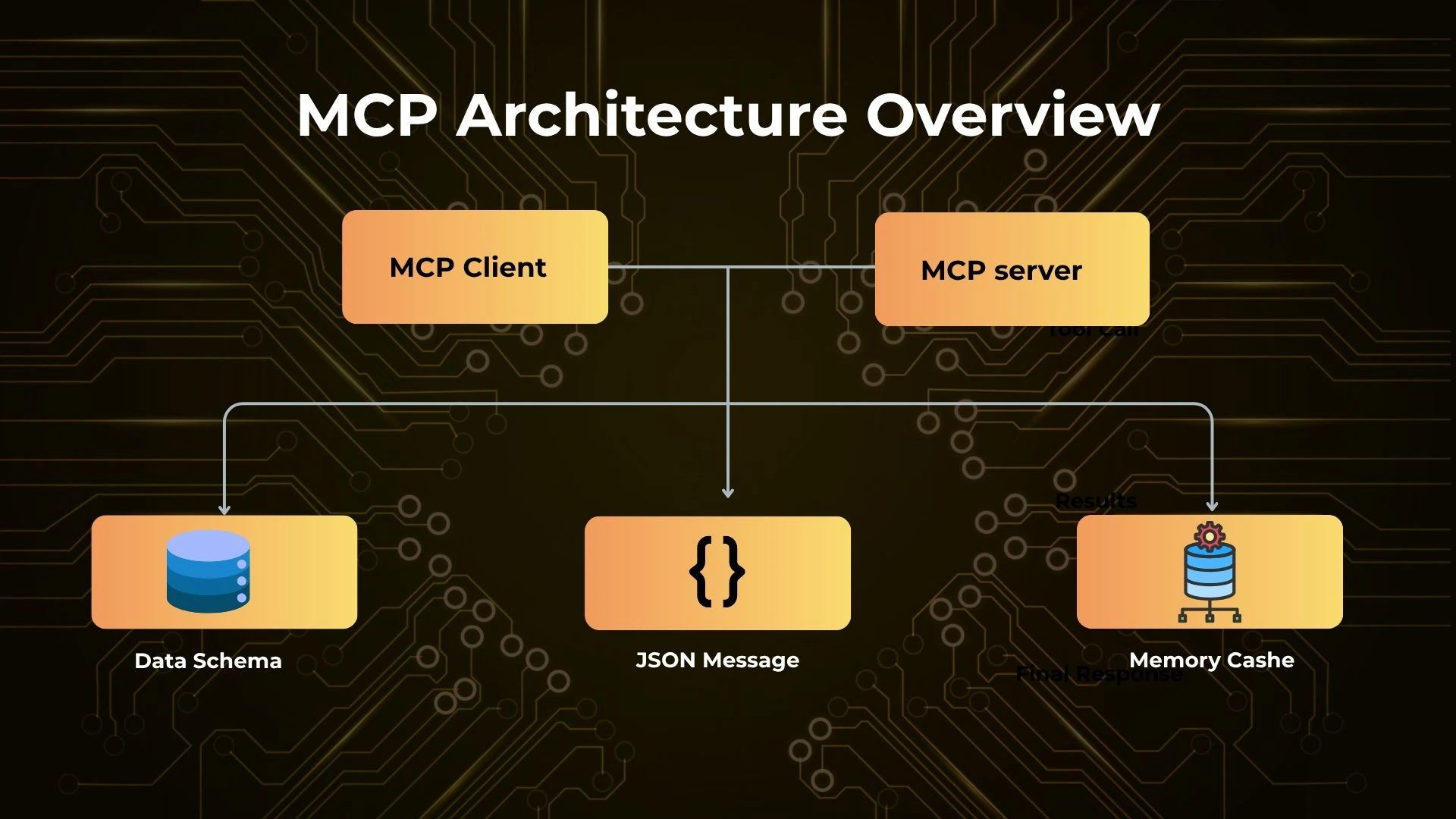
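MCP does not itself mandate versioning or audit logs, but because every context change arrives as a discrete message, a server can add both. A minimal sketch under that assumption: a hypothetical VersionedContext store that keeps every snapshot of a session's context and records who made each change.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field
from typing import Any


@dataclass
class AuditEntry:
    session_id: str
    version: int
    actor: str
    timestamp: float


@dataclass
class VersionedContext:
    """Hypothetical store: each update creates a new numbered snapshot."""

    history: dict[str, list[dict[str, Any]]] = field(default_factory=dict)
    audit_log: list[AuditEntry] = field(default_factory=list)

    def update(self, session_id: str, actor: str, changes: dict[str, Any]) -> int:
        versions = self.history.setdefault(session_id, [])
        # Copy the latest snapshot (or start empty) and apply the changes to the copy.
        snapshot = dict(versions[-1]) if versions else {}
        snapshot.update(changes)
        versions.append(snapshot)
        version = len(versions)  # versions are numbered from 1
        self.audit_log.append(AuditEntry(session_id, version, actor, time.time()))
        return version

    def get(self, session_id: str, version: int | None = None) -> dict[str, Any]:
        versions = self.history[session_id]
        if version is None:
            version = len(versions)
        if not 1 <= version <= len(versions):
            raise IndexError(f"no version {version} for session {session_id}")
        # Return a shallow copy so callers cannot replace stored fields.
        return dict(versions[version - 1])


if __name__ == "__main__":
    store = VersionedContext()
    store.update("sg-2025-004", "planner", {"user_intent": "book a flight"})
    store.update("sg-2025-004", "executor", {"tools_used": ["search_flights"]})
    print(store.get("sg-2025-004", version=1))
    print([(e.actor, e.version) for e in store.audit_log])
```

Storing whole snapshots keeps rollback trivial at the cost of memory; a production server might store diffs instead.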

How the Model Context Protocol Works

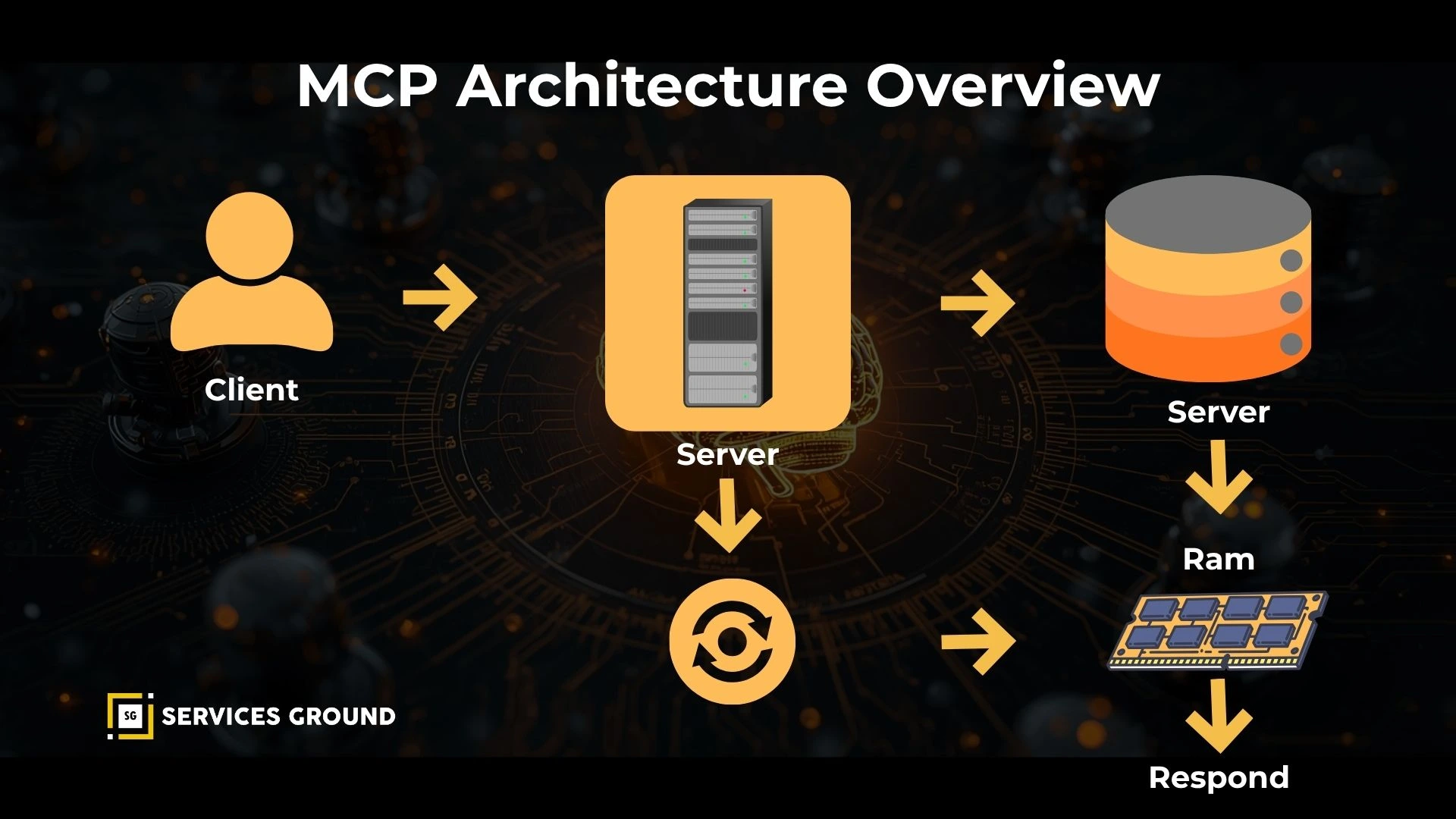

MCP organizes communication between clients, servers, and models through structured JSON messages, which the specification frames as JSON-RPC 2.0.

The Core Components

| Component | Role | Description |
|---|---|---|
| MCP Server | Context manager | Stores, retrieves, and exposes shared context (resources, prompts) and tools to connected clients |
| MCP Client | Bridge | Runs inside the host application and connects its model to an MCP server, sending requests and receiving structured data |
| Transport Layer | Messaging route | Carries messages between client and server (stdio for local servers, HTTP for remote ones) |
| Schema Definitions | Ruleset | Ensures every exchange follows an agreed JSON structure (JSON-RPC 2.0 messages plus MCP's JSON Schema) |
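For local servers, the MCP specification's stdio transport sends each JSON-RPC message as one line of UTF-8 JSON, so a message must not contain raw newlines. The sketch below shows that framing over any byte stream; io.BytesIO stands in for a subprocess's stdin and stdout, and the function names are hypothetical.

```python
from __future__ import annotations

import io
import json
from typing import Any, BinaryIO, Iterator


def write_message(stream: BinaryIO, message: dict[str, Any]) -> None:
    """Write one message as a single newline-terminated line of UTF-8 JSON."""
    # json.dumps escapes newlines inside strings, so each message stays on one line.
    line = json.dumps(message, separators=(",", ":"))
    stream.write(line.encode("utf-8") + b"\n")


def read_messages(stream: BinaryIO) -> Iterator[dict[str, Any]]:
    """Yield messages from a newline-delimited stream, skipping blank lines."""
    for raw in stream:
        raw = raw.strip()
        if raw:
            yield json.loads(raw.decode("utf-8"))


if __name__ == "__main__":
    pipe = io.BytesIO()
    write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
    write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})
    pipe.seek(0)  # rewind so the "other side" can read what was written
    for msg in read_messages(pipe):
        print(msg["method"])
```

Newline framing keeps the stdio transport simple: a reader never needs a length prefix, only a line reader.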

Example Communication Flow

- The client connects to an MCP server.

- A model requests context for the current user/session.

- The server returns structured JSON context.

- The model generates a response using that context.

- The updated session is logged for reuse.

```json
{
  "type": "context_request",
  "session_id": "sg-2025-004",
  "requested_fields": ["user_intent", "previous_actions", "tools_used"]
}
```
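Steps two through five of that flow can be simulated in one process. This is a toy sketch: the server is a dictionary keyed by session id, it answers the context_request message above with only the requested fields, and the context_response type, the session contents, and fake_model are hypothetical stand-ins rather than parts of MCP or any SDK.

```python
from __future__ import annotations

import json
from typing import Any

# Hypothetical server-side state: session id -> stored context fields.
SESSIONS: dict[str, dict[str, Any]] = {
    "sg-2025-004": {
        "user_intent": "summarize quarterly sales",
        "previous_actions": ["loaded sales.csv"],
        "tools_used": ["csv_reader"],
    }
}


def handle_context_request(raw: str) -> str:
    """Steps 2-3: answer a context_request with only the requested fields."""
    request = json.loads(raw)
    session = SESSIONS.get(request["session_id"], {})
    context = {name: session.get(name) for name in request["requested_fields"]}
    return json.dumps({"type": "context_response", "session_id": request["session_id"], "context": context})


def fake_model(prompt: str, context: dict[str, Any]) -> str:
    """Step 4: placeholder for a real model call that uses the context."""
    return f"{prompt} (intent: {context.get('user_intent')})"


def log_turn(session_id: str, action: str) -> None:
    """Step 5: record the new action so later requests can reuse it."""
    SESSIONS.setdefault(session_id, {}).setdefault("previous_actions", []).append(action)


if __name__ == "__main__":
    request = json.dumps({
        "type": "context_request",
        "session_id": "sg-2025-004",
        "requested_fields": ["user_intent", "previous_actions", "tools_used"],
    })
    response = json.loads(handle_context_request(request))
    print(fake_model("Drafting summary", response["context"]))
    log_turn("sg-2025-004", "drafted summary")
    print(SESSIONS["sg-2025-004"]["previous_actions"])
```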

MCP Servers — The Context Backbone

In this architecture, the MCP server is the heart of interoperability. It maintains the state of each session, from user goals to tool responses, and ensures that all connected clients have access to consistent information.

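Example (Python sketch using FastMCP from the official mcp SDK)

```python
# Minimal sketch, assuming the official MCP Python SDK is installed (pip install mcp).
from mcp.server.fastmcp import FastMCP

# Plain dict standing in for a persistent context store (not part of MCP itself).
context_store: dict[str, str] = {}
server = FastMCP("context-server")


@server.tool()
def update_context(session_id: str, content: str) -> str:
    """Save the latest context for a session so other clients can reuse it."""
    context_store[session_id] = content
    return f"saved context for {session_id}"


if __name__ == "__main__":
    server.run()  # serves over stdio by default
```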

This allows agents to store, retrieve, and update memory while maintaining continuity across different sessions and environments.
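At its core, a server like this dispatches incoming method names to handlers. The sketch below mimics the update handler above with a hypothetical ContextServer class and hypothetical context/update and context/get methods (they are not defined by the MCP specification). It assumes every incoming message is a request with an id, and answers unknown methods with the standard JSON-RPC error code -32601, "Method not found".

```python
from __future__ import annotations

import json
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], Any]


class ContextServer:
    """Hypothetical minimal server: maps JSON-RPC method names to handlers."""

    def __init__(self) -> None:
        self.store: dict[str, Any] = {}
        self.handlers: dict[str, Handler] = {}

    def method(self, name: str) -> Callable[[Handler], Handler]:
        """Decorator that registers a handler under a method name."""
        def register(fn: Handler) -> Handler:
            self.handlers[name] = fn
            return fn
        return register

    def handle(self, raw: str) -> str:
        msg = json.loads(raw)
        handler = self.handlers.get(msg["method"])
        if handler is None:
            error = {"code": -32601, "message": "Method not found"}
            return json.dumps({"jsonrpc": "2.0", "id": msg.get("id"), "error": error})
        result = handler(msg.get("params", {}))
        return json.dumps({"jsonrpc": "2.0", "id": msg.get("id"), "result": result})


server = ContextServer()


@server.method("context/update")
def update_context(params: dict[str, Any]) -> dict[str, Any]:
    server.store[params["session_id"]] = params["content"]
    return {"saved": True}


@server.method("context/get")
def get_context(params: dict[str, Any]) -> dict[str, Any]:
    return {"content": server.store.get(params["session_id"])}


if __name__ == "__main__":
    print(server.handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "context/update",
                                    "params": {"session_id": "s1", "content": "likes dark mode"}})))
    print(server.handle(json.dumps({"jsonrpc": "2.0", "id": 2, "method": "context/get",
                                    "params": {"session_id": "s1"}})))
```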

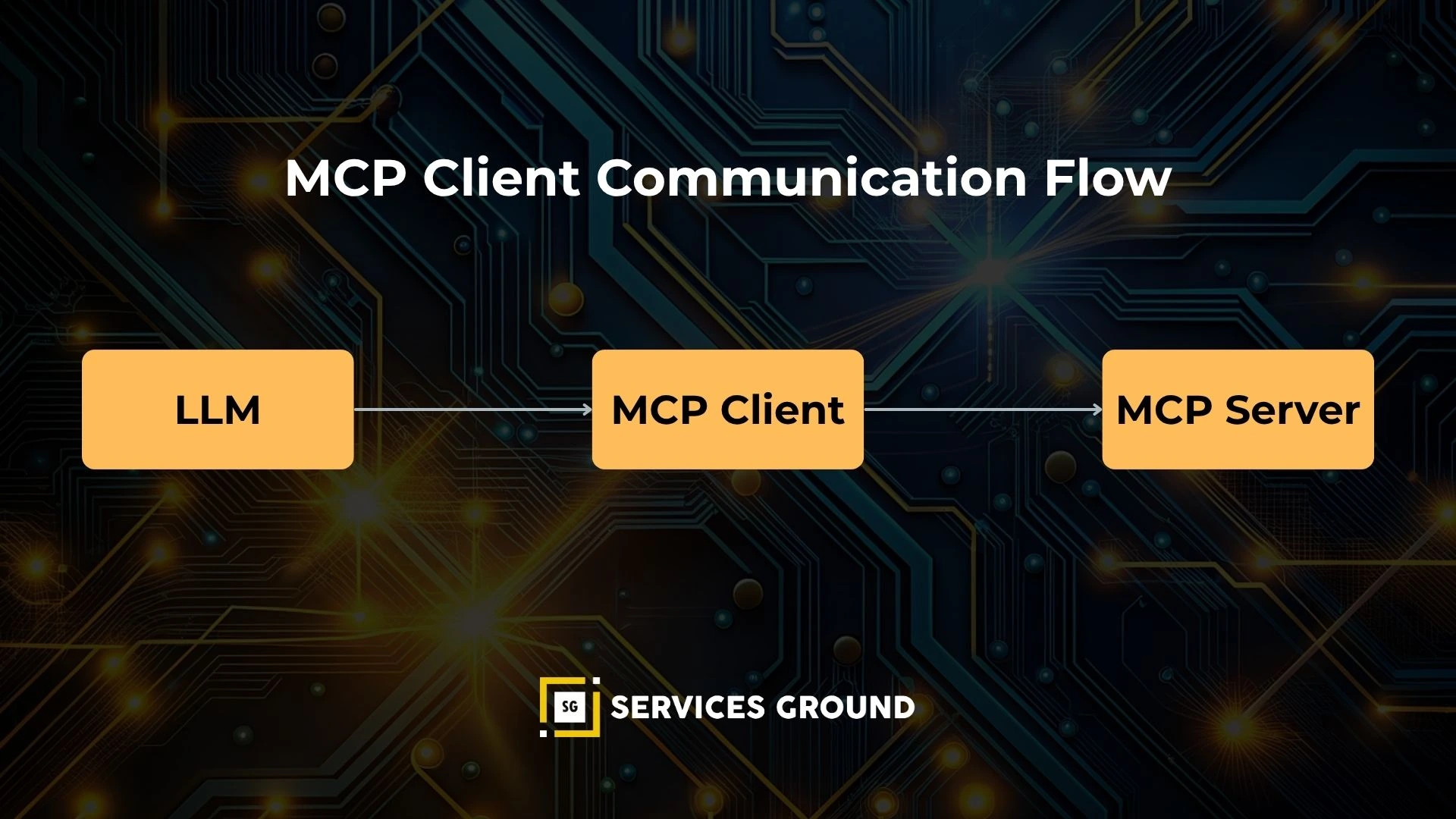

MCP Clients — The Model-Side Interface

An MCP client acts as the model’s gateway to shared context. It can request, send, or synchronize data with servers, enabling models to stay “aware” of their environment.

Example — Connecting a Client to an MCP Server

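```python
# Illustrative pseudocode: Client and fetch_context are placeholders,
# not classes or methods of the official MCP Python SDK.
from mcp import Client

client = Client("wss://context.server.io")
context = client.fetch_context("session_001")
print(context["recent_actions"])
```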
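Much of a client's job is numbering requests and matching each reply to its id. A minimal sketch, assuming a hypothetical transport function that sends one JSON-RPC message and returns the reply; a real MCP client would run over stdio or HTTP and would first complete the initialize handshake. ContextClient, fake_transport, and the context/get method are illustrative names.

```python
from __future__ import annotations

import itertools
import json
from typing import Any, Callable

Transport = Callable[[str], str]  # sends one raw message, returns the raw reply


class ContextClient:
    """Hypothetical client wrapper: assigns ids and unwraps results."""

    def __init__(self, transport: Transport) -> None:
        self._transport = transport
        self._ids = itertools.count(1)

    def call(self, method: str, params: dict[str, Any]) -> Any:
        req_id = next(self._ids)
        raw = self._transport(json.dumps(
            {"jsonrpc": "2.0", "id": req_id, "method": method, "params": params}))
        reply = json.loads(raw)
        if reply.get("id") != req_id:
            raise RuntimeError(f"response id {reply.get('id')} does not match request {req_id}")
        if "error" in reply:
            raise RuntimeError(reply["error"]["message"])
        return reply["result"]

    def fetch_context(self, session_id: str) -> dict[str, Any]:
        # "context/get" is a hypothetical method name, not part of the MCP spec.
        return self.call("context/get", {"session_id": session_id})


def fake_transport(raw: str) -> str:
    """Stand-in server that returns a canned context for any session."""
    request = json.loads(raw)
    result = {"recent_actions": ["opened report.pdf"], "session_id": request["params"]["session_id"]}
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


if __name__ == "__main__":
    client = ContextClient(fake_transport)
    context = client.fetch_context("session_001")
    print(context["recent_actions"])  # ['opened report.pdf']
```

Injecting the transport as a plain function keeps the id-matching logic testable without a network or subprocess.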

Real-World Integrations:

- OpenAI: The Agents SDK and the Responses API can connect GPT models to MCP servers.

- Anthropic: Claude Desktop and Claude Code act as MCP clients, connecting Claude to local and remote servers.

- VS Code / Cursor: Use MCP clients to give coding assistants access to project files, tools, and developer context.

MCP SDK & Integration with Frameworks

Developers can extend MCP through SDKs — lightweight libraries that simplify client–server setup and schema validation.

SDKs also allow direct interoperability with agent frameworks such as LangChain and Semantic Kernel, and with model platforms such as Gemini.

Example

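```python
# Illustrative pseudocode: MCPConnector and this Agent signature are placeholders,
# not APIs of a published MCP SDK or of LangChain.
from mcp_sdk import MCPConnector
from langchain import Agent

connector = MCPConnector("https://mcp-server.company/api")
agent = Agent(context_provider=connector)
agent.run("Summarize recent user interactions.")
```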

This bridges the gap between context sharing and real-time agentic reasoning.
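The value of the connector is that the agent sees one interface for pulling context into each prompt. A minimal sketch, assuming a hypothetical ContextProvider protocol with a single get_context method; neither it nor the Agent class below is a LangChain or MCP SDK API, the word-overlap filter is a placeholder for real retrieval, and the model call is stubbed out.

```python
import re
from typing import Protocol


class ContextProvider(Protocol):
    """Hypothetical interface an agent uses to fetch context for a task."""

    def get_context(self, query: str) -> list[str]: ...


def _words(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


class InMemoryProvider:
    """Stand-in for an MCP-backed connector that holds interaction notes."""

    def __init__(self, notes: list[str]) -> None:
        self.notes = notes

    def get_context(self, query: str) -> list[str]:
        # Placeholder relevance test: keep notes sharing at least one word with the query.
        query_words = _words(query)
        return [note for note in self.notes if query_words & _words(note)]


class Agent:
    """Hypothetical agent that injects provider context into its prompt."""

    def __init__(self, context_provider: ContextProvider) -> None:
        self.context_provider = context_provider

    def run(self, task: str) -> str:
        notes = self.context_provider.get_context(task)
        bullet_list = "\n".join(f"- {note}" for note in notes) or "- (no context)"
        prompt = f"Context:\n{bullet_list}\n\nTask: {task}"
        # A real agent would send the prompt to a model; this sketch returns it.
        return prompt


if __name__ == "__main__":
    provider = InMemoryProvider(["User asked about pricing", "Invoice sent to finance"])
    agent = Agent(context_provider=provider)
    print(agent.run("Summarize recent user interactions."))
```

Because Agent depends only on the protocol, swapping the in-memory provider for one backed by an MCP server would not change the agent code.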

MCP vs SDK vs API — Understanding the Difference

| Feature | MCP (Protocol) | SDK | API |
|---|---|---|---|
| Purpose | Defines the message and context standard | Developer library to connect systems | Endpoint for data requests |
| Scope | Cross-tool and multi-model | Language- or framework-specific | Application-specific |
| Example | MCP specification | MCP Python SDK, LangChain SDK | OpenAI REST API |

MCP doesn’t replace APIs; it coordinates them.
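In practice, an MCP server usually coordinates APIs by wrapping existing calls as tools. The MCP specification describes each tool with a name, a description, and a JSON Schema inputSchema, and clients invoke tools through a tools/call request whose result holds a list of content items. The sketch below derives such descriptors from plain Python functions; get_weather is a hypothetical stand-in for a REST call, and the type mapping covers only a few basic types.

```python
import inspect
from typing import Any, Callable

# Simplified mapping from Python annotations to JSON Schema types.
JSON_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean"}
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so it can be listed and called as a tool."""
    TOOLS[fn.__name__] = fn
    return fn


def describe(fn: Callable[..., Any]) -> dict[str, Any]:
    """Build a tools/list entry: name, description, and JSON Schema input."""
    params = inspect.signature(fn).parameters
    props = {name: {"type": JSON_TYPES.get(p.annotation, "string")} for name, p in params.items()}
    required = [name for name, p in params.items() if p.default is inspect.Parameter.empty]
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "inputSchema": {"type": "object", "properties": props, "required": required},
    }


def call_tool(params: dict[str, Any]) -> dict[str, Any]:
    """Handle tools/call params by invoking the registered function."""
    result = TOOLS[params["name"]](**params.get("arguments", {}))
    return {"content": [{"type": "text", "text": str(result)}]}


@tool
def get_weather(city: str, days: int = 1) -> str:
    """Return a forecast summary (stub for a real weather API call)."""
    return f"{city}: sunny for {days} day(s)"


if __name__ == "__main__":
    print(describe(get_weather)["inputSchema"])
    print(call_tool({"name": "get_weather", "arguments": {"city": "Oslo", "days": 3}}))
```

The underlying API stays untouched; the server only adds a self-describing layer that any MCP client can discover and call.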

Context Windows and AI Interoperability

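Context windows define how much text, measured in tokens, an AI model can process in a single request.

MCP does not enlarge that window. Instead, it lets a model pull relevant context from external servers when needed, which supports continuity beyond the native window size: long-term session awareness and persistent memory.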

Example: GPT-4o has a 128K-token context window, but an MCP server can supply structured recall from previous sessions stored outside the model.
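That kind of recall can be sketched as budgeted retrieval: rank stored snippets against the current query, then add the best matches until a token budget is spent. This assumes a crude four-characters-per-token estimate and word-overlap scoring in place of a real tokenizer and embedding search; the function names and budget values are illustrative.

```python
import re


def estimate_tokens(text: str) -> int:
    """Rough heuristic (about 4 characters per token); real tokenizers differ."""
    return max(1, len(text) // 4)


def words(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def select_context(query: str, snippets: list[str], budget: int) -> list[str]:
    """Greedily pick the most relevant snippets that fit within the token budget."""
    query_words = words(query)
    # Rank by word overlap; sorted() is stable, so ties keep their original order.
    ranked = sorted(snippets, key=lambda s: len(query_words & words(s)), reverse=True)
    chosen: list[str] = []
    used = 0
    for snippet in ranked:
        cost = estimate_tokens(snippet)
        # Skip irrelevant snippets and any snippet that would overflow the budget.
        if not query_words & words(snippet) or used + cost > budget:
            continue
        chosen.append(snippet)
        used += cost
    return chosen


if __name__ == "__main__":
    history = [
        "User prefers metric units.",
        "User's project is a weather dashboard.",
        "Discussed pricing tiers last week.",
    ]
    print(select_context("Which units should the weather dashboard use?", history, budget=20))
```

Only the selected snippets enter the prompt, so the model's window holds what matters now while the full history stays on the server.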

Real-World Use Cases

- Multi-Agent Collaboration: Different AI models sharing unified goals and state.

- Persistent Conversations: Long-term chat memory without re-prompting.

- Enterprise Compliance: Auditable logs of AI–user interactions.

- Developer Tools: VS Code or Cursor sharing context via MCP clients.

Benefits and Challenges

Benefits

- Seamless interoperability

- Centralized context management

- Consistent schema validation

- Reduced token redundancy

- Vendor-neutral standardization

Challenges

- Versioning across frameworks

- Security of shared context

- Standard adoption across vendors

The Future of MCP and AI Interoperability

MCP is poised to become the backbone of interoperable, multi-model intelligence.

As MCP support matures in OpenAI's and Anthropic's tooling and in frameworks such as LlamaIndex, developers will increasingly rely on these standards to unify reasoning, planning, and context.

Just as HTTP standardized the web, MCP aims to standardize how AI systems share context and communicate.

Conclusion — Building the Backbone of Interoperable AI

The Model Context Protocol is more than a specification; it’s the infrastructure of collective intelligence.

By connecting reasoning models, memory servers, and developer tools through a single standard, MCP transforms isolated LLMs into coordinated agent networks.

For developers building the next generation of autonomous, context-aware AI systems, MCP isn’t optional; it’s essential.

Frequently Asked Questions

What is the Model Context Protocol (MCP)?

It’s a communication standard that defines how AI systems exchange context and data in a structured, interoperable format.

What does an MCP server do?

It stores and manages contextual data for sessions, ensuring that multiple agents and clients share consistent memory.

What does an MCP client do?

A client connects models to the MCP server, fetching and updating context as the conversation or task evolves.

How is MCP different from an SDK?

MCP defines the standard; SDKs implement it in code, making it easier for developers to integrate.

How does MCP relate to context windows?

A context window sets how much a model can process in one request; MCP lets models reach context beyond that window by fetching it from external servers across sessions.