Introduction — Why MCP Matters in the Era of Agentic AI

As AI agents become more autonomous, they need a way to share context, resources, and tools across systems.

The Model Context Protocol (MCP) was designed to solve this exact challenge — it allows AI clients, servers, and models to communicate in a standardized, schema-driven way.

MCP turns fragmented integrations into a governed ecosystem where reasoning, data, and execution coexist safely.

Instead of every agent inventing its own API logic, MCP defines how models exchange structured context and invoke external tools without ambiguity.

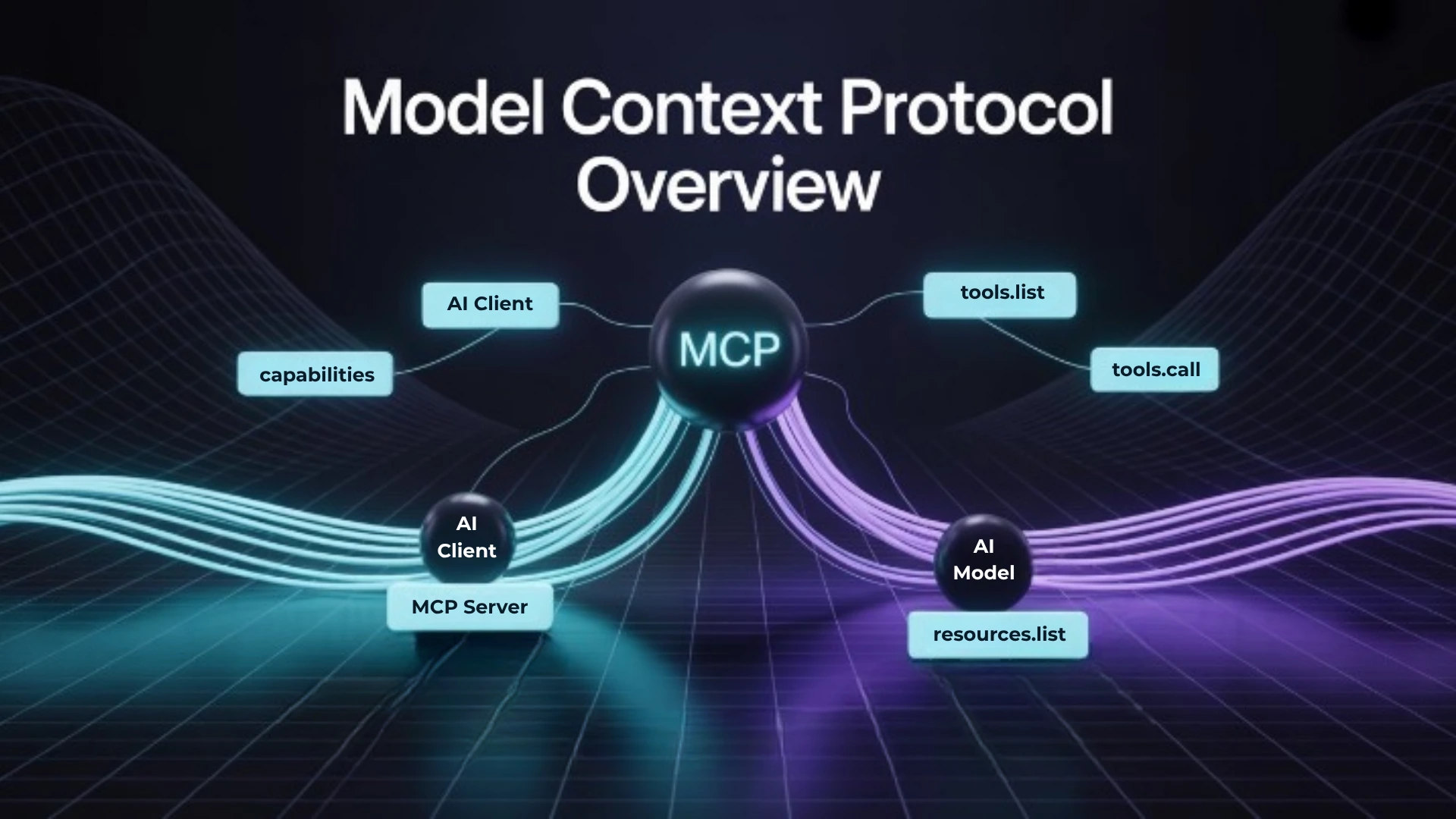

What Is Model Context Protocol (MCP)?

MCP is an open interoperability standard that defines how AI clients and servers share:

- Capabilities — what tools or resources are available

- Schemas — the expected structure for inputs and outputs

- Messages — how actions and responses are communicated

- Context — memory, metadata, and security constraints

It works as a universal handshake between models and external systems.

With MCP, an AI agent can:

- Discover and call tools it has never seen before

- Fetch structured knowledge safely

- Stream results and feedback through a common protocol

MCP is to agents what HTTP was to browsers — the connective tissue for intelligent interoperability.

MCP vs SDK — The Interoperability Mindset

An SDK is a toolkit for interacting with one specific service.

MCP, on the other hand, defines a universal language for any service to be discoverable, callable, and verifiable by any AI client.

| Aspect | SDK | MCP |
|---|---|---|
| Purpose | Bind to a specific API | Enable universal AI interop |
| Schema | Defined per library | JSON Schema (universal) |
| Usage | Developers write calls | Agents discover and call dynamically |
| Validation | Manual | Automated with schema |
| Governance | Application-level | Protocol-level |

In short, SDKs implement capabilities; MCP connects them.

That distinction makes MCP the cornerstone for AI-to-AI and AI-to-system communication.

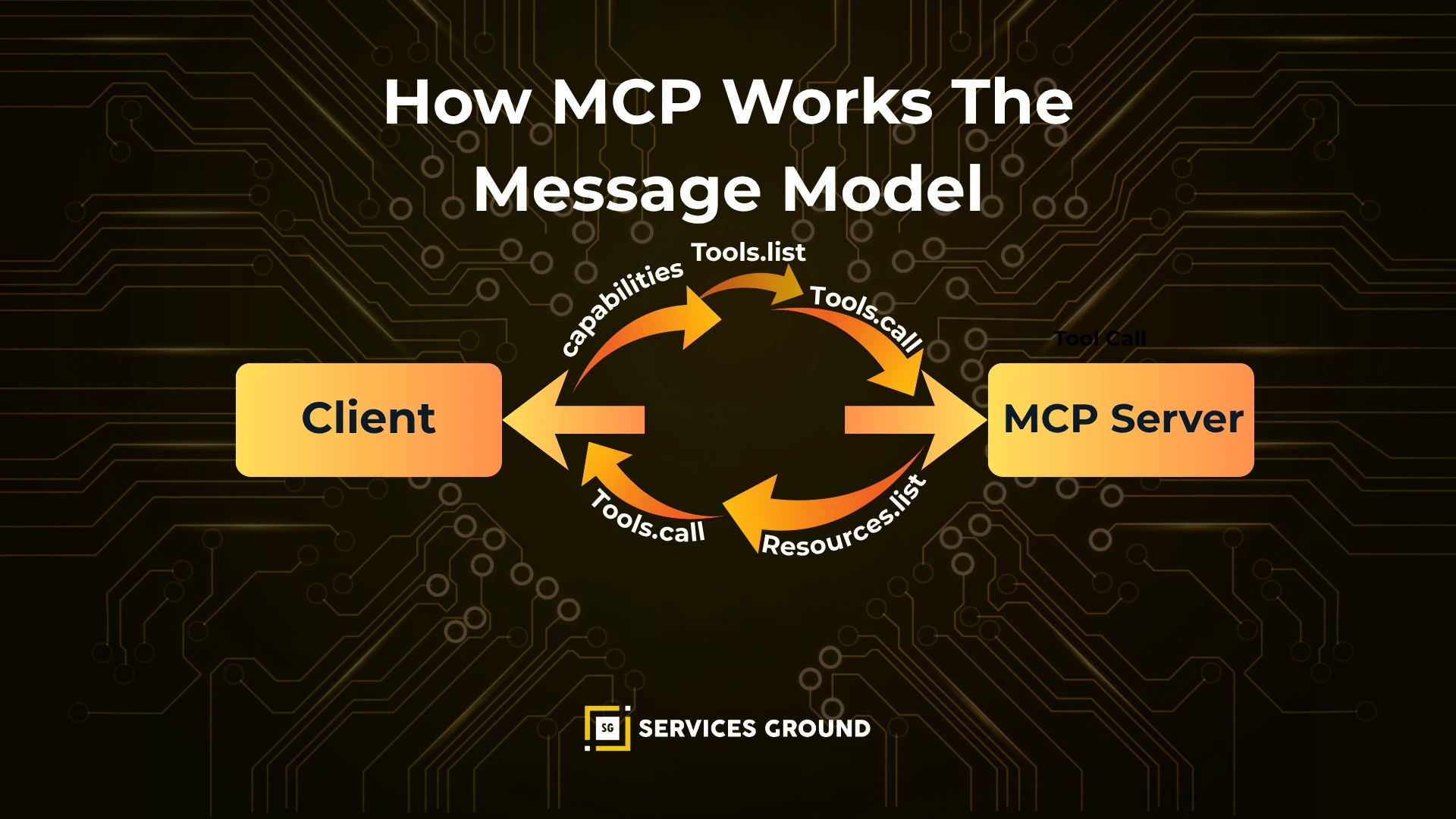

How MCP Works — The Message Model

Every MCP implementation revolves around a few standard JSON-RPC 2.0 messages:

- initialize → negotiates the protocol version and each side's capabilities

- tools/list → enumerates callable tools

- tools/call → executes a tool with structured input

- resources/list → provides resource metadata

- notifications and logging → report progress, changes, and errors

Example Tool Descriptor

{ "name": "read_file", "description": "Read a UTF-8 file from workspace", "inputSchema": { "type": "object", "properties": { "path": { "type": "string", "pattern": "^/workspace/" } }, "required": ["path"] }}

This JSON defines an executable tool that any compliant MCP client can call — no special SDK needed.
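To make the message model concrete, here is a minimal sketch of how a client might frame two calls as JSON-RPC 2.0 requests, the envelope MCP uses on the wire. Method names in the specification are written with a slash (`tools/list`, `tools/call`). The `makeRequest` helper and the id counter are illustrative assumptions, not part of any SDK.

```javascript
// Sketch: framing MCP calls as JSON-RPC 2.0 requests.
// makeRequest and the id counter are illustrative helpers, not an SDK API.
let nextId = 1;

function makeRequest(method, params) {
  const message = { jsonrpc: "2.0", id: nextId++, method };
  if (params !== undefined) message.params = params;
  return message;
}

// Ask the server which tools it exposes.
const listRequest = makeRequest("tools/list");

// Invoke the read_file tool from the descriptor above with structured arguments.
const callRequest = makeRequest("tools/call", {
  name: "read_file",
  arguments: { path: "/workspace/README.md" },
});

console.log(JSON.stringify(listRequest));
console.log(JSON.stringify(callRequest, null, 2));
```

The server answers each request with a response carrying the same `id`, which is how a client matches replies to calls when several are in flight.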

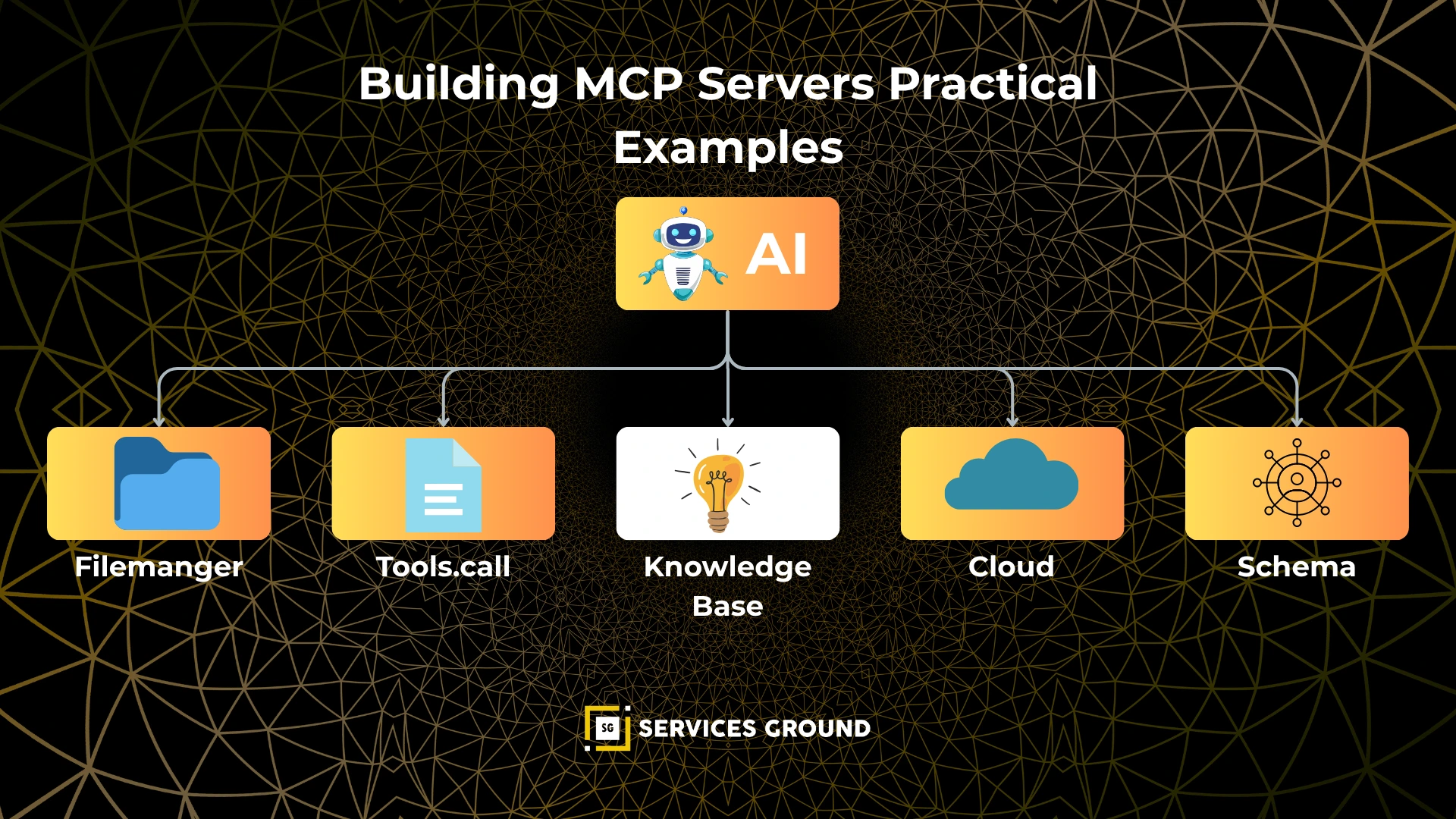

Building MCP Servers — Practical Examples

Each MCP server exposes a defined set of tools and schemas for client use.

Let’s explore how to create real-world servers across different domains.

1) Filesystem Server

Exposes read-only file operations for agents.

const tools = [ { name: "read_file", description: "Read a UTF-8 file", inputSchema: { type: "object", properties: { path: { type: "string" } }, required: ["path"] } }];

Good for code assistants, config inspectors, or system auditors.
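A descriptor alone does not keep an agent inside the workspace, so the handler needs its own guard. The following is a rough sketch of one way to do that. `WORKSPACE_ROOT`, `resolveWorkspacePath`, and the injected `readText` function are hypothetical names chosen for this example, and the result shape (a `content` array of text items) follows the tool-result format described in the MCP specification.

```javascript
// Sketch: a read_file handler that refuses paths outside a workspace root.
// WORKSPACE_ROOT, resolveWorkspacePath, and readText are hypothetical names.
const WORKSPACE_ROOT = "/workspace";

function resolveWorkspacePath(requested) {
  if (typeof requested !== "string" || !requested.startsWith("/")) {
    throw new Error("path must be an absolute string");
  }
  // Collapse "." and ".." segments so "/workspace/../etc" cannot slip through.
  const parts = [];
  for (const segment of requested.split("/")) {
    if (segment === "" || segment === ".") continue;
    if (segment === "..") parts.pop();
    else parts.push(segment);
  }
  const resolved = "/" + parts.join("/");
  if (!resolved.startsWith(WORKSPACE_ROOT + "/")) {
    throw new Error("path escapes the workspace: " + requested);
  }
  return resolved;
}

// readText is injected (for example a wrapper around fs.readFileSync(path, "utf8"))
// so the guard can be exercised without touching the real filesystem.
function handleReadFile(args, readText) {
  const safePath = resolveWorkspacePath(args.path);
  return { content: [{ type: "text", text: readText(safePath) }] };
}

console.log(resolveWorkspacePath("/workspace/src/./app/../index.js")); // "/workspace/src/index.js"
```

This check is purely lexical. It does not follow symlinks, so a production server would also want to resolve the real path on disk before reading.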

2) Git Server

Lets an agent run git_status, git_diff, or even controlled git_commit operations with strict validation.

Useful for autonomous pull request bots or AI code reviewers.
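One way to get "strict validation" is to never let the agent supply raw git arguments. The sketch below maps each tool name from the text to a fixed argument template and marks the write operation as needing approval. The templates, validators, and the 200-character limit are assumptions made for illustration.

```javascript
// Sketch: map each exposed tool to a fixed git argument template.
// Tool names come from the text; templates and validators are assumptions.
const GIT_TOOLS = {
  git_status: { build: () => ["status", "--porcelain"], needsApproval: false },
  git_diff: {
    build: (args) => ["diff", "--", requireSafePath(args.path)],
    needsApproval: false,
  },
  git_commit: {
    build: (args) => ["commit", "-m", requireMessage(args.message)],
    needsApproval: true, // writes history, so gate it behind a human
  },
};

function requireSafePath(path) {
  // Reject option-like values, absolute paths, and anything that climbs out of the repo.
  if (typeof path !== "string" || path.startsWith("-") || path.startsWith("/") ||
      path.split("/").includes("..")) {
    throw new Error("unsafe path");
  }
  return path;
}

function requireMessage(message) {
  if (typeof message !== "string" || message.trim() === "" || message.length > 200) {
    throw new Error("commit message must be 1-200 characters");
  }
  return message;
}

function buildGitArgv(toolName, args, approved = false) {
  if (!Object.prototype.hasOwnProperty.call(GIT_TOOLS, toolName)) {
    throw new Error("unknown tool: " + toolName);
  }
  const tool = GIT_TOOLS[toolName];
  if (tool.needsApproval && !approved) {
    throw new Error(toolName + " requires human approval");
  }
  return tool.build(args);
}

console.log(buildGitArgv("git_diff", { path: "src/index.js" }));
```

The returned array is meant to be passed to something like `child_process.execFile("git", argv)`, which avoids a shell and therefore shell injection.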

3) Web Fetch Server

Allows safe, policy-controlled HTTP access:

{ "name": "http_fetch", "description": "GET a URL within allowlist" }

Restrict domains and content sizes — ideal for context retrieval in agentic reasoning.
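As a sketch of what that policy could look like in code, the check below parses the URL first and compares the hostname exactly. The hosts in `ALLOWED_HOSTS` and the `MAX_BYTES` cap are example values, not defaults from any specification.

```javascript
// Sketch: policy check for an http_fetch tool.
// ALLOWED_HOSTS and MAX_BYTES are example values chosen for illustration.
const ALLOWED_HOSTS = new Set(["docs.example.com", "api.example.com"]);
const MAX_BYTES = 1000000;

function checkFetchPolicy(rawUrl) {
  let url;
  try {
    url = new URL(rawUrl);
  } catch {
    return { allowed: false, reason: "not a valid absolute URL" };
  }
  if (url.protocol !== "https:") {
    return { allowed: false, reason: "only https is allowed" };
  }
  // Compare the parsed hostname, not a substring of the raw string, so
  // "https://docs.example.com.evil.test/" does not match.
  if (!ALLOWED_HOSTS.has(url.hostname)) {
    return { allowed: false, reason: "host not in allowlist" };
  }
  return { allowed: true, url: url.href };
}

function withinSizeCap(contentLengthHeader) {
  if (contentLengthHeader == null || contentLengthHeader === "") return false;
  const size = Number(contentLengthHeader);
  return Number.isFinite(size) && size >= 0 && size <= MAX_BYTES;
}

console.log(checkFetchPolicy("https://docs.example.com/guide"));
console.log(checkFetchPolicy("https://docs.example.com.evil.test/"));
```

A `Content-Length` header can be missing or wrong, so a real server would also stop reading once the body exceeds the cap.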

4) Knowledge Base Server

Performs vector similarity searches over FAISS or Pinecone indexes to retrieve relevant documents.

Used for grounded AI answers in customer support or enterprise knowledge systems.
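The core of such a server is a nearest-neighbor lookup. The sketch below uses a brute-force cosine-similarity scan as a stand-in for a real index such as FAISS or Pinecone. The document ids and three-dimensional vectors are made-up toy data; real embeddings would come from an embedding model and have hundreds of dimensions.

```javascript
// Sketch: brute-force cosine-similarity search standing in for a vector index.
// The documents and their 3-dimensional vectors are toy data for illustration.
function cosineSimilarity(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / Math.sqrt(normA * normB);
}

function searchKnowledgeBase(queryVector, documents, topK = 2) {
  return documents
    .map((doc) => ({ id: doc.id, score: cosineSimilarity(queryVector, doc.vector) }))
    .sort((x, y) => y.score - x.score) // highest similarity first
    .slice(0, topK);
}

const documents = [
  { id: "refund-policy", vector: [0.9, 0.1, 0.0] },
  { id: "shipping-times", vector: [0.1, 0.9, 0.0] },
  { id: "api-limits", vector: [0.0, 0.2, 0.9] },
];

const hits = searchKnowledgeBase([1, 0, 0], documents);
console.log(hits.map((h) => h.id)); // refund-policy first, then shipping-times
```

An MCP tool wrapping this would take the query text, embed it, run the search, and return the matching passages as tool output.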

5) Cloud Storage Server

Connects agents to cloud objects with signed URLs or metadata queries — read-only, audited, and secure.

MCP Clients — Discovering and Calling Tools

Clients are the consumers of these MCP services.

They:

- Send initialize to negotiate capabilities, then tools/list to enumerate available tools

- Parse each tool’s JSON Schema

- Send structured tools/call requests

- Log responses for observability

Because the protocol is standardized, a single client can interact with any compliant MCP server.
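The client flow can be sketched without committing to a transport. In the example below, `send` is any function that delivers a JSON-RPC request and resolves with the response, and `fakeServer` is an in-memory stand-in so the flow runs on its own. Both names, the `echo` tool, and the version string are assumptions for illustration; the method names (`initialize`, `tools/list`, `tools/call`) follow the MCP specification.

```javascript
// Sketch: a transport-agnostic client flow (initialize, list tools, call one).
// createClient, fakeServer, and the echo tool are illustrative, not an SDK API.
function createClient(send) {
  let nextId = 1;
  async function request(method, params) {
    const response = await send({ jsonrpc: "2.0", id: nextId++, method, params });
    if (response.error) throw new Error(method + " failed: " + response.error.message);
    return response.result;
  }
  return {
    // A real client also sends a notifications/initialized notification afterwards.
    initialize: () => request("initialize", {
      protocolVersion: "2025-03-26", // example version string
      capabilities: {},
      clientInfo: { name: "example-client", version: "0.1.0" },
    }),
    listTools: async () => (await request("tools/list")).tools,
    callTool: (name, args) => request("tools/call", { name, arguments: args }),
  };
}

// In-memory stand-in for a server so the sketch needs no network.
async function fakeServer(message) {
  const reply = (result) => ({ jsonrpc: "2.0", id: message.id, result });
  switch (message.method) {
    case "initialize":
      return reply({
        protocolVersion: message.params.protocolVersion,
        capabilities: { tools: {} },
        serverInfo: { name: "fake-server", version: "0.0.1" },
      });
    case "tools/list":
      return reply({ tools: [{
        name: "echo",
        description: "Echo text back",
        inputSchema: { type: "object", properties: { text: { type: "string" } }, required: ["text"] },
      }] });
    case "tools/call":
      return reply({ content: [{ type: "text", text: message.params.arguments.text }] });
    default:
      return { jsonrpc: "2.0", id: message.id, error: { code: -32601, message: "Method not found" } };
  }
}

async function demo() {
  const client = createClient(fakeServer);
  await client.initialize();
  const tools = await client.listTools();
  const result = await client.callTool(tools[0].name, { text: "hello" });
  return { toolNames: tools.map((t) => t.name), text: result.content[0].text };
}

demo().then((out) => console.log(out));
```

Swapping `fakeServer` for a function that writes to a subprocess or an HTTP endpoint leaves the rest of the client unchanged, which is the point of a standardized protocol.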

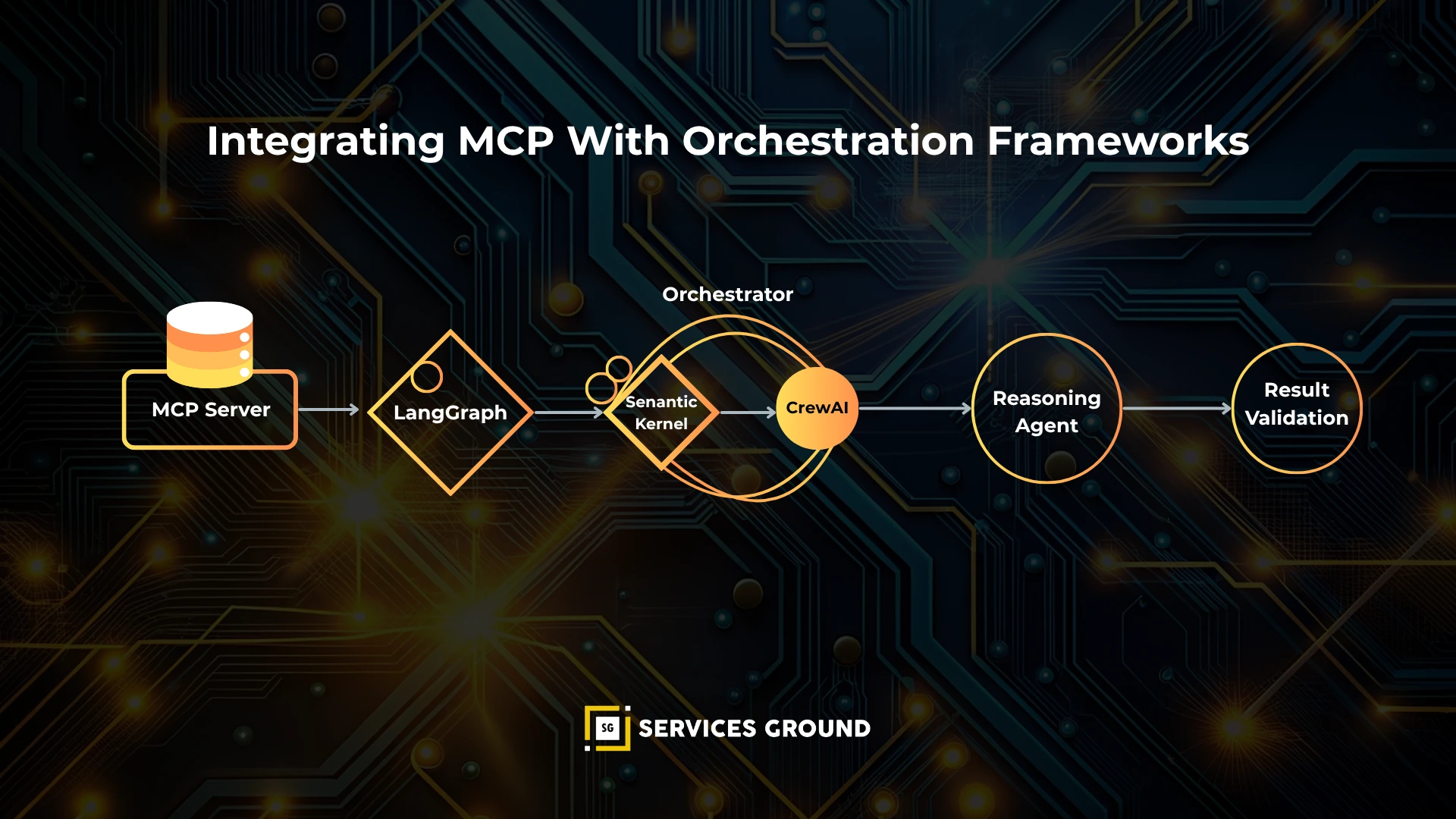

Integrating MCP With Orchestration Frameworks

LangGraph

Wrap MCP calls as graph nodes.

Each node represents one server’s tool, connected via reasoning edges — enabling multi-step orchestration.
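The idea of "a tool call as a node" can be shown without any framework. The sketch below deliberately does not use a real LangGraph API: a node is just an async function from state to a partial state update, and `runPipeline` is a toy stand-in for a graph runtime. `makeToolNode`, `stubCallTool`, and the `summarize` tool are hypothetical names.

```javascript
// Sketch: wrap an MCP tool call as a "node" (state in, partial state update out).
// Nothing here is a LangGraph API; all names are hypothetical.
function makeToolNode(callTool, toolName, pickArgs, outputKey) {
  return async function node(state) {
    const result = await callTool(toolName, pickArgs(state));
    return { [outputKey]: result };
  };
}

// Toy runtime: run nodes in order, merging each update into the shared state.
async function runPipeline(nodes, initialState) {
  let state = { ...initialState };
  for (const node of nodes) {
    state = { ...state, ...(await node(state)) };
  }
  return state;
}

// Stub tool backend so the sketch runs on its own.
async function stubCallTool(name, args) {
  if (name === "http_fetch") return "contents of " + args.url;
  if (name === "summarize") return "summary(" + args.text + ")";
  throw new Error("unknown tool: " + name);
}

const fetchNode = makeToolNode(stubCallTool, "http_fetch", (s) => ({ url: s.url }), "page");
const summarizeNode = makeToolNode(stubCallTool, "summarize", (s) => ({ text: s.page }), "summary");

runPipeline([fetchNode, summarizeNode], { url: "https://docs.example.com" })
  .then((finalState) => console.log(finalState.summary));
// prints "summary(contents of https://docs.example.com)"
```

In a real graph framework the same node functions would be registered with the framework's own builder, and the edges would decide which node runs next instead of a fixed order.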

Semantic Kernel

Map MCP tools to skills.

Apply policy filters and governance hooks for enterprise-grade safety.

CrewAI & Multi-Agent Systems

Assign roles to agents (e.g., Researcher ↔ web-fetch, Engineer ↔ Git, Librarian ↔ KB).

Use shared memory for context passing and chain-of-thought auditing.

Security and Governance in MCP

MCP’s strength lies in its standardized security model.

Follow these practices for production deployments:

- Use JWTs or mTLS between clients and servers.

- Enforce path and domain allowlists.

- Apply schema-level validation (Ajv, Pydantic, or Zod).

- Add rate limits and data caps.

- Log everything — including args, duration, and return codes.

- Require human-in-loop approvals for critical actions (e.g., commits, deletes).
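Several of these practices can live in one wrapper around tool execution. The following is a minimal sketch, assuming a fixed-window rate limit, an `approve` callback that represents the human decision, and an in-memory audit log. The tool names in `CRITICAL_TOOLS` and all limits are illustrative.

```javascript
// Sketch: wrap tool execution with a rate limit, an approval gate, and an audit log.
// CRITICAL_TOOLS, the limits, and the approve callback are illustrative assumptions.
const CRITICAL_TOOLS = new Set(["git_commit", "delete_file"]);

function createGuard({ maxCallsPerWindow, windowMs, approve, now = Date.now }) {
  const auditLog = [];
  let windowStart = now();
  let callsInWindow = 0;

  function execute(toolName, args, handler) {
    const startedAt = now();
    // Fixed-window rate limit: reset the counter when the window has elapsed.
    if (startedAt - windowStart >= windowMs) {
      windowStart = startedAt;
      callsInWindow = 0;
    }
    let status = "ok";
    let result;
    if (++callsInWindow > maxCallsPerWindow) {
      status = "rate_limited";
    } else if (CRITICAL_TOOLS.has(toolName) && !approve(toolName, args)) {
      status = "denied";
    } else {
      try {
        result = handler(args);
      } catch (err) {
        status = "error";
      }
    }
    // Record every attempt, including rejected ones.
    auditLog.push({ toolName, args, status, durationMs: now() - startedAt });
    return { status, result };
  }

  return { execute, auditLog };
}

const guard = createGuard({ maxCallsPerWindow: 100, windowMs: 60000, approve: () => false });
console.log(guard.execute("git_commit", { message: "wip" }, () => "committed").status); // "denied"
```

In production the log would go to durable storage and `approve` would block on a real review step, but the ordering stays the same: limit first, then approval, then execution, with every outcome logged.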

Troubleshooting & Versioning Best Practices

| Issue | Likely Cause | Fix |
|---|---|---|
| Tool not visible | Schema error | Revalidate /capabilities response |
| Permission denied | Bad allowlist | Adjust resource scopes |
| Output mismatch | Schema drift | Version your tool descriptors |
| Latency spikes | Large payloads | Enable streaming, paging |
| Unauthorized | Missing token | Rotate and scope credentials |

Version both schemas and capabilities so clients can gracefully adapt over time.
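One possible way to let clients adapt is a simple compatibility check on descriptor versions. The sketch below assumes each descriptor carries a semver-style `MAJOR.MINOR.PATCH` string, which is a convention chosen for this example rather than a field MCP requires. `parseVersion` and `isCompatible` are hypothetical helpers.

```javascript
// Sketch: decide whether a client built against one descriptor version can use another.
// The semver-style version string is an assumed convention, not an MCP requirement.
function parseVersion(version) {
  const match = /^(\d+)\.(\d+)\.(\d+)$/.exec(version);
  if (!match) throw new Error("expected MAJOR.MINOR.PATCH, got: " + version);
  return { major: Number(match[1]), minor: Number(match[2]), patch: Number(match[3]) };
}

function isCompatible(clientExpects, serverOffers) {
  const want = parseVersion(clientExpects);
  const have = parseVersion(serverOffers);
  // A different major version signals a breaking change.
  if (want.major !== have.major) return false;
  // Within a major version, the server must be at least as new as the client expects.
  if (have.minor !== want.minor) return have.minor > want.minor;
  return have.patch >= want.patch;
}

console.log(isCompatible("1.2.0", "1.3.1")); // true
console.log(isCompatible("1.2.0", "2.0.0")); // false
```

A client that gets `false` can fall back to an older tool, skip the tool, or surface a clear error instead of failing on a schema mismatch mid-call.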

Real-World MCP Use Cases

| Use Case | Example Servers | Outcome |
|---|---|---|
| AI Knowledge Assistant | KB + Web Fetch | Grounded contextual Q&A |
| DevOps Agent | FS + Git | Safe code changes and audits |
| Data Concierge | Cloud + KB | Enterprise data search |
| Governance Bot | All servers | Full observability and compliance |

Conclusion — The Future of Contextual Interoperability

The Model Context Protocol is redefining how intelligent systems interact.

By bridging reasoning and execution, it transforms isolated tools into cooperative cognitive ecosystems.

Whether it’s reading code, fetching docs, or managing cloud data — MCP ensures everything runs securely, transparently, and interoperably.

As AI evolves, interoperability is intelligence.

And MCP is how you build it.

Frequently Asked Questions

What does MCP do?

It standardizes communication between AI clients and servers for tool discovery, schema exchange, and structured interoperability.

How is MCP different from a plain HTTP API?

MCP focuses on model-context exchange, not raw HTTP. It’s bidirectional, schema-driven, and purpose-built for AI reasoning.

Which SDKs are available?

Open SDKs exist in Node.js, Python, and Go, allowing you to build or consume MCP servers easily.

Can an agent use several MCP servers at once?

Yes. Agents can connect to multiple servers (Git, KB, Cloud) simultaneously under one orchestration plan.

Is MCP suitable for production use?

Yes. With token-based auth, validation, and audit logging, it’s designed for production-grade safety and observability.