What Are AI Orchestration Frameworks and Why Do They Matter?

The rise of Agentic AI has created a new challenge:

Large Language Models are powerful thinkers, but terrible coordinators.

They reason well, but they struggle to:

- Manage tool sequences

- Handle long-running workflows

- Preserve state across steps

- Coordinate multiple agents

- Interact with external APIs consistently

- Recover from failures

- Log and trace their actions

This is where AI orchestration frameworks enter the picture.

Orchestration frameworks provide the infrastructure that allows LLMs to behave like real software systems: structured, reliable, observable, and predictable.

In this guide, we’ll break down the major orchestration frameworks powering agentic systems today:

LangGraph, CrewAI, Semantic Kernel, and LlamaIndex.

We’ll explore how they work, what makes each one unique, and where they fit inside modern AI architectures.

This is your Tier-1 hub — built for clarity, for search engines, and for developers who want to understand this rapidly evolving landscape.

What Is AI Orchestration? (The Foundation of Agentic Systems)

AI orchestration refers to the system that manages, coordinates, and controls LLM-driven workflows.

If agentic AI is the “brain,” orchestration is:

- The spine (state & memory)

- The nervous system (signals & tool calls)

- The muscles (execution)

- The safety system (constraints & error handling)

It ensures that:

- Steps occur in the right order

- Memory persists across interactions

- Tools are used safely

- Multi-agent teams collaborate

- Errors, retries, and fallbacks are handled

- Everything is logged and observable

Without orchestration, an LLM remains a chat interface — not an agent.

Why Orchestration Frameworks Are Essential in Agentic AI

AI orchestration frameworks solve real engineering challenges:

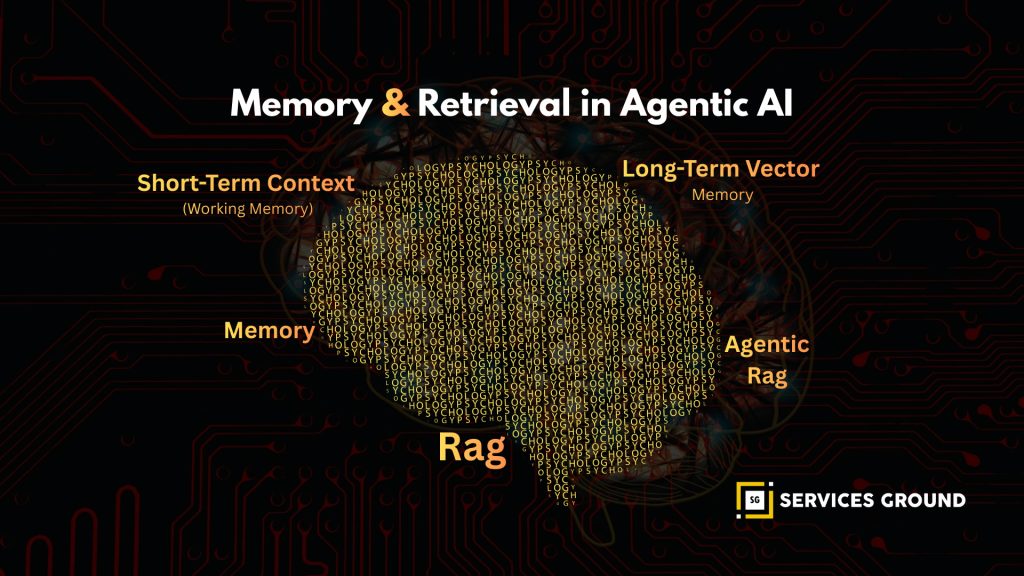

1. State Management

LLMs are stateless between calls, so they lose context across long workflows.

Frameworks maintain state so agents don’t “forget” their objectives.
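One way to picture this is a state object that every step reads and updates, saved after each step so the workflow can resume later. The sketch below is a minimal illustration in plain Python. `WorkflowState`, `save_state`, and `load_state` are hypothetical names chosen for this example, not part of any framework covered in this guide.

```python
import json
import os
import tempfile
from dataclasses import dataclass, field, asdict


@dataclass
class WorkflowState:
    """Hypothetical state record carried across workflow steps."""
    objective: str
    completed_steps: list = field(default_factory=list)
    notes: dict = field(default_factory=dict)


def save_state(state: WorkflowState, path: str) -> None:
    # Persist the full state as JSON so a later run can resume from it.
    with open(path, "w", encoding="utf-8") as f:
        json.dump(asdict(state), f)


def load_state(path: str) -> WorkflowState:
    # Rebuild the state object from the saved JSON.
    with open(path, "r", encoding="utf-8") as f:
        return WorkflowState(**json.load(f))


# Usage: run one step, persist, then resume as if in a new process.
state_path = os.path.join(tempfile.mkdtemp(), "state.json")
state = WorkflowState(objective="summarize quarterly report")
state.completed_steps.append("fetch_document")
save_state(state, state_path)

resumed = load_state(state_path)
print(resumed.objective, resumed.completed_steps)
```

Real frameworks usually replace the JSON file with a database or checkpoint store, but the idea is the same: the objective and progress live outside the model.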

2. Deterministic Tool Execution

LLMs can hallucinate function calls, inventing tool names or passing malformed arguments.

Frameworks enforce correct tool schemas and execution rules.
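As a rough illustration of schema enforcement, the sketch below validates a model-proposed tool call before anything runs. The registry, the `register_tool` and `execute_tool_call` helpers, and the call format (`name` plus `arguments`) are assumptions made for this example and do not mirror any specific framework's API.

```python
from typing import Any, Callable

# Hypothetical tool registry: name -> (required argument types, function).
TOOLS: dict[str, tuple[dict[str, type], Callable[..., Any]]] = {}


def register_tool(name: str, schema: dict[str, type], fn: Callable[..., Any]) -> None:
    TOOLS[name] = (schema, fn)


def execute_tool_call(call: dict) -> Any:
    """Validate a model-proposed call against its schema, then run it."""
    name = call.get("name")
    args = call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    schema, fn = TOOLS[name]
    # Reject missing or unexpected arguments before touching the tool.
    if set(args) != set(schema):
        raise ValueError(f"expected arguments {sorted(schema)}, got {sorted(args)}")
    for key, expected in schema.items():
        if not isinstance(args[key], expected):
            raise TypeError(f"argument {key!r} must be {expected.__name__}")
    return fn(**args)


register_tool("add", {"a": int, "b": int}, lambda a, b: a + b)

result = execute_tool_call({"name": "add", "arguments": {"a": 2, "b": 3}})
print(result)
```

A call to an unregistered tool, or one with a string where an integer is expected, is rejected with an error instead of being executed.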

3. Multi-Step & Multi-Agent Flows

Agents often need to collaborate — researcher → planner → executor → evaluator.

Orchestration manages these handoffs.
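The handoff chain above can be sketched as a list of steps sharing one context. In this simplified example each "agent" is a plain function standing in for an LLM-backed agent, and all names (`PIPELINE`, `run_pipeline`, the context keys) are hypothetical.

```python
from typing import Callable


# Each stand-in agent reads the shared context and returns the fields it adds.
def researcher(ctx: dict) -> dict:
    return {"findings": f"notes on {ctx['goal']}"}


def planner(ctx: dict) -> dict:
    return {"plan": [f"draft using {ctx['findings']}"]}


def executor(ctx: dict) -> dict:
    return {"output": "; ".join(ctx["plan"])}


def evaluator(ctx: dict) -> dict:
    return {"approved": bool(ctx["output"])}


PIPELINE: list[tuple[str, Callable[[dict], dict]]] = [
    ("researcher", researcher),
    ("planner", planner),
    ("executor", executor),
    ("evaluator", evaluator),
]


def run_pipeline(goal: str) -> dict:
    """Run the agents in order and record each handoff."""
    ctx: dict = {"goal": goal, "handoffs": []}
    for name, agent in PIPELINE:
        ctx.update(agent(ctx))
        ctx["handoffs"].append(name)
    return ctx


final = run_pipeline("pricing trends")
print(final["handoffs"], final["approved"])
```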

4. Observability & Debugging

Frameworks produce logs, traces, and replayable sessions, so you can see what an agent did and why.
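A minimal sketch of step-level tracing, assuming an in-memory list as the trace store. The `traced` decorator and the `TRACE` list are hypothetical; production systems would typically send these events to a tracing backend instead.

```python
import functools
import time

TRACE: list[dict] = []  # hypothetical in-memory trace store


def traced(step_name: str):
    """Record inputs, outcome, and duration of each call to the wrapped step."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            event = {"step": step_name, "args": args, "kwargs": kwargs}
            try:
                event["result"] = fn(*args, **kwargs)
                event["status"] = "ok"
                return event["result"]
            except Exception as exc:
                event["status"] = "error"
                event["error"] = repr(exc)
                raise
            finally:
                # Runs on success and on failure, so every call is logged.
                event["seconds"] = time.perf_counter() - start
                TRACE.append(event)
        return wrapper
    return decorator


@traced("lookup")
def lookup(term: str) -> str:
    return term.upper()


@traced("divide")
def divide(a: float, b: float) -> float:
    return a / b


lookup("agent")
try:
    divide(1, 0)
except ZeroDivisionError:
    pass

for event in TRACE:
    print(event["step"], event["status"])
```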

5. Safety & Human-in-the-Loop

You can add governance gates and approval checkpoints.
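An approval checkpoint can be as simple as a callback consulted before a sensitive action runs. In the sketch below, `run_with_approval` and `reviewer` are hypothetical; the `reviewer` function is a stand-in policy where a real system would pause and wait for a person.

```python
from typing import Callable


def run_with_approval(
    action: str,
    execute: Callable[[], str],
    approve: Callable[[str], bool],
) -> str:
    """Run `execute` only if the reviewer callback approves the action."""
    if not approve(action):
        return f"blocked: {action}"
    return execute()


# Stand-in for a human reviewer: rejects anything that starts with "delete".
def reviewer(action: str) -> bool:
    return not action.startswith("delete")


sent = run_with_approval("send_report", lambda: "report sent", reviewer)
blocked = run_with_approval("delete_records", lambda: "records deleted", reviewer)
print(sent)
print(blocked)
```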

The Core Components of an AI Orchestration Framework

Most modern orchestration frameworks share five architectural pillars:

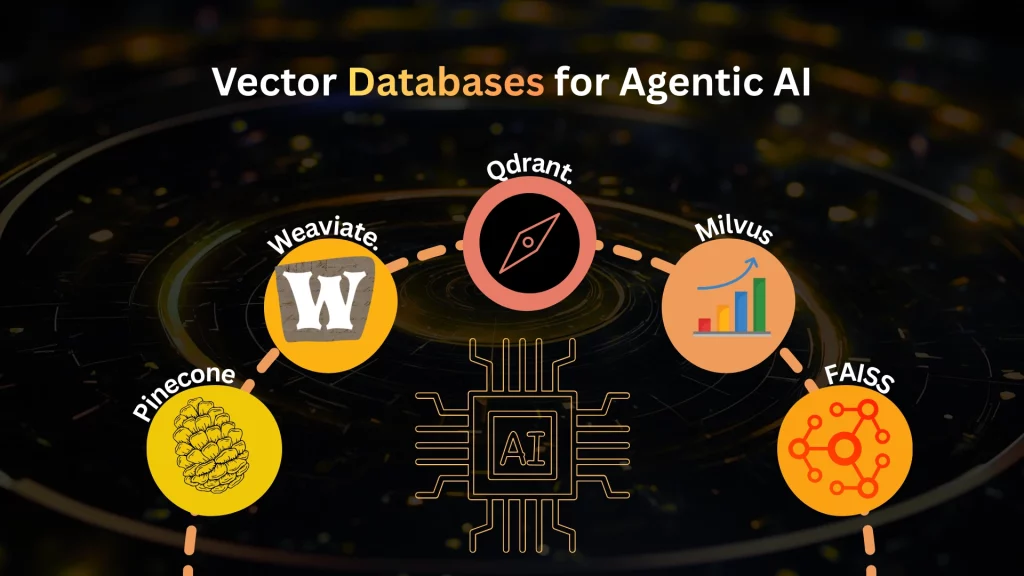

- Graph / DAG-based flow control

- State stores & memory systems

- Tool / function caller integration

- Multi-agent coordination

- Observability, retries & safety constraints

The difference between frameworks lies in how they implement these pillars.

Below is a breakdown framework-by-framework.

LangGraph — Graph-Native Orchestration for Agent Workflows

LangGraph has quickly become one of the most widely used frameworks for agent orchestration.

It builds on the LangChain ecosystem but focuses entirely on:

- Stateful agent workflows

- Graphs instead of linear chains

- Recoverable, traceable execution

- Deterministic tool use

Why LangGraph Is Dominating the Ecosystem

- Graph-based reasoning (nodes = steps, edges = transitions)

- Persistent state via checkpointers

- Human-in-the-loop at any node

- Multi-agent collaboration

- Observability through integration with LangSmith tracing

- Perfect for ReAct, ToT, and custom cognitive loops

LangGraph is ideal when you want workflows that feel like software, not chat sessions.
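To make the "nodes = steps, edges = transitions" idea concrete, here is a small graph runner in plain Python. It is a conceptual sketch only and deliberately does not use LangGraph's API; `NODES`, `EDGES`, and `run_graph` are hypothetical names. The review node can route back to the draft node, which is the kind of loop a linear chain cannot express.

```python
from typing import Callable, Optional

State = dict


def draft(state: State) -> State:
    # Each attempt produces a slightly different draft.
    attempts = state["attempts"] + 1
    return {**state, "draft": state["topic"] + "!" * attempts, "attempts": attempts}


def review(state: State) -> State:
    # Toy acceptance rule: the draft must end with two exclamation marks.
    return {**state, "accepted": state["draft"].endswith("!!")}


NODES: dict[str, Callable[[State], State]] = {"draft": draft, "review": review}

# Each edge function returns the next node name, or None to stop.
EDGES: dict[str, Callable[[State], Optional[str]]] = {
    "draft": lambda s: "review",
    "review": lambda s: None if s["accepted"] else "draft",
}


def run_graph(entry: str, state: State, max_steps: int = 10) -> State:
    """Follow edges from the entry node until an edge returns None."""
    current: Optional[str] = entry
    path: list[str] = []
    while current is not None:
        if len(path) >= max_steps:
            raise RuntimeError("step limit reached")
        state = NODES[current](state)
        path.append(current)
        current = EDGES[current](state)
    return {**state, "path": path}


final_state = run_graph("draft", {"topic": "hello", "attempts": 0})
print(final_state["path"], final_state["draft"])
```

The `max_steps` guard is a design choice worth keeping in any looped workflow, since a conditional edge that never resolves would otherwise run forever.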

Real-World Use Cases

- Autonomous research agents

- Code generation pipelines

- Customer support agent teams

- Long-running automation flows

- Multi-agent reasoning with explicit state

CrewAI — Role-Based Multi-Agent Collaboration

CrewAI focuses on teams of AI agents working together, similar to human project teams.

Instead of graphs, CrewAI uses:

- Roles (researcher, strategist, planner, analyst)

- Tasks (structured objectives)

- Process flows (sequential or hierarchical)

Why CrewAI Is Unique

- It mirrors real-world team collaboration

- Simple mental model: assign roles → define tasks → run a crew

- Built-in multi-agent communication

- Tool integration for each agent

- Memory persistence and history tracking
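The "assign roles → define tasks → run a crew" model can be sketched in a few lines of plain Python. This is an illustration of the mental model only, not CrewAI's API: the `Agent` and `Task` classes and the `run_crew` function here are hypothetical, and the agents are simple functions rather than LLM calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    role: str
    # (task description, previous output) -> this agent's output
    work: Callable[[str, str], str]


@dataclass
class Task:
    description: str
    agent: Agent


def run_crew(tasks: list[Task]) -> list[str]:
    """Run tasks in order, passing each output to the next task."""
    outputs: list[str] = []
    previous = ""
    for task in tasks:
        previous = task.agent.work(task.description, previous)
        outputs.append(f"{task.agent.role}: {previous}")
    return outputs


researcher = Agent("researcher", lambda desc, prev: f"facts for '{desc}'")
analyst = Agent("analyst", lambda desc, prev: f"{desc} based on {prev}")

crew_outputs = run_crew([
    Task("collect market data", researcher),
    Task("write summary", analyst),
])
for line in crew_outputs:
    print(line)
```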

Best For

- Research teams

- Marketing + content workflows

- Product research

- Software agent teams

- Knowledge work automation

CrewAI is the “task force” of orchestration frameworks — perfect for multi-agent intelligence.

Semantic Kernel — Enterprise-Grade AI Orchestration

Microsoft’s Semantic Kernel (SK) is purpose-built for enterprise AI workflows, emphasizing:

- Safety

- Policy enforcement

- Repeatable skill definitions

- Integration with enterprise systems (Azure, Microsoft 365, APIs)

What Makes Semantic Kernel Enterprise-Class

- Plugins (formerly called skills) to encapsulate logic

- Planner system for LLM-driven decomposition

- Native grounding in vector stores

- Emphasis on reproducible, predictable execution

- Support for multi-step pipelines and tool orchestration

Best For

- Large organizations with compliance needs

- Enterprise automation

- Policy-controlled agentic systems

- Regulated industries (finance, healthcare)

SK is not the most experimental — but it’s one of the most stable and safe frameworks.

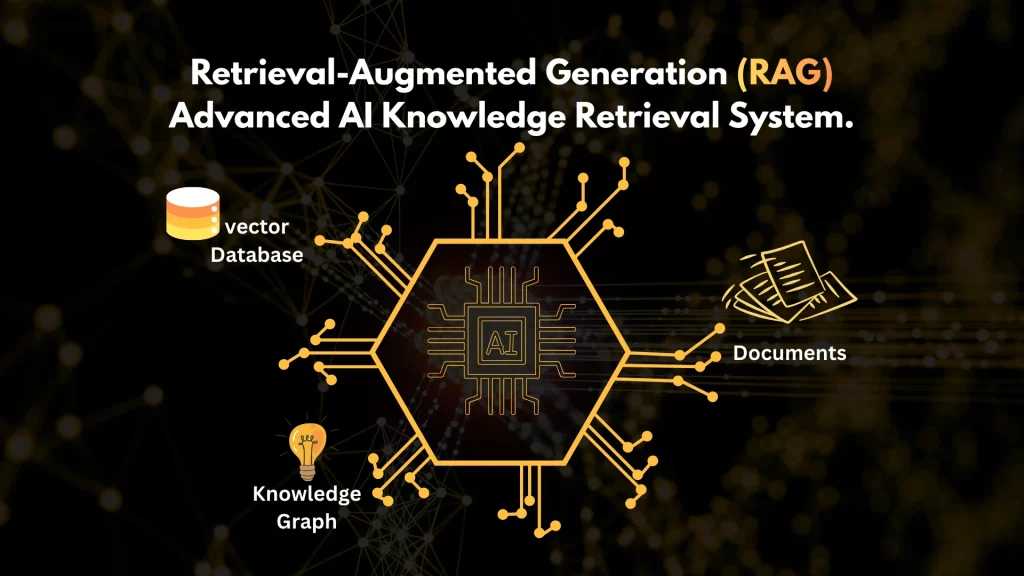

LlamaIndex — Data-Centric Orchestration

LlamaIndex is often thought of as a retrieval framework, but recent updates make it a strong orchestration tool, especially in scenarios that require:

- Knowledge graph integration

- Document agent pipelines

- Structured tool calling based on retrieved context

Why LlamaIndex Matters for Orchestration

- Query engines with agent routing

- Step-by-step workflows for RAG agents

- Autonomous document workflows

- Flexible agent/router architecture
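Agent routing over query engines can be illustrated with a toy router that sends each question to the data source it best matches. This sketch uses keyword overlap purely for illustration; a real router would more likely use an LLM or embeddings to choose. It does not use LlamaIndex's API, and `ENGINES`, `route`, and `answer` are hypothetical names.

```python
from typing import Callable

# Hypothetical query engines: name -> (routing keywords, query function).
ENGINES: dict[str, tuple[set[str], Callable[[str], str]]] = {
    "contracts": ({"contract", "clause", "termination"}, lambda q: f"[contracts] {q}"),
    "invoices": ({"invoice", "payment", "due"}, lambda q: f"[invoices] {q}"),
}


def route(query: str) -> str:
    """Pick the engine whose keywords overlap most with the query."""
    words = set(query.lower().split())
    best = max(ENGINES, key=lambda name: len(ENGINES[name][0] & words))
    if not ENGINES[best][0] & words:
        raise LookupError("no engine matches this query")
    return best


def answer(query: str) -> str:
    # Dispatch the query to the selected engine.
    return ENGINES[route(query)][1](query)


routed = route("when is the invoice payment due")
reply = answer("show the termination clause")
print(routed)
print(reply)
```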

Best For

- Knowledge-heavy agents

- Enterprise RAG systems

- Document enrichment and processing

- Context-aware agent execution

It shines wherever data + reasoning must be tightly integrated.

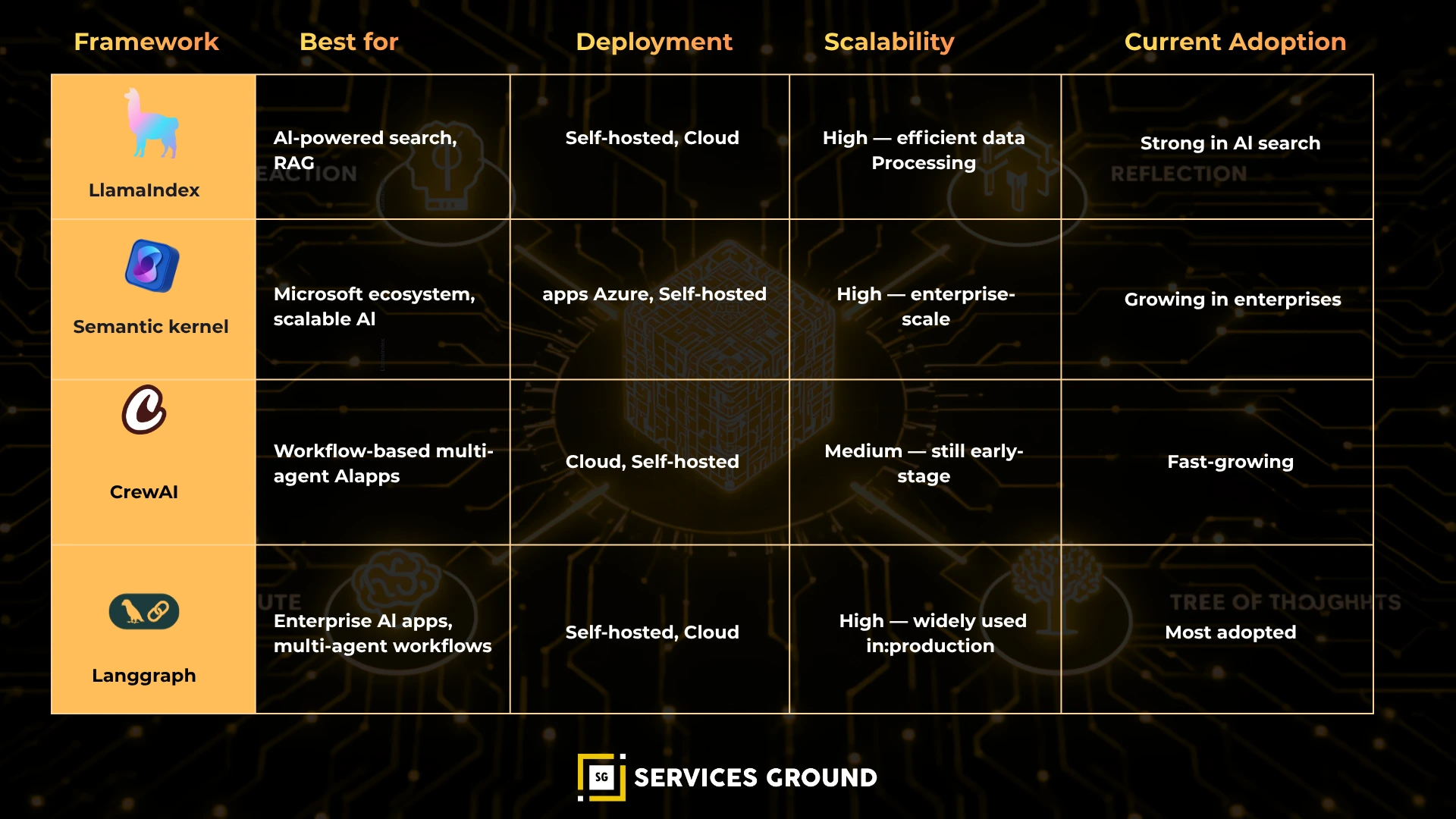

Side-by-Side Comparison of Orchestration Frameworks

| Feature | LangGraph | CrewAI | Semantic Kernel | LlamaIndex |
| --- | --- | --- | --- | --- |
| Focus | Graph workflows | Team collaboration | Enterprise orchestration | Data-oriented workflows |
| Best For | Multi-step pipelines | Multi-agent teams | Compliance-heavy orgs | RAG + knowledge systems |
| State Management | Strong | Medium | Strong | Good |
| Tool Calling | Excellent | Good | Strong | Good |
| Observability | Advanced | Medium | Advanced | Medium |
| Learning Curve | Medium | Easy | Medium | Easy |

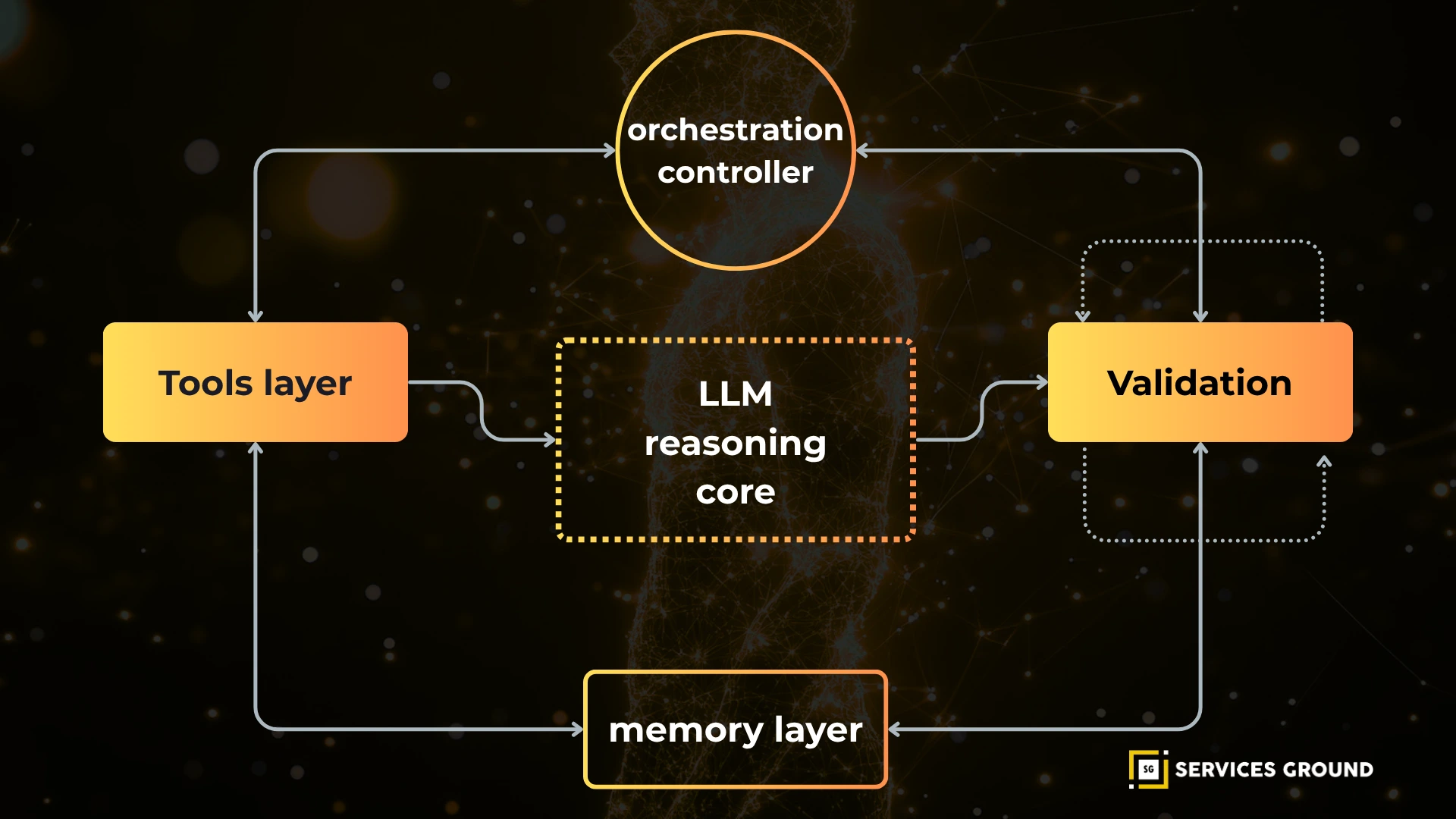

How Orchestration Fits Into an Agentic AI Architecture

A production agent system includes:

- LLM (reasoning brain)

- Tooling layer (actions)

- Memory layer (short + long-term)

- Validation guardrails

- Orchestration (workflow controller)

- Observability (logs, traces, events)

Orchestration frameworks sit right in the center — connecting everything else.

When to Use Which Framework

Use LangGraph if:

- You need stable, stateful, recoverable workflows

- You have multiple tools or agents

- You want graph logic for branching & loops

Use CrewAI if:

- You want role-based multi-agent teams

- You prefer simple task definitions

- You automate research, content, or planning tasks

Use Semantic Kernel if:

- You build enterprise-grade apps

- You need governance and reproducibility

- You must integrate deeply with Azure/Microsoft tools

Use LlamaIndex if:

- Your agent relies heavily on documents and context

- You need advanced RAG + agent integration

- Your workflows revolve around data transformation

Best Practices for Building Orchestrated Agentic Systems

- Plan your workflow before coding

- Define tool schemas tightly (avoid ambiguity)

- Add fallbacks, retries, breakpoints

- Enable observability from day one

- Add human-in-the-loop for critical paths

- Persist state for long-running tasks

- Use version-controlled skills/functions
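The "fallbacks, retries" practice above can be sketched as a small wrapper: try the primary step a few times with exponential backoff, then fall back. The `with_retries` helper and the two example functions are hypothetical, and the delay values are arbitrary choices for the example.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    primary: Callable[[], T],
    fallback: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.01,
) -> T:
    """Try `primary` up to `attempts` times, then use `fallback`."""
    for attempt in range(attempts):
        try:
            return primary()
        except Exception:
            # Back off exponentially between attempts, but not after the last.
            if attempt < attempts - 1:
                time.sleep(base_delay * 2 ** attempt)
    return fallback()


calls = {"count": 0}


def flaky() -> str:
    # Fails twice, then succeeds on the third call.
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return "primary result"


def always_fails() -> str:
    raise ConnectionError("service down")


recovered = with_retries(flaky, lambda: "cached result")
degraded = with_retries(always_fails, lambda: "cached result")
print(recovered)
print(degraded)
```

In practice you would catch only the exception types you expect to be transient, rather than every `Exception` as this sketch does.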

Conclusion — The Backbone of Agentic AI

Orchestration frameworks transform LLMs from “smart assistants” into autonomous systems.

Whether it’s LangGraph’s graph logic, CrewAI’s collaborative agents, Semantic Kernel’s enterprise governance, or LlamaIndex’s data-centric workflows — orchestration is what makes intelligent systems reliable, safe, and scalable.

Frequently Asked Questions

An AI orchestration framework is a system that coordinates LLM agents, tools, memory, and workflows to ensure reliable, repeatable AI behavior.

The right framework depends on the workload: LangGraph for graphs, CrewAI for multi-agent tasks, Semantic Kernel for enterprise, LlamaIndex for knowledge workflows.

AI orchestration differs from traditional workflow automation: workflow automation is static, while AI orchestration is dynamic, reasoning-driven, and adaptive.

LangChain and LangGraph are not the same thing: LangChain is a toolkit, and LangGraph is the orchestration layer.