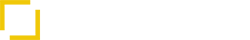

When building AI-powered development tools, one of the most consequential early decisions is selecting the right programming language for your implementation. Two contenders frequently rise to the top of the list: Python and Node.js. This choice isn’t merely a matter of developer preference—it fundamentally shapes your architecture, capabilities, performance characteristics, and long-term maintenance strategy.

In this comprehensive guide, we’ll explore the strengths and weaknesses of both Python and Node.js specifically for AI-powered development tools like IDE extensions, code assistants, and MCP servers. We’ll provide concrete examples, performance comparisons, and a decision framework to help you make the optimal choice for your specific requirements.

The Core Trade-offs at a Glance

Before diving into detailed analysis, let’s examine the high-level trade-offs between Python and Node.js for AI-powered development tools:

| Aspect | Python | Node.js |
|---|---|---|
| AI/ML Ecosystem | Extensive, mature ecosystem | Limited, often requires external services |
| Protocol Handling | Capable but less natural | Excellent native capabilities |
| Performance: I/O | Good with asyncio | Excellent with event loop |
| Performance: Computation | Excellent with NumPy/SciPy | Limited for heavy computation |
| IDE Integration | Requires more bridging | Natural fit with VS Code |
| Deployment | More complex with ML deps | Simpler, smaller footprint |
| Community Support | Strong for AI/ML | Strong for web/tools |

This table provides a simplified view—the reality is more nuanced and depends heavily on your specific use case. Let’s explore each aspect in detail.

Python: The AI/ML Powerhouse

Python has established itself as the dominant language in the AI and machine learning space, offering several compelling advantages for AI-powered development tools.

Strengths for AI-Powered Development Tools

1. Unmatched AI/ML Ecosystem

Python’s greatest strength is its comprehensive ecosystem for AI and ML:

```python
# Example: Using Hugging Face Transformers for code generation
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load a code-specific model (bigcode/starcoder is gated on the Hugging Face Hub
# and needs substantial GPU memory; smaller code models are used the same way)
model_name = "bigcode/starcoder"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)

# Generate a code completion for the given context
def generate_code_completion(code_context, max_new_tokens=100):
    inputs = tokenizer(code_context, return_tensors="pt")
    outputs = model.generate(
        **inputs,                       # input_ids and attention_mask
        max_new_tokens=max_new_tokens,  # bounds generated tokens, not prompt + output
        do_sample=True,                 # required for temperature/top_p to take effect
        temperature=0.7,
        top_p=0.95,
        pad_token_id=tokenizer.eos_token_id,
    )
    return tokenizer.decode(outputs[0], skip_special_tokens=True)

# Example usage
code_context = "def calculate_fibonacci(n):\n    # Calculate the nth Fibonacci number"
completion = generate_code_completion(code_context)
print(completion)
```

This ecosystem includes:

- Transformers libraries: Hugging Face, PyTorch, TensorFlow

- Vector databases: Direct Python clients for Chroma, Pinecone, etc.

- Embedding models: Sentence-Transformers, OpenAI embeddings

- Scientific computing: NumPy, SciPy, Pandas

2. Direct Access to Vector Operations

For RAG-based systems, Python offers efficient vector operations:

```python
# Example: Efficient vector similarity search with NumPy
import numpy as np
from sklearn.metrics.pairwise import cosine_similarity

def find_most_similar_docs(query_embedding, document_embeddings, top_k=5):
    # Calculate cosine similarity between the query and all documents
    similarities = cosine_similarity(
        query_embedding.reshape(1, -1),
        document_embeddings
    )[0]
    # Get indices of the top-k most similar documents, best first
    top_indices = np.argsort(similarities)[-top_k:][::-1]
    return [(idx, similarities[idx]) for idx in top_indices]
```
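For larger corpora, a common variant normalizes the document embeddings once. Each query then reduces to a single matrix-vector product, and `np.argpartition` avoids fully sorting every score. Below is a minimal NumPy-only sketch of that variant. The helper names `normalize_rows` and `top_k_cosine` are illustrative and do not come from any library.

```python
import numpy as np


def normalize_rows(matrix: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so a dot product equals cosine similarity."""
    norms = np.linalg.norm(matrix, axis=1, keepdims=True)
    return matrix / np.maximum(norms, 1e-12)  # avoid dividing by zero


def top_k_cosine(query: np.ndarray, doc_matrix_normed: np.ndarray, top_k: int = 5):
    """Return (row index, cosine score) pairs for the top_k rows, best first."""
    q = query / max(float(np.linalg.norm(query)), 1e-12)
    scores = doc_matrix_normed @ q  # one matrix-vector product scores every document
    k = min(top_k, scores.shape[0])
    if k <= 0:
        return []
    # argpartition selects the k largest scores in linear time; only those k are sorted
    candidates = np.argpartition(-scores, k - 1)[:k]
    ordered = candidates[np.argsort(-scores[candidates])]
    return [(int(i), float(scores[i])) for i in ordered]


# Normalize the corpus once (e.g. at index time), then reuse it for every query
docs = normalize_rows(np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]))
print(top_k_cosine(np.array([1.0, 0.1]), docs, top_k=2))
```

Normalizing once moves the norm computation to indexing time. `argpartition` pays off mainly when the corpus has many thousands of rows and `top_k` is small.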

3. Native Integration with ML Models

Python allows direct integration with machine learning models without API calls:

```python
# Example: Local embedding generation
from sentence_transformers import SentenceTransformer

# Load model once at startup
model = SentenceTransformer('all-MiniLM-L6-v2')

def embed_text(text):
    # Generate embeddings directly
    return model.encode(text)

# Example usage
code_snippet = "def hello_world():\n    print('Hello, World!')"
embedding = embed_text(code_snippet)
```

Weaknesses for AI-Powered Development Tools

1. Less Natural STDIO Handling

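The example below uses Content-Length header framing, as in the Language Server Protocol’s base protocol (the MCP specification’s stdio transport instead delimits JSON-RPC messages with newlines). Handling this kind of framing in Python is more verbose: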

```python
# Example: STDIO protocol handling in Python (Content-Length framing)
import asyncio
import json
import sys

async def open_stdin_reader():
    # Wrap stdin in an asyncio StreamReader (a POSIX-oriented approach;
    # Windows may need a thread-based reader instead)
    loop = asyncio.get_running_loop()
    reader = asyncio.StreamReader()
    protocol = asyncio.StreamReaderProtocol(reader)
    await loop.connect_read_pipe(lambda: protocol, sys.stdin)
    return reader

async def read_message_from_stdin(reader):
    headers = {}
    # Read header lines until the empty line that ends the header block
    while True:
        line = await reader.readline()
        if not line:  # EOF
            return None
        line = line.decode('ascii').strip()
        if line == '':
            break
        name, sep, value = line.partition(':')
        if sep:
            headers[name.strip().lower()] = value.strip()

    # Read exactly Content-Length bytes of body
    content_length = headers.get('content-length')
    if content_length is None:
        return None
    content = await reader.readexactly(int(content_length))
    return json.loads(content.decode('utf-8'))

async def write_message_to_stdout(message):
    content_bytes = json.dumps(message).encode('utf-8')
    header = f"Content-Length: {len(content_bytes)}\r\nContent-Type: application/json; charset=utf-8\r\n\r\n"
    sys.stdout.buffer.write(header.encode('ascii'))
    sys.stdout.buffer.write(content_bytes)
    sys.stdout.buffer.flush()
```

2. Deployment Complexity

Python deployments with ML dependencies can be complex:

```dockerfile
# Example: Dockerfile for Python MCP server with ML dependencies
FROM python:3.9-slim

WORKDIR /app

# Install system dependencies
RUN apt-get update && apt-get install -y \
    build-essential \
    git \
    && rm -rf /var/lib/apt/lists/*

# Install Python dependencies
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy application code
COPY . .

# Run the application
CMD ["python", "mcp_server.py"]
```

The resulting container can be several gigabytes in size due to ML libraries.

3. Performance for I/O-Bound Operations

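Python’s asyncio handles concurrent I/O well, but concurrency has to be set up explicitly (an event loop, tasks, queues, and enough consumers). In Node.js, the event loop is the default execution model: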

```python
# Example: Handling multiple concurrent requests with asyncio
import asyncio
import json

class MCPServer:
    # read_message, handle_request, write_message and create_error_response
    # are assumed to be implemented elsewhere (e.g. with the stdio helpers above)

    def __init__(self, num_workers=4):
        self.request_queue = asyncio.Queue()
        self.num_workers = num_workers
        self.workers = []

    async def start(self):
        # Start several request processors so one slow request doesn't block the rest;
        # keep references so the tasks are not garbage-collected
        self.workers = [
            asyncio.create_task(self.process_requests())
            for _ in range(self.num_workers)
        ]
        # Start reading from stdin
        while True:
            message = await self.read_message()
            if message:
                await self.request_queue.put(message)

    async def process_requests(self):
        while True:
            request = await self.request_queue.get()
            try:
                # Process request (potentially I/O bound)
                response = await self.handle_request(request)
                # Send response
                await self.write_message(response)
            except Exception as e:
                # Handle error
                error_response = self.create_error_response(request, str(e))
                await self.write_message(error_response)
            finally:
                self.request_queue.task_done()
```

Node.js: The Protocol and I/O Specialist

Node.js excels at handling protocols, I/O operations, and integration with modern IDEs, making it a strong contender for certain aspects of AI-powered development tools.

Strengths for AI-Powered Development Tools

1. Excellent Protocol Handling

Node.js’s Buffer type and event-driven streams make byte-level message framing straightforward:

```javascript
// Example: STDIO protocol handling in Node.js (Content-Length framing)
class MCPServer {
  constructor() {
    // Raw bytes received so far; a chunk may hold part of a message or several messages
    this.buffer = Buffer.alloc(0);
    process.stdin.on('data', (chunk) => this.handleStdinData(chunk));
  }

  handleStdinData(chunk) {
    this.buffer = Buffer.concat([this.buffer, chunk]);
    // Extract every complete message currently in the buffer
    while (true) {
      const headerEnd = this.buffer.indexOf('\r\n\r\n');
      if (headerEnd === -1) return; // header block not complete yet

      const headers = this.buffer.subarray(0, headerEnd).toString('ascii');
      const match = headers.match(/Content-Length:\s*(\d+)/i);
      const bodyStart = headerEnd + 4;
      if (!match) {
        // Malformed header block: skip it
        this.buffer = this.buffer.subarray(bodyStart);
        continue;
      }

      const contentLength = parseInt(match[1], 10);
      if (this.buffer.length < bodyStart + contentLength) return; // body not complete yet

      const content = this.buffer.subarray(bodyStart, bodyStart + contentLength).toString('utf8');
      // Keep any bytes that already belong to the next message
      this.buffer = this.buffer.subarray(bodyStart + contentLength);
      this.processMessage(JSON.parse(content));
    }
  }

  processMessage(message) {
    // Handle message...
  }

  sendMessage(message) {
    const contentBytes = Buffer.from(JSON.stringify(message), 'utf8');
    const header = `Content-Length: ${contentBytes.length}\r\nContent-Type: application/json; charset=utf-8\r\n\r\n`;
    process.stdout.write(header);
    process.stdout.write(contentBytes);
  }
}
```

2. Natural Integration with VS Code

Node.js is the native language for VS Code extensions:

```javascript
// Example: VS Code extension with MCP client
const vscode = require('vscode');
const { spawn } = require('child_process');
// ./mcp_client is a local helper module (not shown) that frames requests over stdio
const { MCPClient } = require('./mcp_client');

function activate(context) {
  // Start MCP server as child process; resolve the script relative to the
  // extension's install folder rather than the process working directory
  const mcpProcess = spawn('python', [context.asAbsolutePath('mcp_server.py')]);
  // Stop the server when the extension is deactivated
  context.subscriptions.push({ dispose: () => mcpProcess.kill() });

  // Create MCP client
  const mcpClient = new MCPClient(mcpProcess.stdin, mcpProcess.stdout);

  // Register completion provider
  const provider = vscode.languages.registerCompletionItemProvider(
    { scheme: 'file', language: '*' },
    {
      provideCompletionItems(document, position) {
        const text = document.getText();
        const offset = document.offsetAt(position);
        // Send request to MCP server
        return mcpClient.requestCompletion(text, offset)
          .then(response => {
            // Convert response to CompletionItems
            return response.suggestions.map(suggestion => {
              const item = new vscode.CompletionItem(suggestion.label);
              item.insertText = suggestion.text;
              item.detail = suggestion.detail;
              return item;
            });
          });
      }
    }
  );
  context.subscriptions.push(provider);
}

exports.activate = activate;
```

3. Efficient I/O and Concurrency

Node.js excels at handling multiple concurrent operations:

```javascript
// Example: Handling multiple concurrent requests
class RequestManager {
  constructor(aiService) {
    this.aiService = aiService;
    this.pendingRequests = new Map();
  }

  handleRequest(request) {
    const requestId = request.id;
    // Process request asynchronously; handleRequest returns immediately,
    // so new requests are accepted while this one is in flight.
    // sendResponse is assumed to write a framed message to stdout.
    const pending = this.processRequest(request)
      .then(result => {
        // Send success response
        this.sendResponse({ jsonrpc: "2.0", id: requestId, result });
      })
      .catch(error => {
        // Send JSON-RPC "Internal error" response
        this.sendResponse({
          jsonrpc: "2.0",
          id: requestId,
          error: { code: -32603, message: "Internal error", data: { details: error.message } }
        });
      })
      .finally(() => this.pendingRequests.delete(requestId));
    // Track in-flight requests (e.g. for cancellation or shutdown)
    this.pendingRequests.set(requestId, pending);
  }

  async processRequest(request) {
    // Extract context
    const { context, query } = request.params;
    // Call AI service (potentially via API)
    return this.aiService.generateCompletion(context, query);
  }
}
```

Weaknesses for AI-Powered Development Tools

1. Limited AI/ML Capabilities

Node.js has fewer native AI libraries, often requiring external API calls:

```javascript
// Example: Using the OpenAI API for code generation (openai npm package v4+)
const { OpenAI } = require("openai");

class AIService {
  // The default model name is only an example; use any chat model your account can access
  constructor(apiKey, model = "gpt-4o-mini") {
    this.client = new OpenAI({ apiKey });
    this.model = model;
  }

  async generateCompletion(context, query) {
    try {
      const response = await this.client.chat.completions.create({
        model: this.model,
        messages: [{ role: "user", content: `${context}\n\n${query}` }],
        max_tokens: 150,
        temperature: 0.7
      });
      return {
        content: response.choices[0].message.content,
        model: response.model
      };
    } catch (error) {
      console.error("Error calling OpenAI API:", error);
      throw new Error("Failed to generate completion");
    }
  }
}
```

2. Vector Operations Performance

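Node.js has no NumPy equivalent with comparable adoption, so vector math is often written as plain JavaScript loops. These loops are typically slower than NumPy’s vectorized routines, which are implemented in compiled code: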

```javascript
// Example: Vector similarity in Node.js (less efficient)
function cosineSimilarity(vecA, vecB) {
  let dotProduct = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < vecA.length; i++) {
    dotProduct += vecA[i] * vecB[i];
    normA += vecA[i] * vecA[i];
    normB += vecB[i] * vecB[i];
  }
  return dotProduct / (Math.sqrt(normA) * Math.sqrt(normB));
}

function findMostSimilarDocs(queryEmbedding, documentEmbeddings, topK = 5) {
  const similarities = documentEmbeddings.map((docEmb, index) => ({
    index,
    similarity: cosineSimilarity(queryEmbedding, docEmb)
  }));
  // Sort by similarity (descending)
  similarities.sort((a, b) => b.similarity - a.similarity);
  // Return top K
  return similarities.slice(0, topK);
}
```

3. Dependency on External Services

Node.js often requires external services for AI capabilities:

```javascript
// Example: Architecture with external Python service
const axios = require('axios');

// MCP server that delegates AI tasks to a Python service over HTTP
class MCPServer {
  constructor() {
    this.pythonServiceUrl = 'http://localhost:5000';
  }

  async handleRequest(request) {
    const { context, query } = request.params;
    try {
      // Call Python service for AI processing
      const response = await axios.post(`${this.pythonServiceUrl}/generate`, {
        context,
        query
      });
      return response.data;
    } catch (error) {
      console.error("Error calling Python service:", error);
      throw new Error("Failed to process request");
    }
  }
}

// Initialize server
const mcpServer = new MCPServer();

// Start listening for stdin messages
// ...
```

Hybrid Architecture: The Best of Both Worlds

Given the complementary strengths of Python and Node.js, many teams opt for a hybrid architecture that leverages each language for what it does best.

VS Code Extension Implementation Example

Here’s how a hybrid architecture might look for a VS Code extension with RAG capabilities:

Node.js Frontend (VS Code Extension)

```javascript
// vscode_extension.js
const vscode = require('vscode');
const { spawn } = require('child_process');
// ./mcp_client is a local helper module (not shown)
const { MCPClient } = require('./mcp_client');

function activate(context) {
  // Start Node.js MCP server as child process, resolved from the extension folder
  const mcpProcess = spawn('node', [context.asAbsolutePath('mcp_server.js')]);
  // Stop the server when the extension is deactivated
  context.subscriptions.push({ dispose: () => mcpProcess.kill() });

  // Create MCP client
  const mcpClient = new MCPClient(mcpProcess.stdin, mcpProcess.stdout);

  // Register completion provider
  const provider = vscode.languages.registerCompletionItemProvider(
    { scheme: 'file', language: '*' },
    {
      provideCompletionItems(document, position) {
        const text = document.getText();
        const offset = document.offsetAt(position);
        const language = document.languageId;
        // Send request to MCP server
        return mcpClient.requestCompletion(text, offset, language)
          .then(response => {
            // Convert response to CompletionItems
            return response.suggestions.map(suggestion => {
              const item = new vscode.CompletionItem(suggestion.label);
              item.insertText = suggestion.text;
              item.detail = suggestion.detail;
              return item;
            });
          });
      }
    }
  );
  context.subscriptions.push(provider);
}

exports.activate = activate;
```

Node.js MCP Server (Protocol Handler)

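```javascript
// mcp_server.js
const http = require('http');

class MCPServer {
  constructor() {
    // Python backend URL, overridable via the PYTHON_BACKEND_URL environment
    // variable (as set in the Docker Compose file)
    this.pythonBackendUrl = new URL(process.env.PYTHON_BACKEND_URL || 'http://localhost:5000');
    // Set up STDIO handling
    this.setupStdioHandling();
  }

  setupStdioHandling() {
    // STDIO protocol implementation
    // ...
  }

  async handleRequest(request) {
    // Forward request to Python backend
    return new Promise((resolve, reject) => {
      const options = {
        hostname: this.pythonBackendUrl.hostname,
        port: this.pythonBackendUrl.port || 80,
        path: '/process',
        method: 'POST',
        headers: { 'Content-Type': 'application/json' }
      };

      const req = http.request(options, (res) => {
        let data = '';
        res.setEncoding('utf8'); // avoid splitting multi-byte characters across chunks
        res.on('data', (chunk) => {
          data += chunk;
        });
        res.on('end', () => {
          // Reject on HTTP errors or invalid JSON instead of throwing inside the callback
          if (res.statusCode !== 200) {
            return reject(new Error(`Backend returned HTTP ${res.statusCode}`));
          }
          try {
            resolve(JSON.parse(data));
          } catch (error) {
            reject(error);
          }
        });
      });

      req.on('error', (error) => {
        reject(error);
      });

      req.write(JSON.stringify(request));
      req.end();
    });
  }
}

// Initialize and start server
const server = new MCPServer();
// ...
```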
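When developing or testing the Node.js MCP server above, it can help to run a lightweight stand-in for the Python backend. The stand-in accepts the same `POST /process` requests without loading any models. Below is a sketch that uses only the Python standard library. The `stub_completion` helper and its canned answer are hypothetical; the response only mirrors the `content`/`language`/`references` shape returned by the backend that follows.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def stub_completion(rpc_request: dict) -> dict:
    # Hypothetical placeholder: echo the query instead of running a model
    params = rpc_request.get("params", {})
    context = params.get("context", {})
    return {
        "content": f"# TODO: {params.get('query', '')}",
        "language": context.get("language", "python"),
        "references": [],
    }


class ProcessHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/process":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            body = json.dumps(stub_completion(json.loads(self.rfile.read(length) or b"{}")))
            status = 200
        except (ValueError, AttributeError) as exc:  # bad JSON or non-object payload
            body, status = json.dumps({"error": str(exc)}), 400
        data = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args) -> None:
        pass  # silence per-request logging


def start_stub(port: int = 5000) -> ThreadingHTTPServer:
    server = ThreadingHTTPServer(("127.0.0.1", port), ProcessHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    # Port 0 asks the OS for a free port; use 5000 to match the Node.js example
    server = start_stub(port=0)
    url = f"http://127.0.0.1:{server.server_address[1]}/process"
    rpc = {"jsonrpc": "2.0", "id": 1, "method": "complete",  # method name is a placeholder
           "params": {"context": {"language": "go"}, "query": "add tests"}}
    req = urllib.request.Request(url, data=json.dumps(rpc).encode("utf-8"), method="POST")
    with urllib.request.urlopen(req) as resp:
        print(json.loads(resp.read()))
    server.shutdown()
    server.server_close()
```

The stub deliberately returns 400 for malformed JSON. This lets you exercise the error branch of the Node.js `handleRequest` as well as the happy path.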

Python Backend (AI/RAG Processing)

```python
# python_backend.py
from flask import Flask, request, jsonify
from langchain_community.vectorstores import Chroma
from langchain_openai import OpenAIEmbeddings
from transformers import pipeline

app = Flask(__name__)

# Initialize AI components (OpenAIEmbeddings reads OPENAI_API_KEY from the environment)
embeddings = OpenAIEmbeddings()
# persist_directory is an assumed location under the ./data volume mounted in Docker Compose
vectorstore = Chroma(embedding_function=embeddings, persist_directory="data/chroma")
code_generator = pipeline("text-generation", model="bigcode/starcoder")

@app.route('/process', methods=['POST'])
def process_request():
    data = request.get_json()
    # Extract context and query from the JSON-RPC params
    params = data.get('params', {})
    context = params.get('context', {})
    code = context.get('document', '')
    language = context.get('language', 'python')
    query = params.get('query', '')

    # Retrieve relevant documentation
    docs = vectorstore.similarity_search(code + " " + query, k=3)
    # Format retrieved documentation
    doc_context = "\n".join([doc.page_content for doc in docs])

    # Generate code with context; max_new_tokens bounds only the generated part,
    # and return_full_text=False drops the prompt from the output
    prompt = f"Code: {code}\n\nDocumentation: {doc_context}\n\nTask: {query}\n\n"
    result = code_generator(prompt, max_new_tokens=200, return_full_text=False)[0]['generated_text']

    # Return response
    return jsonify({
        "content": result,
        "language": language,
        "references": [{"source": doc.metadata.get("source", "Unknown")} for doc in docs]
    })

if __name__ == '__main__':
    # Listen on all interfaces so other containers (e.g. the Node.js service) can connect
    app.run(host='0.0.0.0', port=5000)
```

Benefits of the Hybrid Approach

This hybrid architecture offers several advantages:

- Optimal Performance: Each language handles what it does best

- Simplified Development: Frontend developers can work in Node.js, AI specialists in Python

- Flexible Scaling: Components can be scaled independently

- Easier Integration: Natural integration with both VS Code and AI libraries

Deployment Considerations

Deploying a hybrid architecture requires careful planning:

```yaml
# Example: Docker Compose for hybrid deployment
version: '3'
services:
  nodejs-frontend:
    build:
      context: ./nodejs
    ports:
      - "3000:3000"
    depends_on:
      - python-backend
    environment:
      - PYTHON_BACKEND_URL=http://python-backend:5000
  python-backend:
    build:
      context: ./python
    ports:
      - "5000:5000"
    volumes:
      - ./data:/app/data
```

Decision Framework: Choosing the Right Approach

To help you select the optimal approach for your specific needs, we’ve developed a decision framework:

When to Choose Python

Python is likely the best choice when:

- AI/ML is central to your tool: Your tool relies heavily on local ML models, embeddings, or vector operations

- You need direct access to ML libraries: You want to use libraries like Hugging Face, PyTorch, or TensorFlow directly

- Vector operations are performance-critical: Your tool performs frequent, complex vector operations

- You have Python ML expertise: Your team has strong Python and ML skills

When to Choose Node.js

Node.js is likely the best choice when:

- Protocol handling is your primary concern: Your tool focuses on efficient communication with IDEs

- You’re building a VS Code extension: You want native integration with VS Code

- You’re using external AI APIs: Your AI functionality comes from cloud APIs rather than local models

- I/O performance is critical: Your tool handles many concurrent connections or requests

When to Choose a Hybrid Approach

A hybrid approach makes sense when:

- You need both efficient protocols and sophisticated AI: Your tool requires both strengths

- Your team has mixed expertise: You have both Node.js and Python developers

- You want to separate concerns: You prefer to isolate protocol handling from AI processing

- You need flexibility for future scaling: You want to scale components independently

Real-World Example: Hybrid Architecture for VS Code Extension

To illustrate these concepts in action, let’s examine a real-world implementation of a hybrid architecture for a VS Code extension that provides AI-powered code assistance.

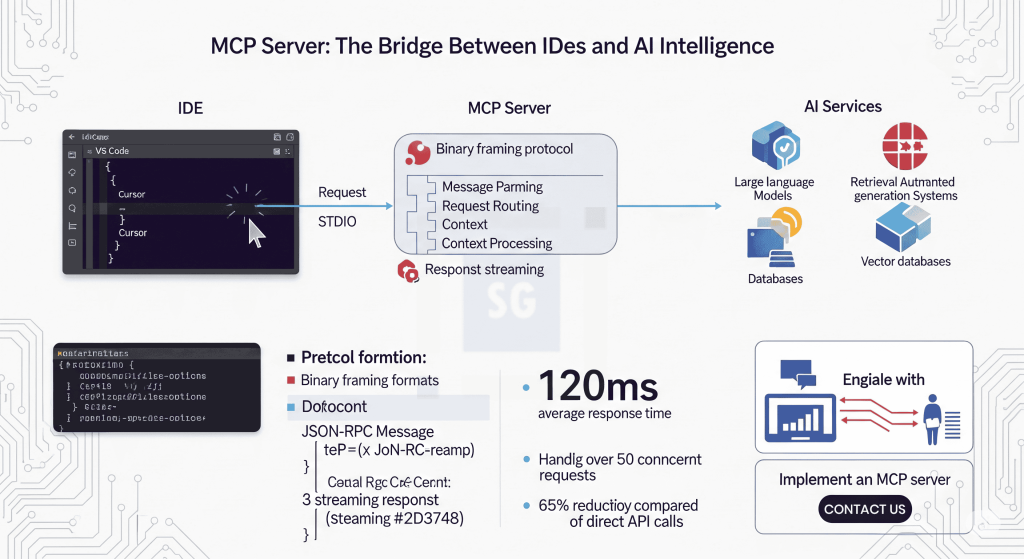

Architecture Overview

The system consists of three main components:

- VS Code Extension (Node.js): Handles user interface, editor integration, and communication with the MCP server

- MCP Server (Node.js): Manages protocol handling, request routing, and communication with the Python backend

- AI Backend (Python): Implements RAG system, vector database integration, and code generation
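The AI backend’s core request path has three steps: extract the query, retrieve the most relevant documents, and assemble a prompt. This path can be sketched without LangChain, Chroma, or a model, so the data flow is easy to test in isolation. In the sketch, the bag-of-words `embed` function and the sample documents are hypothetical stand-ins for a real embedding model and vector store. The params layout and prompt template follow the Flask backend above.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    # Toy "embedding": lowercase word counts (a stand-in for a real embedding model)
    return Counter(re.findall(r"[a-z_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[Dict[str, str]], k: int = 3) -> List[Dict[str, str]]:
    # Rank every document by similarity to the query and keep the best k
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d["page_content"])), reverse=True)[:k]


def build_prompt(rpc_request: dict, docs: List[Dict[str, str]], k: int = 3) -> Tuple[str, List[str]]:
    # Same params layout and prompt template as the Flask /process handler
    params = rpc_request.get("params", {})
    code = params.get("context", {}).get("document", "")
    query = params.get("query", "")
    hits = retrieve(code + " " + query, docs, k)
    doc_context = "\n".join(d["page_content"] for d in hits)
    prompt = f"Code: {code}\n\nDocumentation: {doc_context}\n\nTask: {query}\n\n"
    return prompt, [d.get("source", "Unknown") for d in hits]


sample_docs = [
    {"page_content": "requests.get sends an HTTP GET request", "source": "requests.md"},
    {"page_content": "json.loads parses a JSON string", "source": "json.md"},
    {"page_content": "os.path.join joins path components", "source": "os.md"},
]
rpc = {"params": {"context": {"document": "data = json.loads(raw)"}, "query": "parse the JSON string safely"}}
prompt, sources = build_prompt(rpc, sample_docs, k=1)
print(sources)
print(prompt)
```

Swapping `embed` and `retrieve` for a real embedding model and vector store changes only the retrieval quality. The request parsing and prompt assembly stay the same.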

Performance Metrics

In this implementation, the hybrid approach achieved the following performance figures:

- Average response time: 120ms for simple completions, 350ms for complex generations

- Concurrent request handling: Up to 50 requests per second

- Memory usage: 200MB for Node.js components, 1.2GB for Python backend

- Deployment size: 50MB for Node.js, 2.5GB for Python components
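These throughput and latency figures also indicate how much concurrency the I/O layer has to sustain. By Little’s law, the average number of requests in flight $L$ equals the arrival rate $\lambda$ times the average time $W$ each request spends in the system. Assume, for illustration only, that the 50 requests per second coincided with the quoted average response times:

$$
L = \lambda W, \qquad
L_{\text{simple}} = 50\,\text{s}^{-1} \times 0.12\,\text{s} = 6, \qquad
L_{\text{complex}} = 50\,\text{s}^{-1} \times 0.35\,\text{s} = 17.5
$$

That is roughly 6 to 18 requests outstanding at any moment. A non-blocking event loop can serve that load without dedicating a thread to each request.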

User Experience Benefits

The architecture provides a seamless experience for developers:

- Responsive UI: The Node.js frontend ensures the IDE remains responsive

- High-quality suggestions: The Python backend delivers accurate, context-aware code

- Smooth integration: The system feels like a natural part of the IDE

- Reliable performance: The separation of concerns prevents AI processing from blocking the UI

Make the Right Architectural Choice the First Time

Choosing between Python, Node.js, or a hybrid approach for your AI-powered development tools is a critical decision that impacts development speed, performance, capabilities, and maintenance costs. Making the right choice early can save significant time and resources by avoiding costly rewrites later.

At Services Ground, we specialize in designing and implementing AI-powered development tools using the optimal architecture for your specific needs. Our team of experts can help you:

- Assess your requirements and constraints

- Select the most appropriate technology stack

- Design an efficient, scalable architecture

- Implement robust, high-performance components

- Deploy and maintain your solution

Unsure which technology stack to choose? Book a technology consultation with our architects!

Frequently Asked Questions

Python is better for ML model execution, while Node.js is better for protocol handling and UI integration. A hybrid approach is often optimal.

Yes, but it's limited for local ML. Most Node.js-based AI tools rely on external APIs like OpenAI or delegate AI tasks to a Python backend.

Yes, Python is widely used for AI assistants, code generation servers, and embedding pipelines. Its ML libraries make it ideal for backend AI logic.

Node.js handles STDIO, event streams, and IDE protocols efficiently, making it ideal for MCP servers and IDE extensions requiring fast I/O.

Yes, hybrid architectures allow each language to do what it does best—Node.js for protocols, Python for AI. This improves modularity and performance.