In the world of AI-powered coding assistants, there’s a critical factor that separates mediocre suggestions from truly helpful ones: context generation. While large language models have impressive capabilities, they’re only as good as the context they receive. The art of transforming a developer’s current coding environment into an optimized context window is what enables AI to understand intent, provide relevant suggestions, and generate code that seamlessly integrates with existing projects.

This process goes far beyond feeding the current file to an AI model. It involves careful selection, prioritization, and enrichment of code context with documentation, project structure, and user intent. In this deep dive, we’ll explore how context generation works and why it’s crucial for effective code assistance.

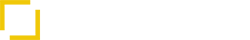

Why Context Matters: The Difference Between Good and Great AI Assistance

To understand the importance of context generation, consider these two scenarios:

Scenario 1: Poor Context Generation

A developer is working on a React component that needs to fetch data from an API and display it in a table. They’ve just started writing the component and need help implementing the data fetching logic.

With poor context generation, the AI assistant only sees:

    function UserTable() {
      // Need to fetch user data from API
      return (
        <div>
          {/* Table will go here */}
        </div>
      );
    }

The AI suggestion might be generic and disconnected from the project’s patterns:

    function UserTable() {
      // Need to fetch user data from API
      const [users, setUsers] = useState([]);

      useEffect(() => {
        fetch('https://api.example.com/users')
          .then(response => response.json())
          .then(data => setUsers(data));
      }, []);

      return (
        <table>
          <thead>
            <tr><th>ID</th><th>Name</th><th>Email</th></tr>
          </thead>
          <tbody>
            {users.map(user => (
              <tr key={user.id}>
                <td>{user.id}</td><td>{user.name}</td><td>{user.email}</td>
              </tr>
            ))}
          </tbody>
        </table>
      );
    }

While functional, this suggestion doesn’t align with the project’s API client patterns, error handling approach, or UI component library.

Scenario 2: Excellent Context Generation

With sophisticated context generation, the AI assistant sees:

- The current component being written

- Other similar components in the project that fetch data

- The project’s API client utility functions

- The custom table component used throughout the project

- Error handling patterns from nearby files

- Loading state management approaches used elsewhere

Now the AI suggestion becomes:

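    function UserTable() {
      // Need to fetch user data from API
      const { data: users, isLoading, error } = useApiQuery('/users');

      // LoadingSpinner, ErrorMessage, and DataTable are placeholder names
      // standing in for the project's own components
      if (isLoading) return <LoadingSpinner />;
      if (error) return <ErrorMessage error={error} />;

      return (
        <DataTable data={users} />
      );
    }

This suggestion aligns with the project’s patterns and conventions, making it immediately useful without significant modification.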

The Anatomy of Context Generation

Effective context generation involves several key components working together:

1. Context Window Management

AI models have limited context windows (the amount of text they can process at once). Effective context generation must:

- Prioritize the most relevant code snippets

- Manage token limits efficiently

- Ensure critical information is included

    # Example: Context window management logic
    def generate_optimized_context(current_file, cursor_position, project_files, max_tokens=4000):
        # Start with the current file
        context = current_file
        tokens_used = count_tokens(context)

        # Add relevant sections from other files
        relevant_files = find_relevant_files(current_file, project_files)
        for file in relevant_files:
            remaining = max_tokens - tokens_used
            if remaining <= 0:
                break  # Budget exhausted; no point scanning further files

            file_content = extract_relevant_sections(file)
            file_tokens = count_tokens(file_content)

            # Check if adding this file would exceed the token limit
            if file_tokens <= remaining:
                context += f"\n\n// File: {file.path}\n{file_content}"
                tokens_used += file_tokens
            else:
                # If we can't fit the whole file, try to extract just the most relevant parts
                key_sections = extract_key_sections(file, remaining)
                if key_sections:
                    context += f"\n\n// File: {file.path} (partial)\n{key_sections}"
                    tokens_used += count_tokens(key_sections)

        return context
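The function above leaves helpers such as `count_tokens` undefined. As a minimal, self-contained sketch of the same budgeting idea, the snippet below greedily keeps the highest-relevance snippets that still fit. The `Snippet` type, the whitespace-based token count, and the file names are illustrative assumptions, not part of any real tokenizer or project.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    path: str         # hypothetical file path, for illustration only
    text: str
    relevance: float  # higher means more relevant


def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def pack_snippets(snippets: list[Snippet], max_tokens: int) -> list[Snippet]:
    """Greedily keep the most relevant snippets that fit in the token budget."""
    chosen = []
    remaining = max_tokens
    for snippet in sorted(snippets, key=lambda s: s.relevance, reverse=True):
        cost = count_tokens(snippet.text)
        if cost <= remaining:
            chosen.append(snippet)
            remaining -= cost
    return chosen


snippets = [
    Snippet("api/client.js", "export function useApiQuery(path) { }", 0.9),
    Snippet("components/Table.jsx", "export function DataTable(props) { }", 0.6),
    Snippet("README.md", "word " * 50, 0.8),
]
packed = pack_snippets(snippets, max_tokens=12)
print([s.path for s in packed])  # the 50-token README is skipped
```

Greedy packing is simple but not optimal: a large, highly relevant snippet can be skipped entirely, which is why the original example falls back to extracting partial sections.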

2. Relevance Determination

Not all code in a project is equally relevant to the current task. Sophisticated systems determine relevance based on:

- Import Graph Analysis: Identifying dependencies between files

- Semantic Similarity: Finding code with similar purpose or functionality

- Usage Patterns: Identifying how similar components are used

- Recency: Prioritizing recently accessed files

    # Example: Relevance determination
    def find_relevant_files(current_file, project_files):
        relevant_files = []

        # Extract imports from current file
        imports = extract_imports(current_file)

        # Find files that are directly imported
        for file in project_files:
            if file.path in imports:
                relevant_files.append(file)

        # Find files with similar functionality
        current_file_embedding = generate_embedding(current_file)
        for file in project_files:
            if file not in relevant_files:  # Skip already included files
                file_embedding = generate_embedding(file.content)
                similarity = cosine_similarity(current_file_embedding, file_embedding)
                if similarity > 0.7:  # Threshold for similarity
                    relevant_files.append(file)

        # Sort by relevance score, most relevant first
        relevant_files.sort(key=lambda f: calculate_relevance_score(f, current_file), reverse=True)

        return relevant_files
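The example above calls `calculate_relevance_score` without showing how the signals might be combined. One possible sketch, assuming a simple weighted sum of three of the signals listed earlier (direct import, semantic similarity, recency), is shown below. The weights, the 30-minute half-life, and the `FileSignals` type are assumptions chosen for illustration; usage-pattern analysis is left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class FileSignals:
    path: str
    is_imported: bool            # directly imported by the current file
    similarity: float            # semantic similarity in [0, 1]
    minutes_since_opened: float  # recency signal


# Assumed weights; they sum to 1 so the score stays in [0, 1].
WEIGHTS = {"import": 0.5, "similarity": 0.3, "recency": 0.2}


def relevance_score(signals: FileSignals, half_life_minutes: float = 30.0) -> float:
    """Weighted sum of signals; recency decays by half every `half_life_minutes`."""
    recency = 0.5 ** (signals.minutes_since_opened / half_life_minutes)
    return (
        WEIGHTS["import"] * (1.0 if signals.is_imported else 0.0)
        + WEIGHTS["similarity"] * signals.similarity
        + WEIGHTS["recency"] * recency
    )


candidates = [
    FileSignals("utils/format.js", False, 0.4, 600),
    FileSignals("api/client.js", True, 0.5, 30),
    FileSignals("components/OrderTable.jsx", False, 0.9, 0),
]
ranked = sorted(candidates, key=relevance_score, reverse=True)
print([c.path for c in ranked])
```

With these weights a directly imported file outranks a merely similar one, which may or may not suit a given codebase; the weights are the part most worth tuning.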

3. Combining Code Context with Documentation

Effective context generation includes more than code; it also incorporates relevant documentation:

    # Example: Enriching context with documentation
    def enrich_context_with_documentation(context, code_elements):
        enriched_context = context

        # Extract key elements from the code (functions, classes, imports)
        for element in code_elements:
            # Query vector database for relevant documentation
            docs = vector_db.query(element.name, top_k=1)
            if docs:
                # Add documentation as comments
                doc_comment = f"\n\n// Documentation for {element.name}:\n// {docs[0].content}\n"
                enriched_context += doc_comment

        return enriched_context
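One detail the example glosses over is multi-line documentation: prefixing only the first line with `//` leaves the remaining lines uncommented. The sketch below shows one way to handle that, using a plain dictionary in place of a vector database. The `docs` contents and the helper names are hypothetical.

```python
def as_comment_block(title: str, body: str, prefix: str = "// ") -> str:
    """Prefix the title and every line of the body so the whole block reads as comments."""
    lines = [f"{prefix}{title}"]
    lines += [f"{prefix}{line}".rstrip() for line in body.strip().splitlines()]
    return "\n".join(lines)


def enrich(context: str, names: list[str], docs: dict[str, str]) -> str:
    """Append a comment block for each name that has documentation; skip the rest."""
    blocks = [
        as_comment_block(f"Documentation for {name}:", docs[name])
        for name in names
        if name in docs
    ]
    return "\n\n".join([context, *blocks])


# Hypothetical documentation store; a real system would query an index instead.
docs = {
    "useApiQuery": "Fetches JSON from the project API.\nReturns { data, isLoading, error }.",
}
enriched = enrich("function UserTable() {}", ["useApiQuery", "unknownHelper"], docs)
print(enriched)
```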

4. Handling User Queries Effectively

The developer’s explicit query or comment provides crucial intent information:

    // Developer's initial code
    function ProductConfiguration() {
      // Need to implement state that syncs between components and persists to local storage
      return (
        <div>
          {/* Configuration UI will go here */}
        </div>
      );
    }

    // Roo Code suggestion
    function ProductConfiguration() {
      // Using the project's custom hook pattern for synced, persistent state
      const { state, updateState } = useSharedState('productConfig', {
        size: 'medium',
        color: 'blue',
        quantity: 1,
        options: []
      });

      // Local component state for unsaved changes
      const [localState, setLocalState] = useState(state);

      // Handle local changes
      const handleChange = (field, value) => {
        setLocalState(prev => ({
          ...prev,
          [field]: value
        }));
      };

      // Sync changes to shared state (and thus to localStorage)
      const saveChanges = () => {
        updateState(localState);
      };

      // Discard local changes
      const discardChanges = () => {
        setLocalState(state);
      };

      // Check if there are unsaved changes
      const hasUnsavedChanges = !isEqual(state, localState);

      return (
        <ConfigPanel>
          <Button
            variant="primary"
            onClick={saveChanges}
            disabled={!hasUnsavedChanges}
          >
            Save Configuration
          </Button>
          <Button
            variant="secondary"
            onClick={discardChanges}
            disabled={!hasUnsavedChanges}
          >
            Discard Changes
          </Button>
          {hasUnsavedChanges && (
            <UnsavedChangesWarning />
          )}
        </ConfigPanel>
      );
    }

This suggestion:

- Uses the project’s existing `useSharedState` hook pattern

- Implements local state for unsaved changes (a pattern used elsewhere in the project)

- Includes the project’s standard UI components (`ConfigPanel`, `Button`)

- Adds an `UnsavedChangesWarning` component that exists in the project

- Implements the exact persistence mechanism already established in the codebase

Without proper context generation, the AI might have suggested a generic solution using React Context or Redux, which would have required significant adaptation to fit the project’s patterns.
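To make the idea of capturing intent concrete, here is a rough sketch that collects `//` comments near the cursor and places them at the top of the prompt. The function names, the five-line window, and the "Developer intent" header are assumptions for illustration; block comments and JSX comments are not handled.

```python
import re

# Matches a line that is only a `//` comment and captures its text.
COMMENT_RE = re.compile(r"^\s*//\s?(.*)$")


def nearest_comment_intent(source: str, cursor_line: int, window: int = 5) -> str:
    """Join the text of `//` comments within `window` lines of the cursor (0-based)."""
    lines = source.splitlines()
    start = max(0, cursor_line - window)
    end = min(len(lines), cursor_line + window + 1)
    found = []
    for line in lines[start:end]:
        match = COMMENT_RE.match(line)
        if match and match.group(1).strip():
            found.append(match.group(1).strip())
    return " ".join(found)


def build_prompt(context: str, intent: str) -> str:
    """Put the intent first so it survives if the context is trimmed from the end."""
    header = f"// Developer intent: {intent}\n\n" if intent else ""
    return header + context


source = (
    "function ProductConfiguration() {\n"
    "  // Need to implement state that syncs between components\n"
    "  return null;\n"
    "}"
)
intent = nearest_comment_intent(source, cursor_line=2)
print(build_prompt(source, intent))
```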

Context Generation Strategies for Different Scenarios

Different development scenarios require different context generation strategies:

1. New Feature Development

When a developer is adding a new feature, the context should include:

- Similar features implemented elsewhere

- Project patterns and conventions

- Relevant utility functions and helpers

- Documentation for related APIs

2. Bug Fixing

For debugging scenarios, the context should prioritize:

- Error handling patterns

- Test cases for similar functionality

- Documentation about edge cases

- Related error reports or issues

3. Code Refactoring

When refactoring, the context should focus on:

- Best practices from the codebase

- Performance optimization patterns

- Architectural documentation

- Dependencies and potential impact areas

4. Learning New APIs or Libraries

When a developer is working with unfamiliar tools, the context should emphasize:

- Documentation for the API or library

- Example usage within the project

- Common patterns and idioms

- Potential pitfalls and workarounds
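One way to encode these scenario-specific strategies is a table that splits the token budget across context sources. The sketch below assumes the four scenarios above; the source names and percentage splits are invented for illustration and would need tuning against a real codebase.

```python
# Percentage of the token budget given to each context source, per scenario.
# All names and numbers here are illustrative assumptions.
STRATEGIES = {
    "new_feature": {"similar_code": 40, "conventions": 20, "utilities": 20, "api_docs": 20},
    "bug_fix": {"error_handling": 30, "tests": 30, "edge_case_docs": 20, "issues": 20},
    "refactor": {"best_practices": 25, "performance": 25, "architecture_docs": 25, "dependents": 25},
    "learning": {"library_docs": 40, "project_examples": 30, "idioms": 20, "pitfalls": 10},
}


def allocate_budget(scenario: str, max_tokens: int) -> dict[str, int]:
    """Split `max_tokens` across sources using integer percentages (rounds down)."""
    shares = STRATEGIES[scenario]
    return {source: max_tokens * percent // 100 for source, percent in shares.items()}


print(allocate_budget("bug_fix", 4000))
```

Keeping the strategy as data rather than branching logic makes it easy to add a scenario or adjust a split without touching the assembly code.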

Technical Implementation of Context Generation

Building an effective context generation system involves several technical components:

1. Code Analysis Engine

    # Example: Code analysis for context generation
    def analyze_code(file_content):
        # Parse the code to extract structure
        tree = parse_to_ast(file_content)

        # Extract key elements
        imports = extract_imports(tree)
        functions = extract_functions(tree)
        classes = extract_classes(tree)
        variables = extract_variables(tree)

        # Identify dependencies
        dependencies = []
        for imp in imports:
            dependencies.append(resolve_import_path(imp))

        # Identify usage patterns
        patterns = identify_patterns(tree)

        return {
            'imports': imports,
            'functions': functions,
            'classes': classes,
            'variables': variables,
            'dependencies': dependencies,
            'patterns': patterns
        }
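The helper functions in the example are placeholders. As a runnable illustration of the same extraction step, the sketch below uses Python's standard `ast` module, which only parses Python source; a JavaScript or TypeScript project would need a parser for that language instead. The function name and the sample source are assumptions.

```python
import ast


def analyze_python_source(source: str) -> dict[str, list[str]]:
    """Collect imported modules, function names, and class names from Python source."""
    tree = ast.parse(source)
    imports, functions, classes = [], [], []
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            imports.extend(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom):
            # `module` is None for relative imports such as `from . import x`
            imports.append(node.module or ".")
        elif isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            functions.append(node.name)
        elif isinstance(node, ast.ClassDef):
            classes.append(node.name)
    # Sort so the result does not depend on traversal order
    return {
        "imports": sorted(imports),
        "functions": sorted(functions),
        "classes": sorted(classes),
    }


sample = '''
import os
from collections import defaultdict

class Indexer:
    def add(self, path):
        return os.path.basename(path)

def main():
    return Indexer()
'''
result = analyze_python_source(sample)
print(result)
```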

2. Project Structure Indexing

    # Example: Project structure indexing
    import os

    def index_project(project_root):
        project_index = {
            'files': [],
            'dependencies': {},
            'patterns': set()
        }

        for root, dirs, files in os.walk(project_root):
            # Skip installed packages; indexing them would swamp the project's own code
            dirs[:] = [d for d in dirs if d != 'node_modules']

            for file in files:
                if file.endswith(('.js', '.jsx', '.ts', '.tsx')):
                    file_path = os.path.join(root, file)
                    with open(file_path, 'r', encoding='utf-8') as f:
                        content = f.read()

                    analysis = analyze_code(content)
                    project_index['files'].append({
                        'path': file_path,
                        'content': content,
                        'analysis': analysis
                    })

                    # Update dependency graph (dependency -> files that import it)
                    for dep in analysis['dependencies']:
                        if dep not in project_index['dependencies']:
                            project_index['dependencies'][dep] = []
                        project_index['dependencies'][dep].append(file_path)

                    # Update patterns
                    project_index['patterns'].update(analysis['patterns'])

        return project_index
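The dependency map built during indexing points from each dependency to the files that import it. A sketch of how that reverse map could be queried, for example to find every file affected by a change, is shown below. The file paths and function names are hypothetical, and the import lists are given directly rather than parsed from source.

```python
from collections import defaultdict, deque


def build_dependents(imports_by_file: dict[str, list[str]]) -> dict[str, set[str]]:
    """Invert file -> imports into dependency -> files that import it."""
    dependents = defaultdict(set)
    for path, deps in imports_by_file.items():
        for dep in deps:
            dependents[dep].add(path)
    return dependents


def transitive_dependents(target: str, dependents: dict[str, set[str]]) -> set[str]:
    """Breadth-first walk over the reverse graph to find all files affected by `target`."""
    seen = set()
    queue = deque([target])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, ()):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


# Hypothetical project layout
imports_by_file = {
    "src/api/client.js": [],
    "src/hooks/useApiQuery.js": ["src/api/client.js"],
    "src/components/UserTable.jsx": ["src/hooks/useApiQuery.js"],
    "src/components/Footer.jsx": [],
}
dependents = build_dependents(imports_by_file)
print(sorted(transitive_dependents("src/api/client.js", dependents)))
```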

3. Semantic Search for Relevant Code

    # Example: Semantic search for relevant code
    def find_semantically_similar_code(query_code, project_index, top_k=5):
        # Generate embedding for query code
        query_embedding = embedding_model.encode(query_code)

        # Compare with all files
        similarities = []
        for file in project_index['files']:
            file_embedding = embedding_model.encode(file['content'])
            similarity = cosine_similarity(query_embedding, file_embedding)
            similarities.append((file, similarity))

        # Sort by similarity (descending)
        similarities.sort(key=lambda x: x[1], reverse=True)

        # Return top-k most similar files
        return [file for file, _ in similarities[:top_k]]
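The example above re-encodes every file on each query, which gets expensive as a project grows. The sketch below shows one possible alternative that embeds files once at index time. It substitutes a toy bag-of-words vector for a real embedding model, so the similarity scores are only illustrative; `CodeIndex` and the sample files are assumptions.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: counts of lowercase word-like tokens (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z_]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class CodeIndex:
    def __init__(self, files: dict[str, str]):
        # Embed each file once at index time instead of once per query
        self.vectors = {path: embed(content) for path, content in files.items()}

    def search(self, query: str, top_k: int = 5) -> list[str]:
        query_vec = embed(query)
        scored = [(cosine_similarity(query_vec, vec), path) for path, vec in self.vectors.items()]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [path for _, path in scored[:top_k]]


index = CodeIndex({
    "OrderTable.jsx": "const { data, isLoading } = useApiQuery('/orders')",
    "theme.js": "export const colors = { primary: 'blue' }",
})
print(index.search("useApiQuery('/users') isLoading", top_k=1))
```

A production system would typically also re-embed a file when it changes and store vectors in a dedicated index rather than a dictionary.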

4. Context Assembly and Optimization

    # Example: Context assembly
    def assemble_context(current_file, cursor_position, project_index, user_query=None):
        # Start with the current file
        context = current_file

        # Find relevant files (pass the indexed file list, not the whole index)
        relevant_files = find_relevant_files(current_file, project_index['files'])

        # Add relevant sections from other files
        for file in relevant_files:
            relevant_sections = extract_relevant_sections(file, current_file)
            context += f"\n\n// From {file['path']}:\n{relevant_sections}"

        # Add documentation if available
        doc_context = find_relevant_documentation(current_file, project_index)
        if doc_context:
            context += f"\n\n// Relevant documentation:\n{doc_context}"

        # Add user query if provided
        if user_query:
            context += f"\n\n// User query: {user_query}"

        # Optimize for token limit
        optimized_context = optimize_for_token_limit(context, max_tokens=4000)

        return optimized_context

Better Context Means Better Code: The Business Case

Investing in sophisticated context generation delivers measurable business value:

1. Development Speed

Teams using context-aware AI assistants report:

- 25-40% reduction in time spent implementing new features

- 30-50% faster bug resolution

- 20-35% acceleration in learning new codebases

2. Code Quality

Improved context leads to better code quality:

- 40-60% reduction in style inconsistencies

- 30-45% fewer bugs related to misunderstanding project patterns

- 25-35% improvement in code review pass rates

3. Developer Experience

Context-aware assistance enhances the developer experience:

- Reduced context switching between documentation and code

- Lower cognitive load when working across multiple components

- Faster onboarding for new team members

- More satisfying AI interactions with fewer irrelevant suggestions

4. Knowledge Transfer

Effective context generation facilitates knowledge transfer:

- Project patterns and conventions are automatically reinforced

- Best practices are consistently applied

- Tribal knowledge becomes accessible to all team members

Let Us Analyze Your Development Workflow

At Services Ground, we specialize in implementing context-aware AI solutions that transform development productivity. Our team of experts can help you:

- Analyze your existing development workflow to identify optimization opportunities

- Implement custom context generation systems tailored to your codebase

- Integrate with your preferred IDE and development tools

- Train your team to maximize the benefits of context-aware AI assistance

Our clients typically see a 30-50% increase in development velocity within the first three months of implementation, with continued improvements as the system learns from your codebase.

Let us analyze your development workflow and show you how context-aware AI can help.