Introduction — From Language to Action

Agentic AI represents the next evolution of artificial intelligence: systems that don’t just generate text, but act.

To operate autonomously, these agents need the ability to call external tools, invoke APIs, and return structured data that other systems can understand.

That’s where function calling and structured outputs come in.

These capabilities allow large language models (LLMs) to connect reasoning with real-world execution — from fetching weather data to managing calendars or querying databases.

This article explores how tool use works inside Agentic AI, why structured outputs make it reliable, and how platforms and frameworks such as the OpenAI API, LangChain, Google Gemini, Semantic Kernel, and CrewAI implement it in practice.

What Is Tool Use in Agentic AI?

Tool use is the process by which an agent moves beyond conversation and takes action through external functions or APIs.

For example, suppose you ask an AI assistant:

“What’s the weather in Paris?”

Instead of guessing, the assistant can call a weather API through a defined function such as get_weather(location) and report the temperature the API returns.

This turns the LLM into a reasoning layer: it decides which function to call and with what arguments, the host application executes the call, and the model interprets the result and responds with a structured, reliable answer.

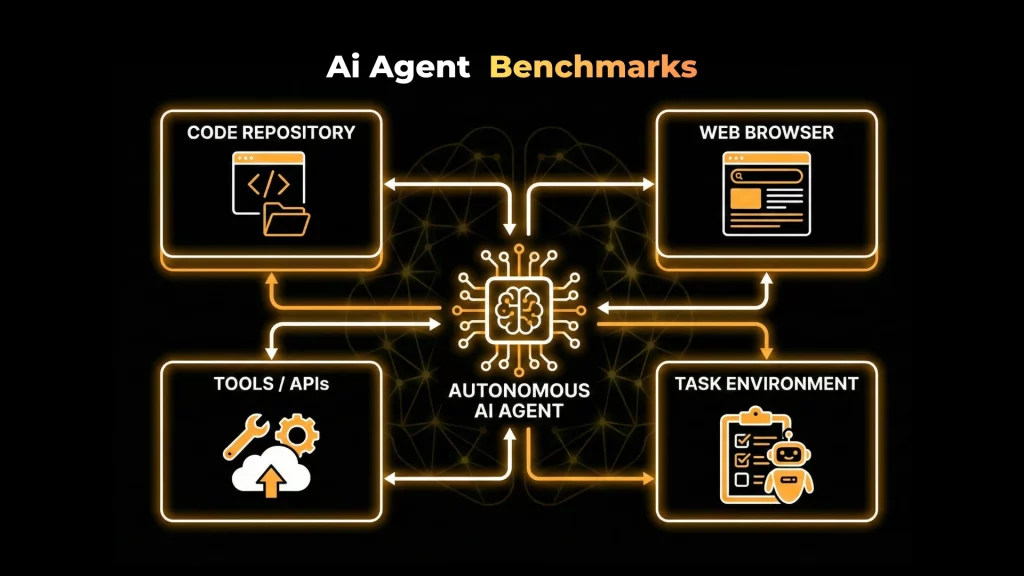

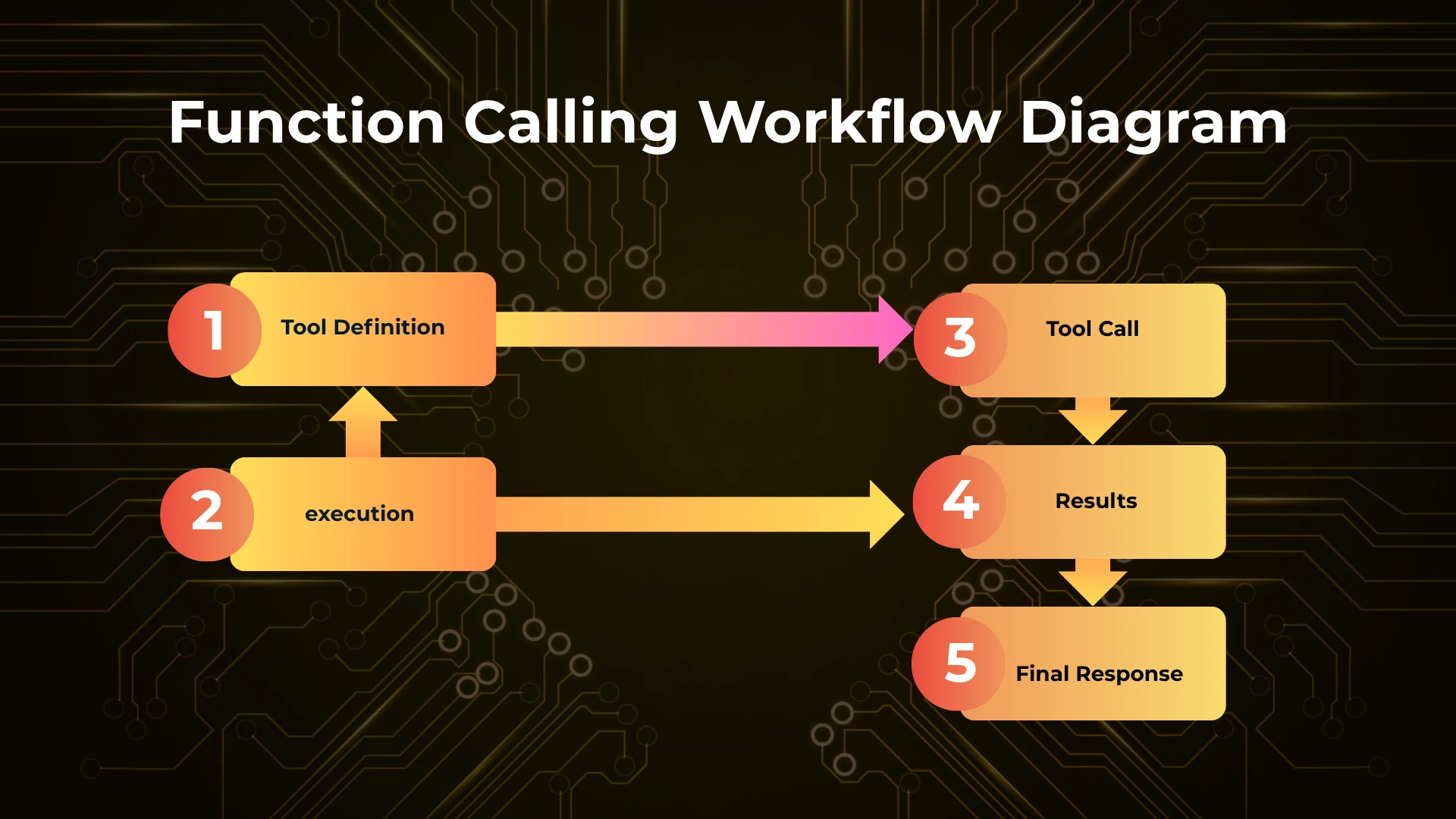

The Tool Use Workflow

The general sequence is:

- Define a Tool: The developer gives the agent a set of callable functions, each described by a name, a description, and a JSON schema for its parameters.

- Reasoning & Selection: Based on context, the LLM decides whether a tool is needed and which one.

- Function Invocation: The model emits a call request that names the tool and supplies structured (JSON) arguments.

- Execution: The host application runs the function or API and returns the result to the model.

- Structured Output: The model incorporates the result and delivers a final answer, which can itself be validated against a schema.
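The first step, defining a tool, can be partly automated. As a sketch, assuming plain Python type hints and a hypothetical helper named `function_to_tool` (not part of any SDK; frameworks such as LangChain ship their own equivalents), a function signature can be turned into the JSON schema description the model sees:

```python
import inspect
from typing import Literal, get_args, get_origin

# Map simple Python annotations to JSON Schema type names (assumption: only these are needed)
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def function_to_tool(fn, description):
    """Hypothetical helper: build a flat, Responses-style tool dict from a signature."""
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        annotation = param.annotation
        if get_origin(annotation) is Literal:
            # Literal["a", "b"] becomes a string enum (assumes string literals)
            properties[name] = {"type": "string", "enum": list(get_args(annotation))}
        else:
            # Unknown or missing annotations fall back to "string"
            properties[name] = {"type": _JSON_TYPES.get(annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # parameters without defaults are required
    return {
        "type": "function",
        "name": fn.__name__,
        "description": description,
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def get_weather(location: str, unit: Literal["celsius", "fahrenheit"] = "celsius") -> dict:
    return {"temperature": 14, "unit": unit}  # stub data, no real API call


tool = function_to_tool(get_weather, "Get the current temperature in a given city.")
print(tool["parameters"])
```

A fuller helper would also pull per-parameter descriptions from the docstring, since those descriptions help the model choose arguments correctly.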

How Function Calling Works

At its core, function calling bridges reasoning and execution.

The model doesn’t directly run code; instead, it returns a JSON-formatted specification of the function it wants to call, which the host system executes.
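On the host side, executing that specification is mostly a lookup. The sketch below assumes the call arrives as a function name plus a JSON-encoded argument string (the shape the OpenAI API uses); `TOOL_REGISTRY` and `execute_tool_call` are hypothetical names for illustration. Unknown tools and malformed arguments are returned as errors rather than raised, so the model can see what went wrong and try again.

```python
import json


def get_weather(location, unit="celsius"):
    # Stub standing in for a real weather API
    return {"location": location, "temperature": 14, "unit": unit}


# Only functions listed here can ever be executed on the model's behalf
TOOL_REGISTRY = {"get_weather": get_weather}


def execute_tool_call(name, arguments_json):
    """Run one model-requested call and return a JSON string for the model to read."""
    fn = TOOL_REGISTRY.get(name)
    if fn is None:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        args = json.loads(arguments_json)  # arguments arrive as a JSON string
        result = fn(**args)
    except (json.JSONDecodeError, TypeError) as exc:
        # Malformed JSON, a non-object payload, or wrong/missing arguments
        return json.dumps({"error": str(exc)})
    return json.dumps(result)


# What the model might emit: a function name plus JSON arguments (illustrative values)
print(execute_tool_call("get_weather", '{"location": "Paris"}'))
print(execute_tool_call("delete_files", "{}"))
```

Keeping an explicit registry, rather than looking functions up by name dynamically, means the model can only trigger code the developer chose to expose.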

The simplified example below demonstrates this process with the OpenAI Python SDK and its Responses API.

Example: Calling an External Function

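```python
import json

from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

# Step 1: Define available tools (the Responses API uses a flat tool format)
tools = [
    {
        "type": "function",
        "name": "get_weather",
        "description": "Get the current temperature in a given city.",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {"type": "string", "description": "City name"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["location"],
        },
    }
]


# Step 2: Define the function the host runs (a stub with fixed values, no unit conversion)
def get_weather(location, unit="celsius"):
    if location.lower() == "paris":
        return {"temperature": 14, "unit": unit}
    return {"temperature": 25, "unit": unit}


# Step 3: Run a reasoning + function-call cycle
input_messages = [
    {"role": "system", "content": "You are a helpful weather assistant."},
    {"role": "user", "content": "What’s the weather in Paris?"},
]
response = client.responses.create(
    model="gpt-4o-2024-08-06",
    input=input_messages,
    tools=tools,
)

# Step 4: Execute each function call the model requested
for item in response.output:
    if item.type == "function_call" and item.name == "get_weather":
        args = json.loads(item.arguments)  # arguments arrive as a JSON string
        result = get_weather(**args)
        input_messages.append(item)  # keep the model's call in the context
        input_messages.append({
            "type": "function_call_output",
            "call_id": item.call_id,
            "output": json.dumps(result),
        })

# Step 5: Return the results so the model can write the final answer
final = client.responses.create(
    model="gpt-4o-2024-08-06",
    input=input_messages,
    tools=tools,
)
print(final.output_text)
```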

What happens:

- The model recognizes it needs external data.

- It selects the get_weather function and returns structured arguments in JSON.

- The host application runs that function and passes the result back to the model, which uses it to compose the final answer.

Structured Outputs — Reliable Responses for Autonomous Agents

While tool calling allows action, structured outputs ensure those actions produce consistent, machine-readable results.

Instead of free-form text, the model returns JSON that conforms to a schema you define, typically with Pydantic (Python) or Zod (JavaScript/TypeScript), and the SDK parses that JSON into a typed object.
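Pydantic and Zod perform this validation for you. To make the step concrete without third-party dependencies, here is a standard-library sketch that checks a model's JSON reply against a dataclass. `parse_as` is a hypothetical helper, and it only handles flat fields of type `str`, `int`, and `list[str]`, far less than Pydantic supports.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class CalendarEvent:
    name: str
    date: str
    participants: list[str]


def _matches(value, expected):
    # This sketch supports only str, int, and list[str]
    if expected is str:
        return isinstance(value, str)
    if expected is int:
        return isinstance(value, int) and not isinstance(value, bool)
    if expected == list[str]:
        return isinstance(value, list) and all(isinstance(v, str) for v in value)
    return False


def parse_as(cls, raw):
    """Parse raw JSON into cls; raises ValueError (incl. JSONDecodeError) on any mismatch."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    expected = {f.name: f.type for f in fields(cls)}
    missing = expected.keys() - data.keys()
    extra = data.keys() - expected.keys()
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)} extra={sorted(extra)}")
    for key, typ in expected.items():
        if not _matches(data[key], typ):
            raise ValueError(f"field {key!r} has the wrong type")
    return cls(**data)


good = '{"name": "Science Fair", "date": "2024-08-06", "participants": ["Alice", "Bob"]}'
print(parse_as(CalendarEvent, good))

try:
    parse_as(CalendarEvent, '{"name": "Science Fair", "date": "2024-08-06"}')
except ValueError as err:
    print("Rejected:", err)
```

Rejecting extra keys as well as missing ones mirrors the strict mode of structured outputs, where the schema disallows additional properties.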

Why Structured Outputs Matter

- Reliable type safety: Every output conforms to a predefined format, so downstream code no longer has to guess at its shape.

- Explicit refusals: If the model cannot comply safely, it returns a refusal rather than generating unsafe data.

- Simpler prompting: There is no need for verbose, manually enforced formatting instructions; the schema handles it.

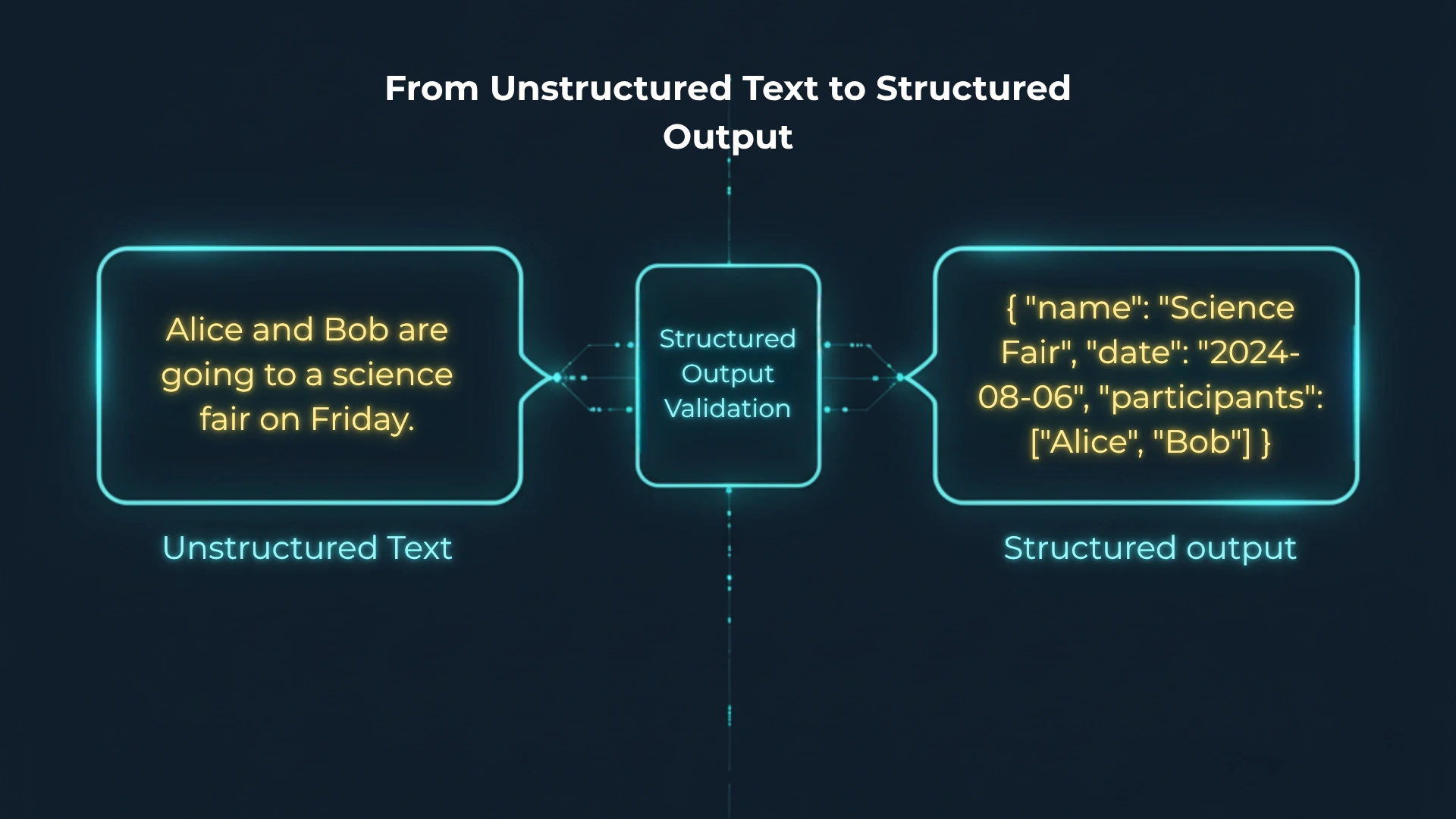

Example: Structured Output for an Event Extractor

```python
from openai import OpenAI
from pydantic import BaseModel

client = OpenAI()


# Define a Pydantic schema for the structured output
class CalendarEvent(BaseModel):
    name: str
    date: str
    participants: list[str]


# Ask the model to extract data matching the schema
response = client.responses.parse(
    model="gpt-4o-2024-08-06",
    input=[
        {"role": "system", "content": "Extract the event information."},
        {"role": "user", "content": "Alice and Bob are going to a science fair on Tuesday, 2024-08-06."},
    ],
    text_format=CalendarEvent,
)

event = response.output_parsed  # a CalendarEvent instance (None if the model refused)
print(event.model_dump_json(indent=2))
```

Structured Output Result:

```json
{
  "name": "Science Fair",
  "date": "2024-08-06",
  "participants": ["Alice", "Bob"]
}
```

The agent now returns data in a guaranteed, machine-readable shape that can be stored in a database, sent to a calendar API, or passed to a planning system.

Handling Refusals & Safety Checks

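Structured outputs also make refusals programmatically detectable. When the model declines a request for safety reasons, the response contains a refusal message instead of schema-conforming data:

```python
# A refusal comes back as a "refusal" content part instead of parsed data
for item in response.output:
    if item.type == "message":
        for part in item.content:
            if part.type == "refusal":
                print("Model refused:", part.refusal)
```

Checking for refusals lets enterprise systems, where trust and traceability matter, handle declined requests explicitly instead of failing on missing data.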

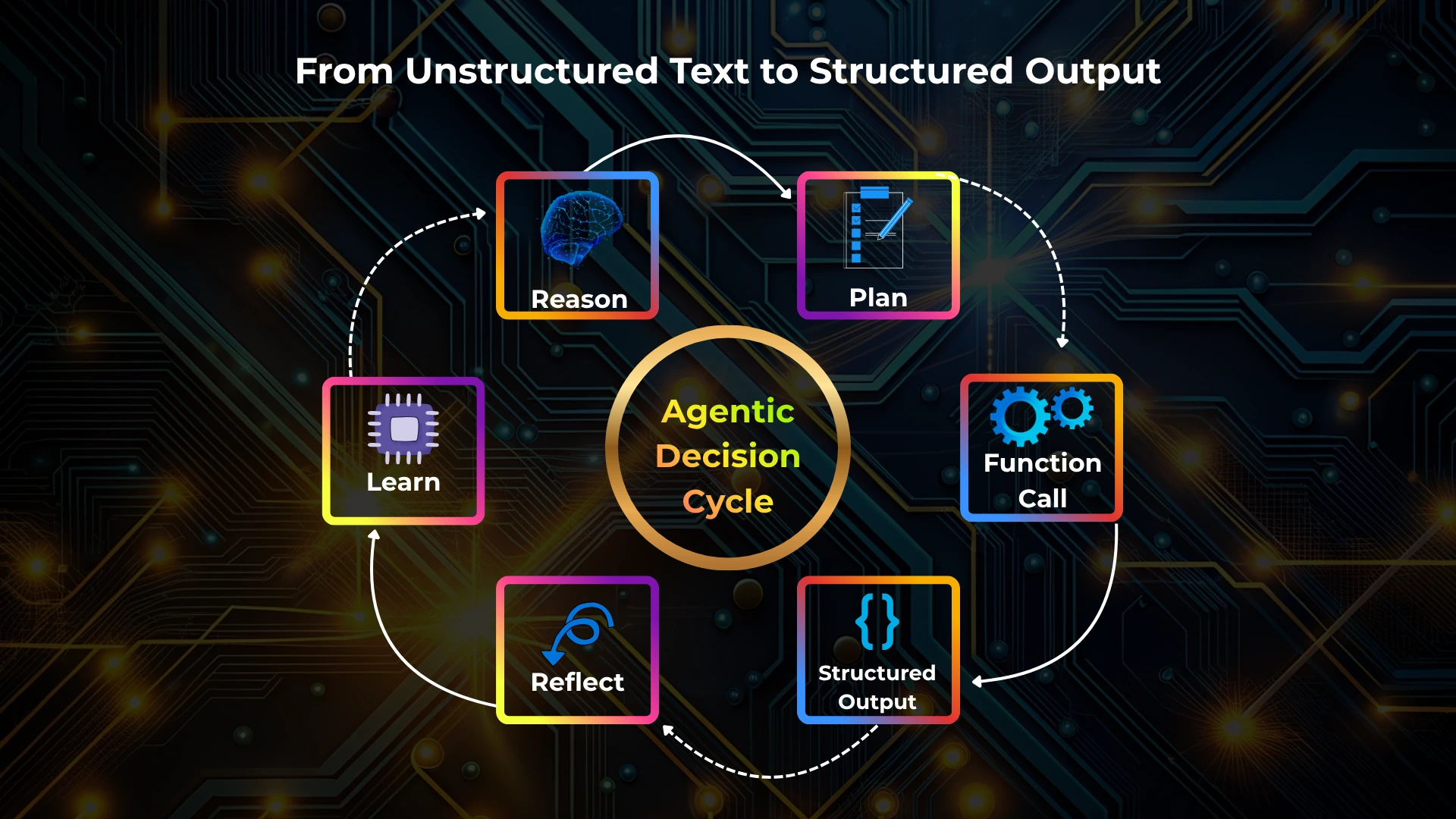

Connecting Reasoning to Tool Use

Reasoning patterns like ReAct, Reflexion, and Plan-and-Execute determine when, and why, an agent calls a tool.

For example:

- ReAct: Think → Act → Observe → Repeat.

- Reflexion: Think → Act → Reflect → Improve.

- Plan-and-Execute: Plan all steps → Execute each step → Re-plan if needed.

Agents often generate a “thought” trace, then call a function when they recognize a task (like retrieval, computation, or data transformation) that requires external assistance.

Reasoning-to-Action Workflow

- Reason: Identify the goal.

- Plan: Choose the right function/tool.

- Act: Call the function via API.

- Observe: Receive structured output.

- Reflect: Evaluate success and update context.
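These steps form a loop. The sketch below runs that loop with a scripted stand-in for the LLM (`scripted_model` is hypothetical; a real agent would call a model API at that point), so the control flow can be read and run offline. Each turn, the "model" either requests a tool or gives a final answer; the host executes the tool and appends the observation to the context for the next turn. A step limit keeps a confused agent from looping forever.

```python
import json


def get_weather(location):
    # Stub tool: fixed data instead of a real weather API
    return {"location": location, "temperature": 14, "unit": "celsius"}


TOOLS = {"get_weather": get_weather}


def scripted_model(context):
    """Hypothetical stand-in for an LLM: picks the next action from the context so far."""
    observations = [m for m in context if m["role"] == "tool"]
    if not observations:
        # Reason + Plan: no data yet, so request the weather tool
        return {"thought": "I need current weather data.",
                "action": {"name": "get_weather", "arguments": {"location": "Paris"}}}
    # Reflect: the latest observation answers the question, so finish
    data = json.loads(observations[-1]["content"])
    return {"thought": "I have what I need.",
            "final": f"It is {data['temperature']} °C in {data['location']}."}


def run_agent(question, model, max_steps=5):
    context = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        step = model(context)
        context.append({"role": "assistant", "content": step["thought"]})
        if "final" in step:
            return step["final"], context
        action = step["action"]
        # Act: the host executes the requested tool
        result = TOOLS[action["name"]](**action["arguments"])
        # Observe: feed the structured result back into the context
        context.append({"role": "tool", "content": json.dumps(result)})
    return "Stopped: step limit reached.", context


answer, trace = run_agent("What's the weather in Paris?", scripted_model)
print(answer)
for message in trace:
    print(message["role"], "->", message["content"])
```

Keeping the full trace (thoughts, tool calls, observations) in one list is what later makes the run inspectable and auditable.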

Frameworks like LangGraph model this reasoning chain as an explicit graph of steps and transitions, while Semantic Kernel adds controlled execution and policy hooks around function invocation.

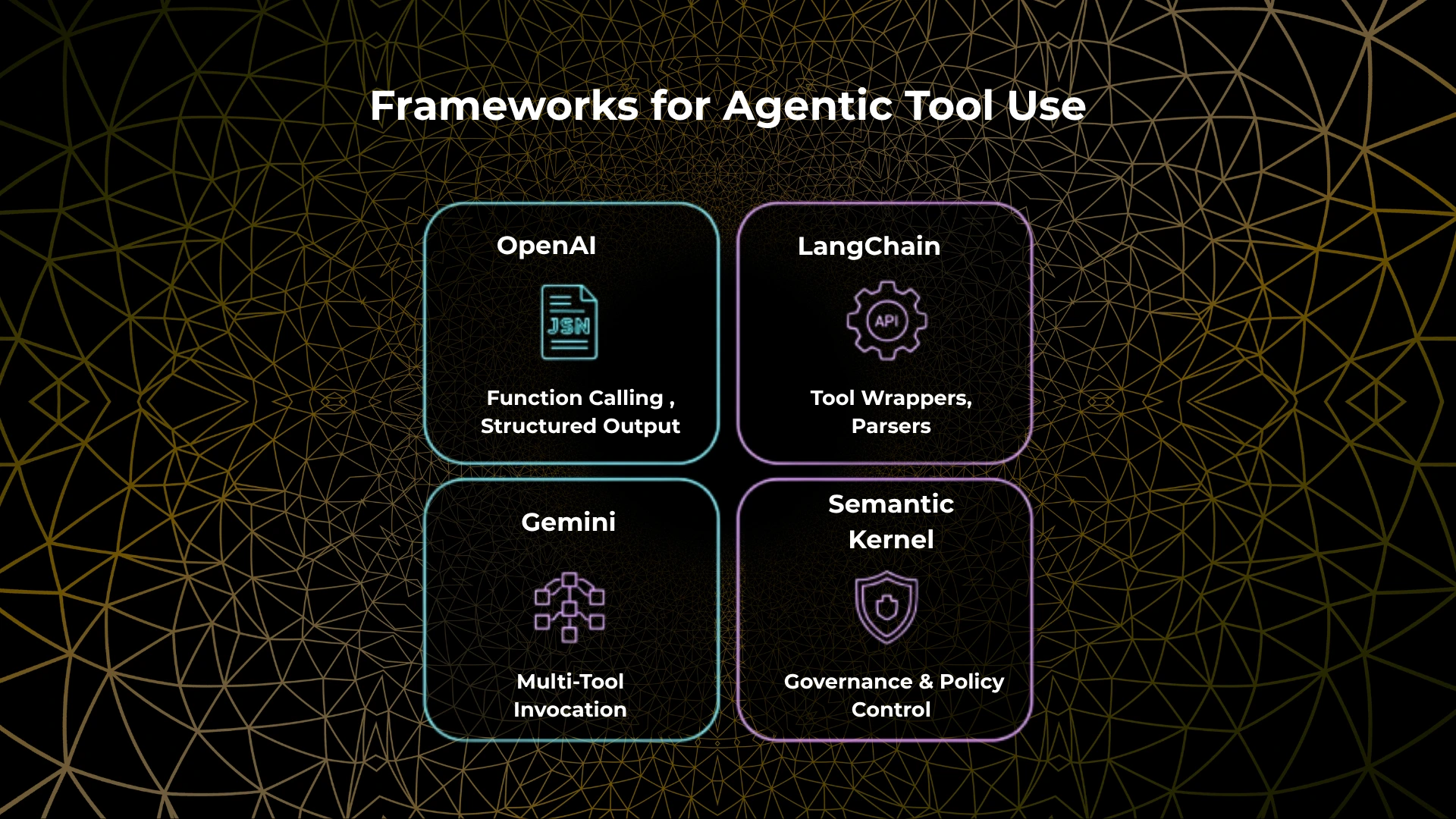

Frameworks Supporting Tool Use and Structured Outputs

1. OpenAI Function Calling

OpenAI’s function calling uses JSON schema definitions to connect GPT-4 and GPT-5 models with external APIs.

It supports:

- Multiple, including parallel, tool calls in a single turn

- Structured outputs with strict schema adherence

- Schema validation and programmatically detectable refusals

Documentation: OpenAI Function Calling Guide

2. LangChain

LangChain provides a modular API for integrating tools and handling structured outputs.

It includes:

- StructuredOutputParser for automatic schema parsing

- Integration with ReAct and Reflexion reasoning agents

- Memory support for chaining multiple tool calls

Example (LangChain Python):

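```python
from langchain.output_parsers import ResponseSchema, StructuredOutputParser

# Describe the fields the model must return
response_schemas = [
    ResponseSchema(name="city", description="Name of the city"),
    ResponseSchema(name="temperature", description="Current temperature in Celsius"),
]
parser = StructuredOutputParser.from_response_schemas(response_schemas)

# Insert these instructions into the prompt, then call parser.parse(model_reply) to get a dict
format_instructions = parser.get_format_instructions()
```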

3. Google Gemini Function Calling

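Gemini models also support function calling with structured, JSON-based invocations.

Developers declare callable functions (a name, a description, and a JSON Schema-style parameters object) through the Gemini API or Vertex AI SDKs; the model responds with structured function-call requests that the application executes, connecting multimodal reasoning with API calls.

Use case: calling maps APIs, spreadsheet tools, or Google Cloud data sources directly through structured reasoning.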
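Because OpenAI and Gemini both describe a function with a name, a description, and a JSON Schema-style `parameters` object, one tool definition can often be reused across them. A minimal sketch, assuming the flat function-tool format of OpenAI's Responses API as input; `to_gemini_declaration` is a hypothetical helper, not part of either SDK. Gemini has historically accepted only a subset of JSON Schema, so more complex schemas may still need adjusting.

```python
def to_gemini_declaration(openai_tool):
    """Hypothetical helper: convert a flat OpenAI function tool into a Gemini-style declaration."""
    if openai_tool.get("type") != "function":
        raise ValueError("only function tools can be converted")
    # Keep only the fields both formats share; OpenAI-specific keys such as "strict" are dropped
    declaration = {
        "name": openai_tool["name"],
        "description": openai_tool.get("description", ""),
    }
    if "parameters" in openai_tool:
        declaration["parameters"] = openai_tool["parameters"]
    return declaration


weather_tool = {
    "type": "function",
    "name": "get_weather",
    "description": "Get the current temperature in a given city.",
    "parameters": {
        "type": "object",
        "properties": {"location": {"type": "string", "description": "City name"}},
        "required": ["location"],
    },
    "strict": False,
}

declarations = [to_gemini_declaration(weather_tool)]
print(declarations)
```

With the google-genai Python SDK, a list like this would typically be wrapped as `types.Tool(function_declarations=declarations)` and passed in the request config; check the current Gemini documentation for the exact request shape.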

4. Semantic Kernel

Microsoft’s Semantic Kernel framework integrates function calling with enterprise-grade governance.

It supports:

- Tool invocation within defined safety policies, for example through filters that inspect or block function calls

- Memory persistence and context passing

- Human-in-the-loop validation for sensitive actions
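Semantic Kernel exposes this kind of control through its own filter APIs. Independently of any framework, the underlying idea can be sketched in a few lines: sensitive tools are wrapped so that an approver must say yes before they run. The `requires_approval` decorator, the refund tool, and the threshold policy are all hypothetical; the approver could prompt a person through a UI, but here it is an automatic policy so the example runs unattended.

```python
import functools


class ApprovalDenied(Exception):
    """Raised when a sensitive tool call is rejected."""


def requires_approval(approver):
    """Wrap a tool so every call needs approval first; approver(name, kwargs) -> bool."""
    def decorate(fn):
        @functools.wraps(fn)
        def wrapper(**kwargs):  # tool calls arrive as keyword arguments parsed from JSON
            if not approver(fn.__name__, kwargs):
                raise ApprovalDenied(f"{fn.__name__} was not approved")
            return fn(**kwargs)
        return wrapper
    return decorate


def make_policy_approver(limit):
    """Hypothetical policy: auto-approve small amounts; larger ones would go to a human."""
    def approver(name, kwargs):
        return kwargs.get("amount", 0) <= limit
    return approver


@requires_approval(make_policy_approver(limit=100))
def issue_refund(customer_id, amount):
    # Stub for a sensitive action with real-world side effects
    return {"customer_id": customer_id, "refunded": amount}


print(issue_refund(customer_id="c-42", amount=50))
try:
    issue_refund(customer_id="c-42", amount=5000)
except ApprovalDenied as err:
    print("Blocked:", err)
```

Raising an exception on denial keeps the decision explicit; the host can catch it and report the refusal back to the model as a tool result.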

5. CrewAI

CrewAI enables multi-agent collaboration where each agent uses different tools.

Example:

- Researcher → Search API

- Planner → Task scheduler

- Analyst → Structured output summarizer

Passing structured data between agents, for example through task outputs or shared memory, helps limit how far an error in one agent propagates to the next.

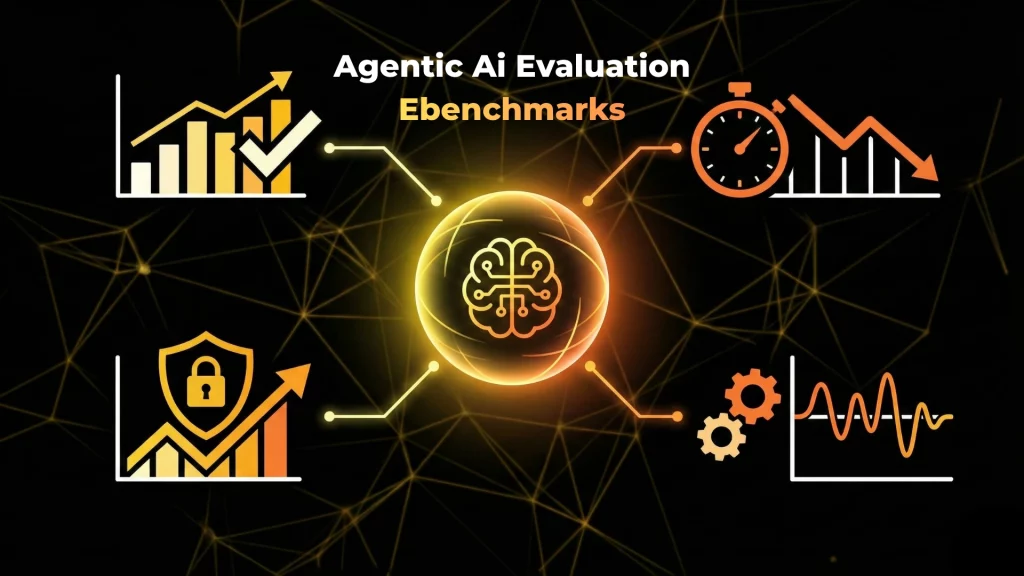

Why Structured Outputs Matter for Agentic AI

| Benefit | Description |
| --- | --- |
| Transparency | Each tool call and its result can be logged in structured form. |
| Governance | Policies define which tools an agent may access. |
| Scalability | Schemas can evolve alongside the systems that consume them, without retraining models. |
| Integration | Structured data exchanges easily with CRMs, analytics platforms, and external APIs. |
| Reliability | A fixed schema keeps malformed output from breaking downstream workflows. |
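The transparency and governance rows can be made concrete together. In this sketch, the agent names, the `ALLOWED_TOOLS` policy, and the `call_tool` helper are all hypothetical: each agent has an allowlist of tools, and every attempted call, allowed or not, is written as one JSON record so it can be searched and audited later.

```python
import json
import time


def search_web(query):
    return {"results": [f"stub result for {query}"]}  # stub tool


def send_email(to, body):
    return {"sent": True}  # stub tool with side effects


TOOLS = {"search_web": search_web, "send_email": send_email}

# Governance: which tools each agent may use
ALLOWED_TOOLS = {"researcher": {"search_web"}, "assistant": {"search_web", "send_email"}}

audit_log = []  # in production this might be a log file or database table


def call_tool(agent, name, arguments):
    allowed = name in ALLOWED_TOOLS.get(agent, set())
    record = {"ts": time.time(), "agent": agent, "tool": name,
              "arguments": arguments, "allowed": allowed}
    if allowed:
        record["result"] = TOOLS[name](**arguments)
    audit_log.append(json.dumps(record))  # Transparency: one structured record per call
    if not allowed:
        raise PermissionError(f"{agent} may not call {name}")
    return record["result"]


call_tool("researcher", "search_web", {"query": "tool calling"})
try:
    call_tool("researcher", "send_email", {"to": "a@example.com", "body": "hi"})
except PermissionError as err:
    print(err)
for line in audit_log:
    print(line)
```

Logging denied attempts as well as successful ones is deliberate: repeated attempts to reach a forbidden tool are often the most useful signal in an audit.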

Future of Tool Use in Agentic Systems

The frontier of Agentic AI lies in multi-function chaining and self-orchestrating systems.

Future models will dynamically compose APIs, reason about outcomes, and autonomously retry or validate structured results.
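Part of this is already practical today. A common pattern is to validate a structured result and, if it fails, retry with the validation error fed back to the model. The sketch below shows that loop with a hypothetical `flaky_model` stub that omits a required field on its first attempt; the helper names and the two-field schema are assumptions for illustration.

```python
import json

REQUIRED_KEYS = {"city", "temperature"}


def validate(raw):
    """Return (data, None) if raw is a JSON object with the required keys, else (None, error)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, f"invalid JSON: {exc}"
    if not isinstance(data, dict):
        return None, "expected a JSON object"
    missing = REQUIRED_KEYS - data.keys()
    if missing:
        return None, f"missing keys: {sorted(missing)}"
    return data, None


def generate_with_retry(model, prompt, max_attempts=3):
    feedback = None
    for attempt in range(1, max_attempts + 1):
        raw = model(prompt, feedback)
        data, error = validate(raw)
        if error is None:
            return data, attempt
        # Feed the validation error back so the next attempt can correct it
        feedback = error
    raise RuntimeError(f"no valid output after {max_attempts} attempts: {feedback}")


def flaky_model(prompt, feedback):
    """Hypothetical model stub: wrong shape first, corrected once it receives feedback."""
    if feedback is None:
        return '{"city": "Paris"}'
    return '{"city": "Paris", "temperature": 14}'


data, attempts = generate_with_retry(flaky_model, "Weather in Paris as JSON")
print(data, "after", attempts, "attempts")
```

Capping the number of attempts matters: without it, a model that keeps producing the same invalid output would retry indefinitely.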

We’re heading toward a world where AI doesn’t just generate — it builds, acts, and learns safely through structured, verifiable tool use.

Conclusion — The Bridge Between Reasoning and Reality

Tool use and structured outputs are the backbone of Agentic AI autonomy.

They transform abstract reasoning into verifiable action.

Through function calling, schema validation, and orchestration frameworks, AI systems evolve from conversational assistants into intelligent collaborators that understand context, call tools responsibly, and return structured, trustworthy results.


Frequently Asked Questions

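What is function calling in Agentic AI?

It’s how an agent requests external functions (APIs, tools) through structured JSON instructions rather than plain text; the host application then executes them.

Why do structured outputs matter?

They ensure AI responses follow a strict schema, improving reliability and machine readability.

How are structured outputs produced?

The model's output is constrained to a schema, usually defined with Pydantic or Zod, and validated so that the model's text becomes a typed object.

How do function calling and structured outputs differ?

Function calling lets the model request actions that the host executes, while structured outputs govern the format and validation of the results.

Which frameworks support tool use and structured outputs?

The OpenAI API, LangChain, Google Gemini, Semantic Kernel, and CrewAI, all covered above, support them.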